A Man for All Markets

PREFACE

Join me in my odyssey through the worlds of science, gambling, and the securities markets. You will see how I overcame risks and reaped rewards in Las Vegas, Wall Street, and life. On the way, you will meet interesting people from blackjack card counters to investment experts, from movie stars to Nobel Prize winners. And you’ll learn about options and other derivatives, hedge funds, and why a simple investment approach beats most investors in the long run, including experts.

I began life in the Great Depression of the 1930s. Along with millions of others, my family was struggling to get by from one day to the next. Though we didn’t have helpful connections and I went to public schools, I found a resource that made all the difference: I learned how to think.

Some people think in words, some use numbers, and still others work with visual images. I do all of these, but I also think using models. A model is a simplified version of reality, like a street map that shows you how to travel from one part of a city to another or the vision of a gas as a swarm of tiny elastic balls ceaselessly bouncing against one another.

I learned that simple devices such as gears, levers, and pulleys follow basic rules. You could discover the rules by experimenting and, if you got them right, could then use the rules to predict what would happen in new situations.

Most amazing to me was the magic of a crystal set—an early primitive radio made with wire, a mineral crystal, and headphones. Suddenly, I heard voices coming from hundreds or thousands of miles away, carried through the air by some mysterious process. The notion that things I couldn’t even see followed rules I could discover just by thinking—and that I could use what I discovered to change the world—inspired me from an early age.

Because of circumstances, I was largely self-taught and that led me to think differently. First, rather than subscribing to widely accepted views—such as you can’t beat the casinos—I checked for myself. Second, since I tested theories by inventing new experiments, I formed the habit of taking the result of pure thought—such as a formula for valuing warrants—and using it profitably. Third, when I set a worthwhile goal for myself, I made a realistic plan and persisted until I succeeded. Fourth, I strove to be consistently rational, not just in a specialized area of science, but in dealing with all aspects of the world. I also learned the value of withholding judgement until I could make a decision based on evidence.

I hope my story will show you a unique perspective and that A Man for All Markets will help you think differently about gambling, investments, risk, money management, wealth-building, and life.

FOREWORD

Ed Thorp’s memoir reads like a thriller—mixing wearable computers that would have made James Bond proud, shady characters, great scientists, and poisoning attempts (in addition to the sabotage of Ed’s car so he would have an “accident” in the desert). The book reveals a thorough, rigorous, methodical person in search of life, knowledge, financial security, and, not least of all, fun. Thorp is also known to be a generous man, intellectually speaking, eager to share his discoveries with random strangers (in print but also in person)—something you hope to find in scientists but usually don’t. Yet he is humble—he might qualify as the only humble trader on planet Earth—so, unless the reader can reinterpret what’s between the lines, he or she won’t notice that Thorp’s contributions are vastly more momentous than he reveals. Why?

Because of their simplicity. Their sheer simplicity.

For it is the straightforward character of his contributions and insights that made them both invisible in academia and useful for practitioners. My purpose here is not to explain or summarize the book; Thorp—not surprisingly—writes in a direct, clear, and engaging way. I am here, as a trader and a practitioner of mathematical finance, to show its importance and put it in context for my community of real-world scientist-traders and risk-takers in general.

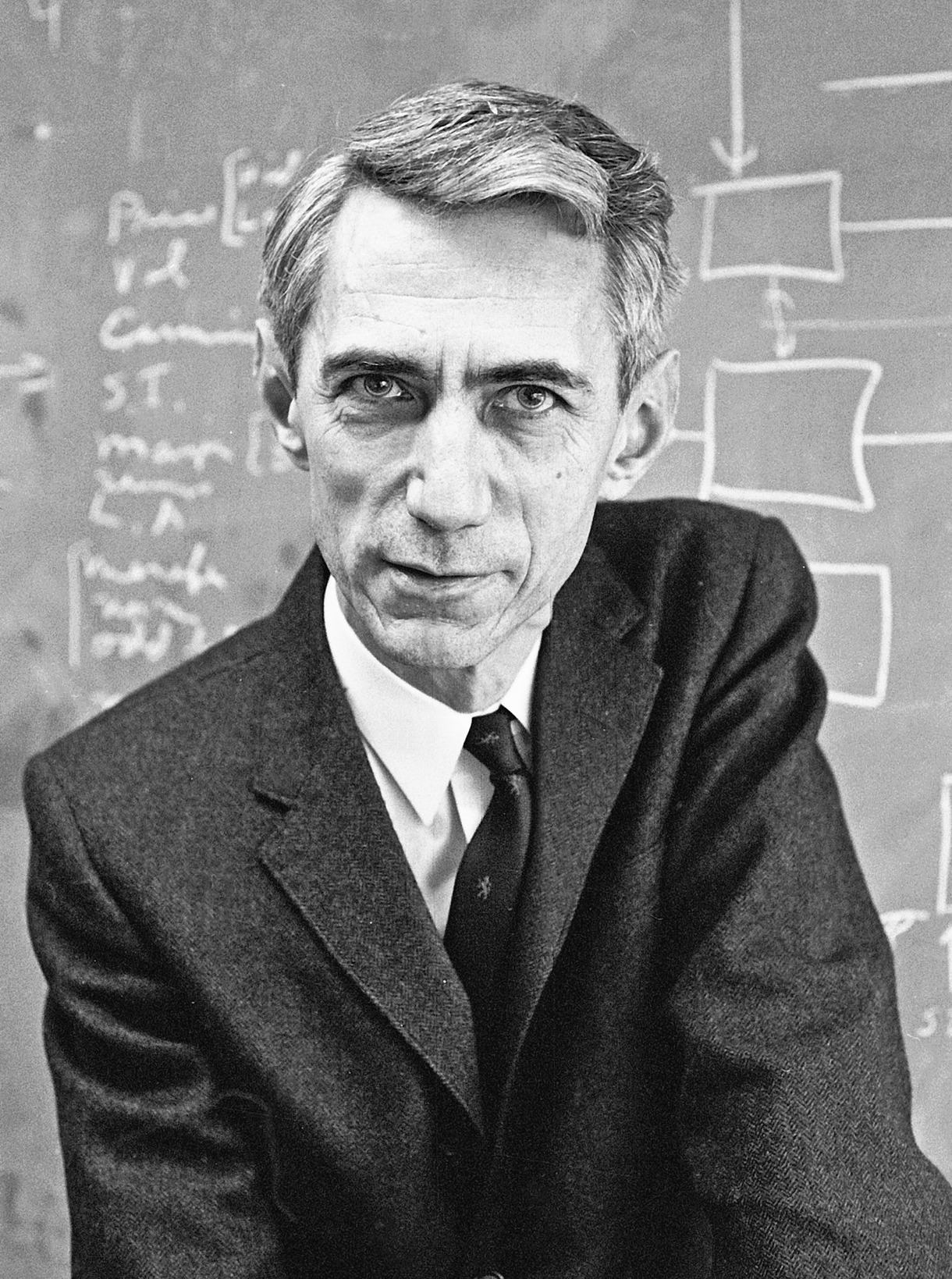

That context is as follows. Ed Thorp is the first modern mathematician who successfully used quantitative methods for risk taking—and most certainly the first mathematician who met financial success doing it. Since then a cohort of such “quants” has emerged, among them the whiz kids in applied mathematics at SUNY Stony Brook—but Thorp is their dean.

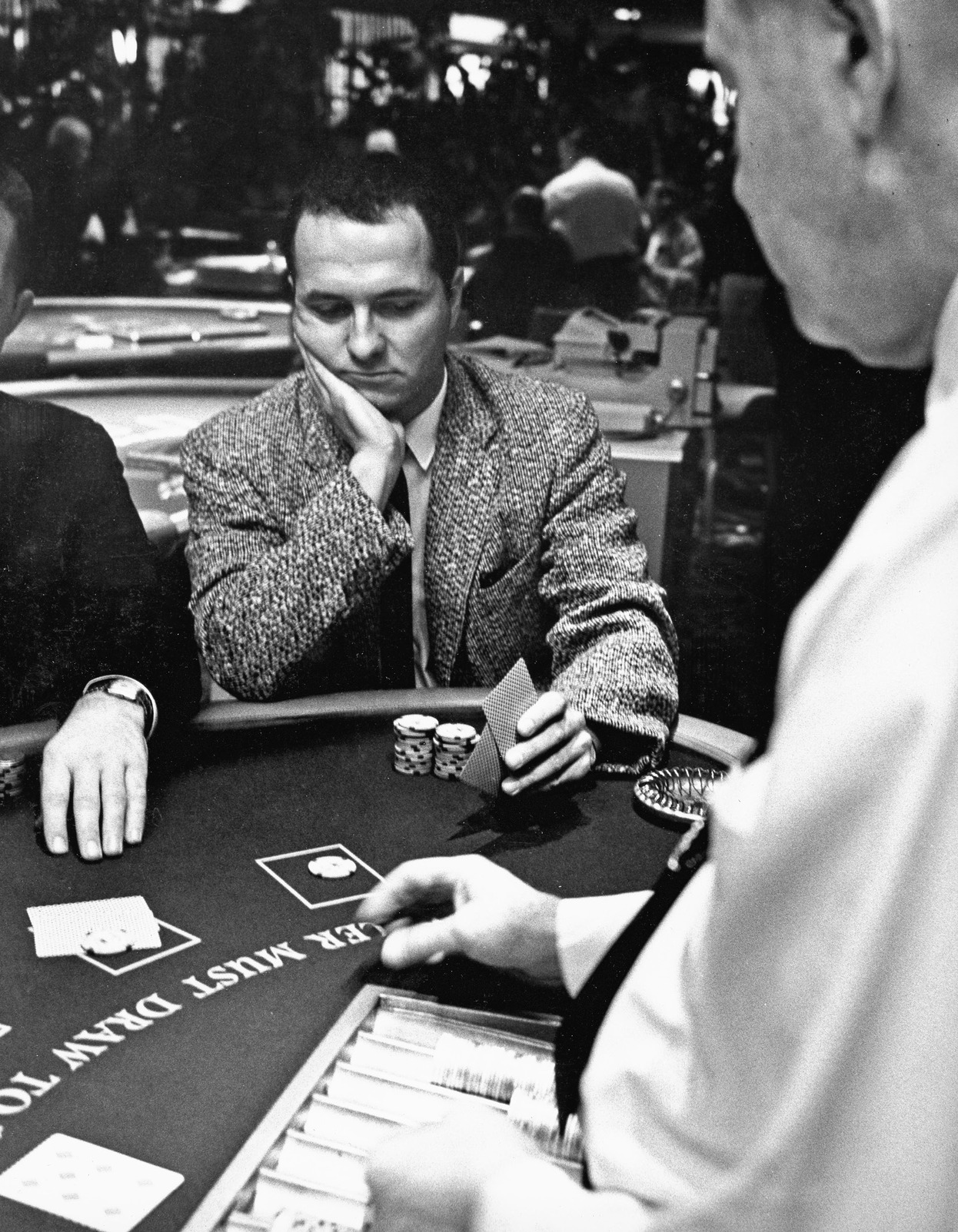

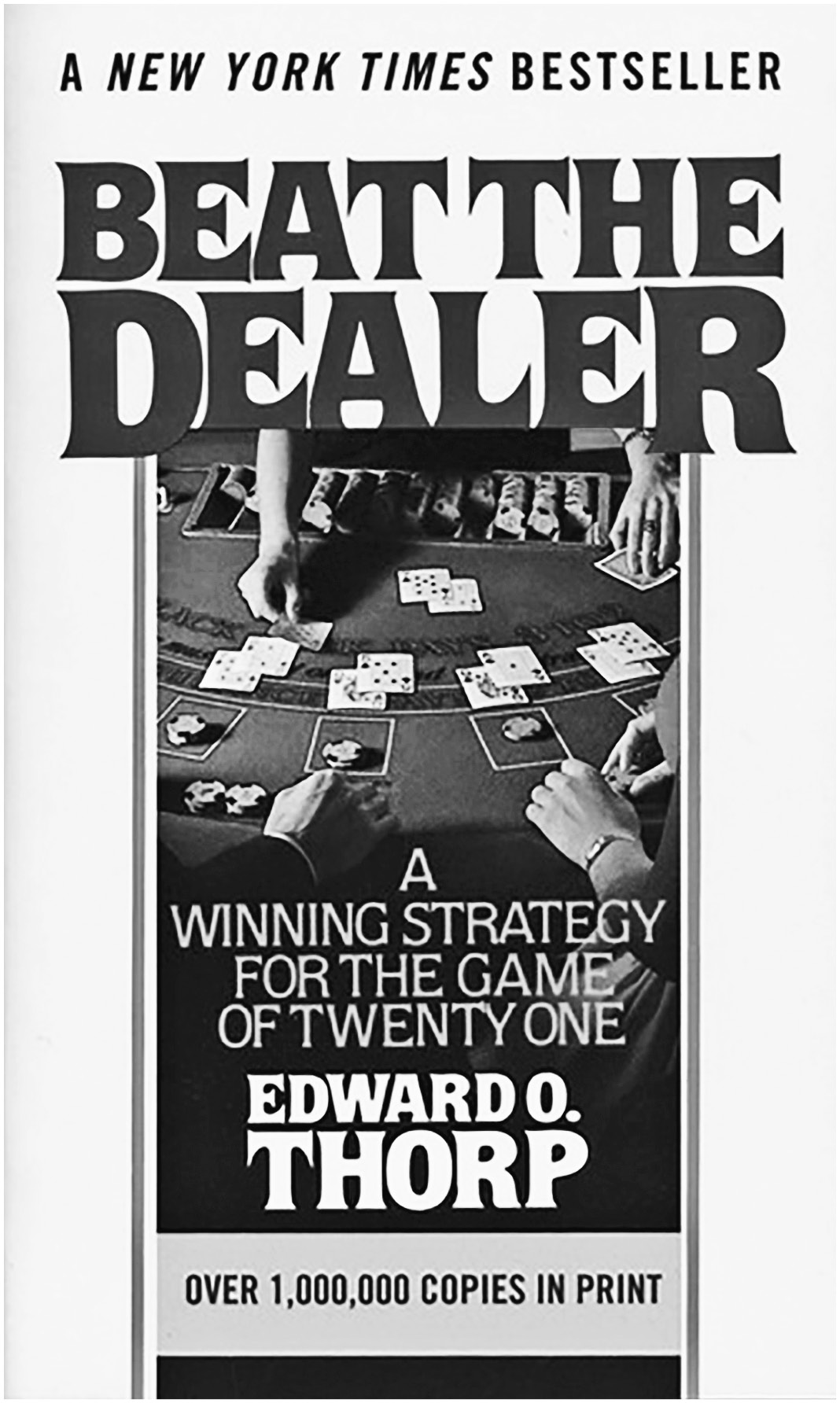

His main and most colorful predecessor, Girolamo (sometimes Geronimo) Cardano, a sixteenth-century polymath and mathematician who—sort of—wrote the first version of Beat the Dealer, was a compulsive gambler. To put it mildly, he was unsuccessful at it—not least because addicts are bad risk-takers; to be convinced, just take a look at the magnificence of Monte Carlo, Las Vegas, and Biarritz, places financed by their compulsion. Cardano’s book Liber de ludo aleae (“Book on Games of Chance”) was instrumental in the later development of probability, but, unlike Thorp’s book, was less of an inspiration for gamblers and more for mathematicians. Another mathematician, a French Protestant refugee in London, Abraham de Moivre, a frequenter of gambling joints and the author of The doctrine of chances: or, a method for calculating the probabilities of events in play (1718) could hardly make both ends meet. One can easily count another half a dozen mathematician-gamblers, including greats like Fermat and Huygens—who were either indifferent to the bottom line or not particularly good at mastering it. Before Ed Thorp, mathematicians of gambling had their love of chance largely unrequited.

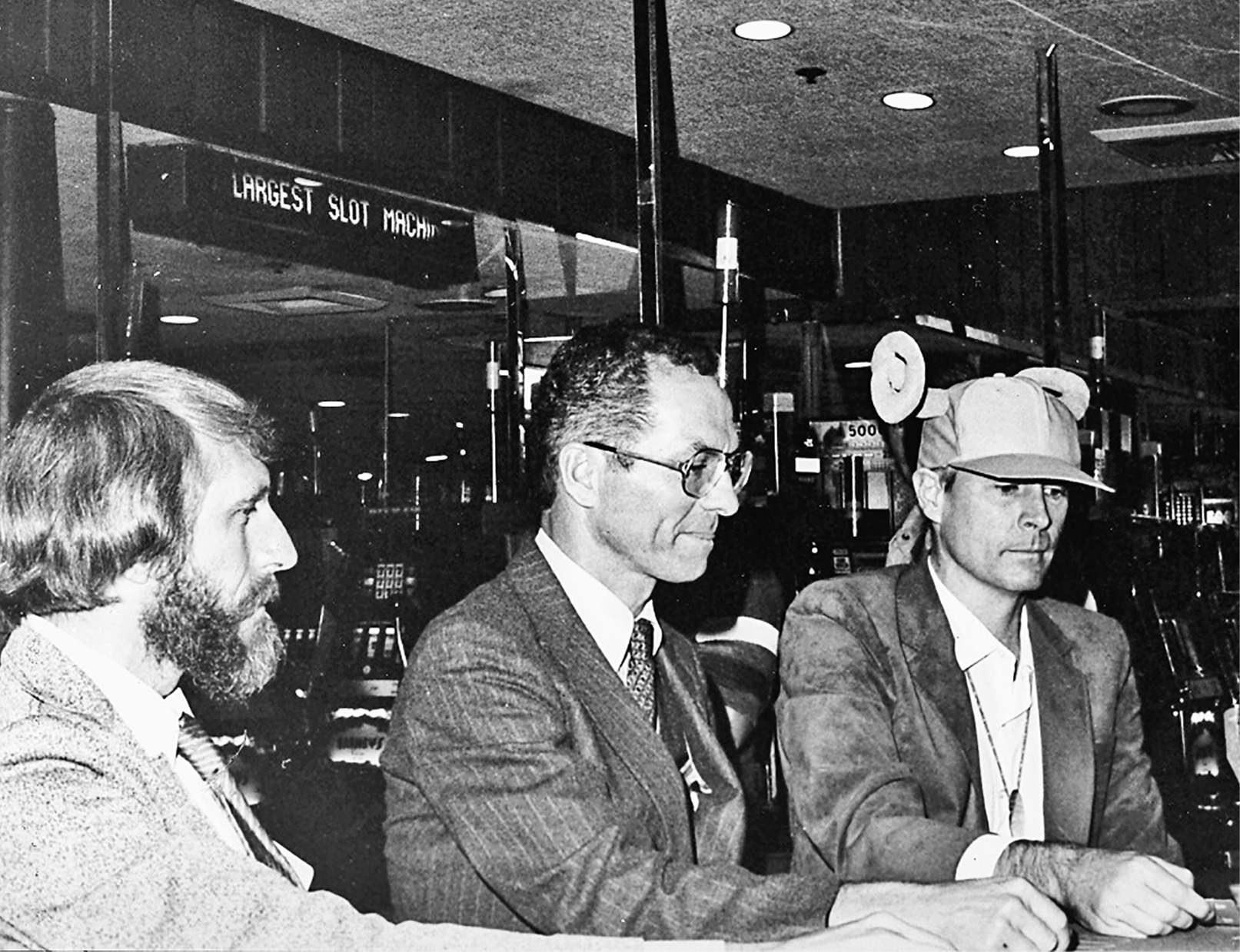

Thorp’s method is as follows: He cuts to the chase in identifying a clear edge (that is something that in the long run puts the odds in his favor). The edge has to be obvious and uncomplicated. For instance, calculating the momentum of a roulette wheel, which he did with the first wearable computer (and with no less a coconspirator than the great Claude Shannon, father of information theory), he estimated a typical edge of roughly 40 percent per bet. But that part is easy, very easy. It is capturing the edge, converting it into dollars in the bank, restaurant meals, interesting cruises, and Christmas gifts to friends and family—that’s the hard part. It is the dosage of your betting—not too little, not too much—that matters in the end. For that, Ed did great work on his own, before the theoretical refinement that came from a third member of the Information Trio: John Kelly, originator of the famous Kelly Criterion, a formula for placing bets that we discuss today because Ed Thorp made it operational.

A bit more about simplicity before we discuss dosing. For an academic judged by his colleagues, rather than the bank manager of his local branch (or his tax accountant), a mountain giving birth to a mouse, after huge labor, is not a very good thing. They prefer the mouse to give birth to a mountain; it is the perception of sophistication that matters. The more complicated, the better; the simple doesn’t get you citations, H-values, or some such metric du jour that brings the respect of the university administrators, as they can understand that stuff but not the substance of the real work. The only academics who escape the burden of complication-for-complication’s sake are the great mathematicians and physicists (and, from what I hear, even for them it’s becoming harder and harder in today’s funding and ranking environment).

Ed was initially an academic, but he favored learning by doing, with his skin in the game. When you reincarnate as a practitioner, you want the mountain to give birth to the simplest possible strategy, and one that has the smallest number of side effects, the minimum possible hidden complications. Ed’s genius is demonstrated in the way he came up with very simple rules in blackjack. Instead of engaging in complicated combinatorics and memory-challenging card counting (something that requires one to be a savant), he crystallizes all his sophisticated research into simple rules: Go to a blackjack table. Keep a tally. Start with zero. Add one for some strong cards, subtract one for weak ones, and nothing for others. It is mentally easy to just bet incrementally up and down—bet larger when the number is high, smaller when it is low—and such a strategy is immediately applicable by anyone with the ability to tie his shoes or find a casino on a map. Even while using wearable computers at the roulette table, the detection of edge was simple, so simple that one can get it while standing on a balance ball in the gym; the fanciness resides in the implementation and the wiring.
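The tally described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text does not say which cards fall into which group, so the sketch borrows the common "Hi-Lo" grouping, and the bet ramp in `bet_size` is a hypothetical rule invented here to show "bet larger when the number is high".

```python
# Illustrative running tally in the spirit of the rules described above.
# ASSUMPTION: card groups follow the common "Hi-Lo" convention
# (2-6 add one, 10/J/Q/K/A subtract one, 7-9 count as nothing).
# The text itself does not specify the groups.
PLUS_ONE = {"2", "3", "4", "5", "6"}
MINUS_ONE = {"10", "J", "Q", "K", "A"}


def update_tally(tally, card):
    """Return the tally after one more card rank has been seen."""
    if card in PLUS_ONE:
        return tally + 1
    if card in MINUS_ONE:
        return tally - 1
    return tally


def running_tally(cards):
    """Start with zero and fold every seen card into the tally."""
    tally = 0
    for card in cards:
        tally = update_tally(tally, card)
    return tally


def bet_size(tally, base_bet=1, max_units=5):
    """Hypothetical bet ramp: one unit, plus one per positive count, capped."""
    return base_bet * max(1, min(max_units, 1 + tally))


seen = ["2", "5", "K", "9", "3", "6", "A", "4"]
count = running_tally(seen)
print(count, bet_size(count))
```

The whole state is a single integer, which is the point of the passage: the research is sophisticated, but the rule a player carries to the table is not.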

As a side plot, Ed discovered what is known today as the Black-Scholes option formula, before Black and Scholes (and it is a sign of economics public relations that the formula doesn’t bear his name—I’ve called it Bachelier-Thorp). His derivation was too simple—nobody at the time realized it could be potent.

Now money management—something central for those who learn from being exposed to their own profits and losses. Having an “edge” and surviving are two different things: The first requires the second. As Warren Buffett said: “In order to succeed you must first survive.” You need to avoid ruin. At all costs.

And there is a dialectic between you and your P/L: You start betting small (a proportion of initial capital) and your risk control—the dosage—also controls your discovery of the edge. It is like trial and error, by which you revise both your risk appetite and your assessment of your odds one step at a time.

Academic finance, as has been recently shown by Ole Peters and Murray Gell-Mann, did not get the point that avoiding ruin, as a general principle, makes your gambling and investment strategy extremely different from the one that is proposed by the academic literature. As we saw, academics are paid by administrators via colleagues to make life complicated, not simpler. They invented something useless called utility theory (tens of thousands of papers are still waiting for a real reader). And they invented the idea that one could get to know the collective behavior of future prices in infinite detail—things like correlation, that could be identified today and would never change in the future. (More technically, to implement the portfolio construction suggested by modern financial theory, one needs to know the entire joint probability distribution of all assets for the entire future, plus the exact utility function for wealth at all future times. And without errors! [I have shown that estimation errors make the system explode.] We are lucky if we can know what we will eat for lunch tomorrow—how can we figure out the dynamics until the end of time?)

The Kelly-Thorp method requires no joint distribution or utility function. In practice, one needs the ratio of expected profit to worst-case return—dynamically adjusted (that is, one gamble at a time) to avoid ruin. That’s all.
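As a rough illustration of sizing one gamble at a time, the sketch below uses the textbook Kelly fraction for a simple win-or-lose bet. That formula is an assumption brought in here, not something the foreword spells out, and the names `kelly_fraction` and `next_stake` are hypothetical.

```python
def kelly_fraction(p_win, net_odds):
    """Textbook Kelly fraction for a bet that wins `net_odds` per unit
    staked with probability `p_win` and loses the stake otherwise.

    ASSUMPTION: a single binary bet; this is the standard formula, used
    here as a stand-in for the informal description in the text.
    """
    if not 0.0 <= p_win <= 1.0:
        raise ValueError("p_win must be a probability")
    if net_odds <= 0:
        raise ValueError("net_odds must be positive")
    # Expected profit per unit staked (the "edge").
    edge = p_win * net_odds - (1.0 - p_win)
    # With no edge the rule says not to bet at all.
    return max(0.0, edge / net_odds)


def next_stake(bankroll, p_win, net_odds):
    """Stake for the next gamble, re-sized from the current bankroll."""
    return bankroll * kelly_fraction(p_win, net_odds)


# Even-money bet won 55 percent of the time: stake about 10 percent.
print(round(kelly_fraction(0.55, 1.0), 4))
print(round(next_stake(1000.0, 0.55, 1.0), 2))
```

Because the stake is always a fraction of whatever capital remains, a losing streak shrinks the bets rather than wiping out the bankroll, which is the ruin-avoidance property the passage stresses.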

Thorp and Kelly’s ideas were rejected by economists—in spite of their practical appeal—because of economists’ love of general theories for all asset prices, dynamics of the world, etc. The famous patriarch of modern economics, Paul Samuelson, was supposedly on a vendetta against Thorp. Not a single one of the works of these economists will ultimately survive: Strategies that allow you to survive are not the same thing as the ability to impress colleagues.

So the world today is divided into two groups using distinct methods. The first method is that of the economists who tend to blow up routinely or get rich collecting fees for managing money, not from direct speculation. Consider that Long-Term Capital Management, which had the crème de la crème of financial economists, blew up spectacularly in 1998, losing a multiple of what they thought their worst-case scenario was.

The second method, that of the information theorists as pioneered by Ed, is practiced by traders and scientist-traders. Every surviving speculator uses explicitly or implicitly this second method (evidence: Ray Dalio, Paul Tudor Jones, Renaissance Technologies, even Goldman Sachs!). I said every because, as Peters and Gell-Mann have shown, those who don’t will eventually go bust.

And thanks to that second method, if you inherit, say, $82,000 from uncle Morrie, you know that a strategy exists that will allow you to double the inheritance without ever going through bankruptcy.

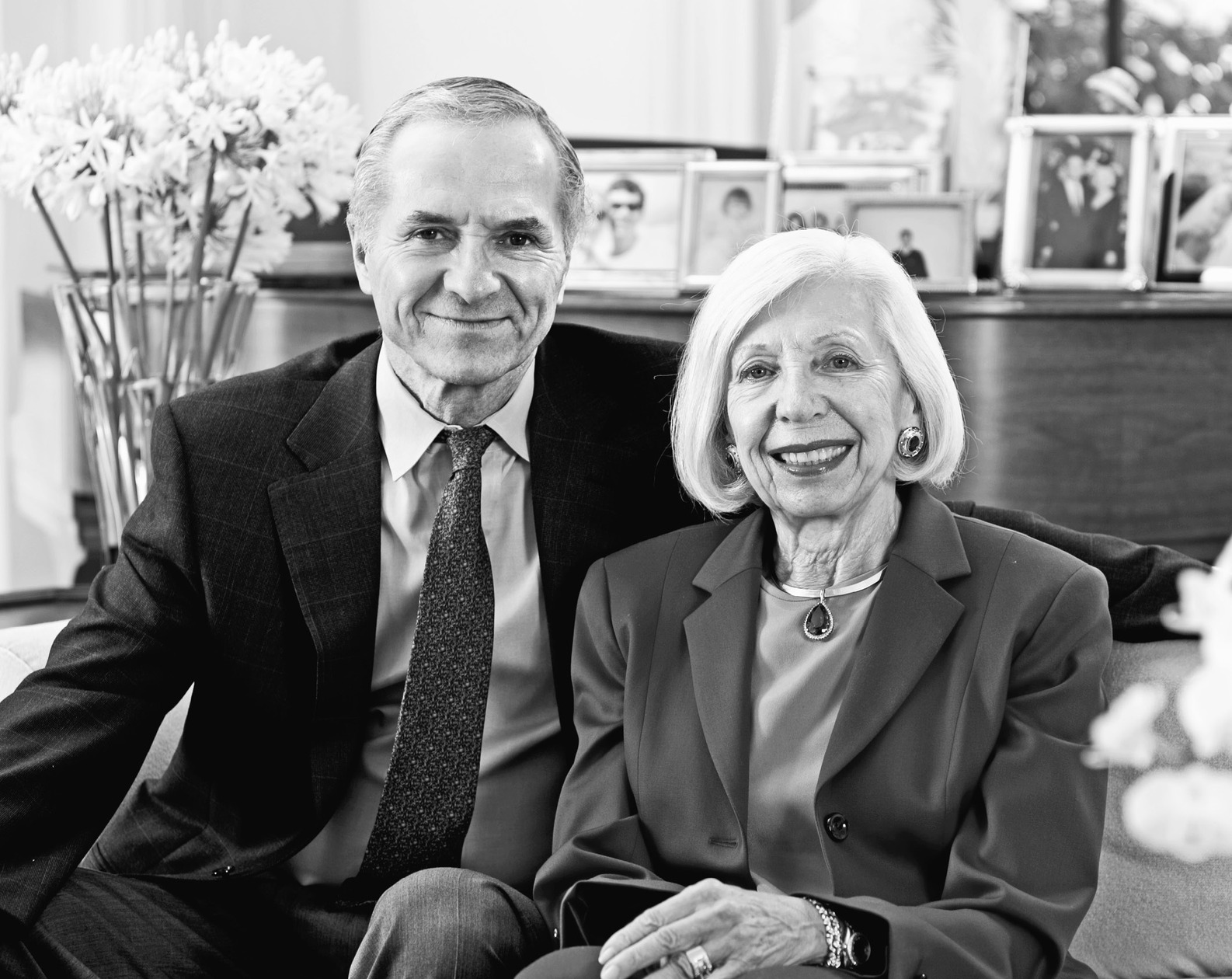

—

Some additional wisdom I personally learned from Thorp: Many successful speculators, after their first break in life, get involved in large-scale structures, with multiple offices, morning meetings, coffee, corporate intrigues, building more wealth while losing control of their lives. Not Ed. After the separation from his partners and the closing of his firm (for reasons that had nothing to do with him), he did not start a new mega-fund. He limited his involvement in managing other people’s money. (Most people reintegrate into the comfort of other firms and leverage their reputation by raising monstrous amounts of outside money in order to collect large fees.) But such restraint requires some intuition, some self-knowledge. It is vastly less stressful to be independent—and one is never independent when involved in a large structure with powerful clients. It is hard enough to deal with the intricacies of probabilities; you need to avoid the vagaries of exposure to human moods. True success is exiting some rat race to modulate one’s activities for peace of mind. Thorp certainly learned a lesson: The most stressful job he ever had was running the math department of the University of California, Irvine. You can detect that the man is in control of his life. This explains why he looked younger the second time I saw him, in 2016, than he did the first time, in 2005.

Ciao,

Nassim Nicholas Taleb

Chapter 1: LOVING TO LEARN

My first memory is of standing with my parents on an outdoor landing at the top of some worn and dirty wooden steps. It was a gloomy Chicago day in December 1934, when I was two years and four months old. Even wearing my only set of winter pants and a jacket with a hood, it was cold. Black and leafless, the trees stood out above the snow-covered ground. From inside the house a woman was telling my parents, “No, we don’t rent to people with children.” Their faces fell and they turned away. Had I done something wrong? Why was I a problem? This image from the depths of the Great Depression has stayed with me always.

I next recall being taken at age two and a half to our beloved family physician, Dr. Dailey. My alarmed parents explained that I had yet to speak a single word. What was wrong? The doctor smiled and asked me to point to the ball on his desk. I did so, and he asked me to pick up his pencil. After I had done this and a few more tasks he said, “Don’t worry, he’ll talk when he’s ready.” We left, my parents relieved and a little mystified.

After this, the campaign to get me to talk intensified. About the time of my third birthday, my mother and two of her friends, Charlotte and Estelle, took me along with them to Chicago’s then-famous Montgomery Ward department store. As we sat on a bench near an elevator, two women and a man got off. Charlotte, keen to tempt me into speech, asked, “Where are the people going?” I said clearly and distinctly, “The man is going to buy something and the two women are going to the bathroom to do pee-pee.” Charlotte and Estelle both blushed deeply at the mention of pee-pee. Far too young to have learned conventional embarrassment, I noticed this but didn’t understand why they reacted that way. I also was puzzled by the sensation I had caused with my sudden change from silence to talkativeness.

From then on I spoke largely in complete sentences, delighting my parents and their friends, who now plied me with questions and often received surprising answers. My father set out to see what I could learn.

Born in Iowa in 1898, my father, Oakley Glenn Thorp, was the second of three children, with his brother two years older and sister two years younger. When he was six his family broke up. His father took him and his brother to settle in the state of Washington. His mother and sister remained in Iowa. In 1915 my grandfather died from the flu, three years before the Great Flu Pandemic of 1918–19, which killed between twenty and forty million people worldwide. The two boys lived with an uncle until 1917. Then my father, at age eighteen, went to France to join World War I as part of the great American Expeditionary Force. He fought with the infantry in the trenches, rose from private to sergeant, and was awarded the Bronze Star, the Silver Star, and two Purple Hearts for heroism in places like Château-Thierry, Belleau Wood, and the Battles of the Marne. As a very small boy I remember sitting in his lap on a humid afternoon examining the shrapnel scars on his chest and the minor mutilation of some of his fingers.

Following his discharge from the army after the war, my father enrolled at Oklahoma A&M. He completed a year and a half before he had to leave for lack of funds, but his hunger and respect for education endured and he instilled them in me, along with his unspoken hope that I would achieve more. Sensing this and hoping it would bring us closer, I welcomed his efforts to teach me.

As soon as I began to talk, he introduced me to numbers. I found it easy to count first to a hundred, then to a thousand. Next I learned how to increase any number by adding one to get the next number, which meant I could count forever if I only knew the names of the numbers. I soon learned how to count to a million. Adults seemed to think this was a very big number so I sat down to do it one morning. I knew I could eventually get there but I had no idea how long it was going to take. To get started, I chose a Sears catalog the size of a big-city telephone book because it seemed to have the most things to count. The pages were filled with pictures of merchandise labeled with the letters A, B, C…, which I recall appeared as black letters in white circles. I started at the beginning of the catalog and counted all the circled letters, one for each item, page after page. After a few hours I fell asleep at something like 32,576. My mother reported that when I awoke I resumed with “32,577…”

A trait that showed up at about this time was my tendency not to accept anything I was told until I had checked it for myself. This had consequences. When I was three, my mother told me not to touch the hot stove because it would burn me. I brought my finger close enough to feel the warmth, then pressed the stove with my hand. Burned. Never again.

Another time, I was warned that fresh eggs would crack if they were squeezed just a little bit. Wondering what “a little bit” meant, I squeezed an egg very slowly until it cracked, then practiced squeezing another, stopping just before it would crack, to see exactly how far I could go. From the beginning, I loved learning through experimentation and exploration how my world worked.

—

After teaching me counting, my father’s next project for me was reading. We started with See Spot, See Spot Run, and See Jane. I was puzzled and disoriented for a couple of days; then I saw that the groups of letters stood for the words we spoke. In the next few weeks I went through all of our simple beginner books and developed a small vocabulary. Now it got exciting. I saw printed words everywhere and realized that if I could figure out how to pronounce them I might recognize them and know what they meant. Phonics came naturally, and I learned to sound out words so I could say them aloud. Next was the reverse process—hear a word and say the letters—spelling. By the time I turned five I was reading at the level of a ten-year-old, gobbling up everything I could find.

Our family dynamics also changed then, with the birth of my brother. My father, fortunate to be employed in the midst of the Great Depression, worked longer hours to support us. My mother was fully occupied by the new baby and was even more focused on him when, at six months of age, he caught pneumonia and nearly died. This left me much more on my own and I responded by exploring endless worlds, both real and imagined, to be found in the books my father gave me.

Over the next couple of years I read books including Gulliver’s Travels, Treasure Island, and Stanley and Livingstone in Africa. When, after an eight-month arduous and dangerous search, Stanley found his quarry, the only European known to be in Central Africa, I thrilled to his incredible understatement, “Dr. Livingstone, I presume,” and I discussed the splendor of the Victoria Falls on the Zambezi River with my father, who assured me (correctly) that they far surpassed our own Niagara Falls.

Gulliver’s Travels was a special favorite, with its tiny Lilliputians, giant Brobdingnagians, talking horses, and finally the mysterious Laputa, a flying island in the sky supported by magnetic forces. I enjoyed the vivid pictures it created in my mind and the fantastical notions that spurred me to imagine for myself further wonders that might be. But at the time Swift’s historical allusions and social satire mostly escaped me, despite explanations by my father.

From Malory’s story of King Arthur and the Knights of the Round Table, I learned about heroes and villains, romance, justice, and retribution. I admired the heroes who, through extraordinary abilities and resourcefulness, achieved great things. Introverted and thoughtful, I may have been inspired to mirror this in the future by using my mind to overcome intellectual obstacles, instead of my body to defeat human opponents. The books helped establish lifelong values of fair play, a level playing field for everyone, and treating others as I myself wish to be treated.

The words and adventures were largely in my head; I didn’t really have anyone to discuss them with, except sometimes my tired father after work or on weekends. This led to an occasional unique pronunciation. For instance, for a couple of years I thought misled (miss-LED) was pronounced MYE-zzled, and for years afterward when I saw the word in print I would hesitate for a beat as I mentally corrected my pronunciation.

When I was reading or just thinking, my concentration was so complete that I lost all awareness of my surroundings. My mother would call me, with no response. Thinking I was willfully ignoring her, she would raise her shout to a yell, then bring her flushed face right up to me. Only when she appeared in my visual field did I snap back into the here and now and respond. She had a hard time deciding whether her son was stubborn and badly behaved or was really as unaware as he claimed.

Though we were poor, my parents valued books and managed to buy me one occasionally. My father made challenging choices. As a result, between the ages of five and seven I carried around adult-looking books and strangers wondered if I actually knew what was in them. One man put me to an unexpected and potentially embarrassing test.

It happened because my parents became friends with the Kesters, who lived on a farm in Crete, Illinois, about forty-five miles from our home. They invited us out for two weeks every summer, starting in 1937 when I was turning five. These special days were what I most looked forward to each year. For a city boy from the outskirts of Chicago, it was sheer joy to watch “water spiders” scoot over the surface of a slowly meandering creek, to play hide-and-seek in the fields of tall corn, to catch butterflies and display them arrayed and mounted on boards, and to wander through the fields and among the cottonwood trees and orchards. The Kesters’ oldest boy, strapping twentysomething Marvin, would carry me around on his shoulders. My mother, along with the women of the household, Marvin’s pretty sister Edna Mae, their mother, and their aunt May, would preserve massive quantities of fruits and vegetables. In our basement back home my father built racks for the rubber-sealed mason jars of corn, peaches, and apricots that we brought back. Then there were the rows of fruit jellies, jams, and preserves in glasses sealed with a layer of paraffin on top. This cornucopia would last us well into the next year.

My father helped Marvin and his father, Old Man Kester, with the work of the farm, and sometimes I tagged along. One sunny forenoon during the second summer of our two weeks in Crete, my father took me to pick up supplies at a local store. I was just turning six, tall and thin with a mop of curly brown hair, lightly tanned, pants too short, the bare ankles ending in a pair of tennis shoes with frayed laces. I was carrying A Child’s History of England by Charles Dickens.

A stranger chatting with my father took the volume I was holding, written at the tenth-grade level, thumbed through it, then told my father, “That kid can’t read this book.” My father replied proudly, “He’s already read it. Ask him a question and you’ll see.”

With a smirk the man said, “Okay, kid, name all the kings and queens of England in order and tell me the years that they reigned.” My father’s face fell but to me this seemed to be just another routine request to look into my head to see if the information was there.

I did and then recited, “Alfred the Great, began 871, ended 901, Edward the Elder, began 901, ended 925,” and so on. As I finished the list of fifty or so rulers with “Victoria, began in 1837 and it doesn’t say when she ended,” the man’s smirk had long vanished. Silently he handed me back the book. My father’s eyes were shining.

My father was a sad and lonely man who didn’t express his feelings and who rarely touched us, but I loved him. I felt that this stranger was using me to put him down and I realized that I had stopped it. Whenever I remember my dad’s happiness at this, it echoes in me with a force that still seems undiminished.

My unusual retention of information was pronounced until I was about nine or ten, when it faded into a memory that is very good for what I’m interested in and, with exceptions, not especially remarkable for much else. I still remember facts from this time such as my phone number (Lackawanna 1123) and address (3627 North Oriole; 7600 W, 3600 N) in Chicago and Chicago’s seven-digit population (3,376,438), cited in the old green 1930 Rand McNally Atlas and Gazetteer that’s still on my bookshelf.

Between the ages of three and five I learned to add, subtract, multiply, and divide numbers of any size. I also learned the US version of the prefixes million, billion, trillion, and so on, up to decillion. I found that I could add columns of figures quickly by either seeing them or hearing them. One day when I was five or six I was in the neighborhood grocery store with my mother and overheard the owner calling out the prices as he totaled up the customer’s bill on his adding machine. When he announced the answer, I said no, and gave him my number. He laughed good-naturedly, added the numbers again, and found I was right. To my delight he rewarded me with an ice-cream cone. After that I dropped by when I could and checked his totals. On the rare occasions when we disagreed, I was usually correct and would get another cone.

My father taught me to compute the square root of a number. I learned to do it with pencil and paper as well as to work out the answer in my head. Then I learned to do cube roots.

Before the advent of writing and books, human knowledge was memorized and transmitted down the generations by storytellers; but when this skill wasn’t necessary it declined. Similarly, in our time with the ubiquity of computers and hand calculators, the ability to carry out mental calculations has largely disappeared. Yet a person who knows just grammar school arithmetic can learn to do mental calculations comfortably and habitually.

This skill, especially to make rapid approximate calculations, remains valuable, particularly for assessing the quantitative statements that one continually encounters. For instance, listening to the business news on the way to my office one morning, I heard the reporter say, “The Dow Jones Industrial Average [DJIA] is down 9 points to 11,075 on fears of a further interest rate rise to quell an overheated economy.” I mentally estimated a typical (one standard deviation) DJIA change from the previous close, by an hour after the open, at about 0.6 percent or about sixty-six points. The probability of the reported move of “at least” nine points, or less than a seventh of this, was about 90 percent, so the market action was, contrary to the report, very quiet and hardly indicative of any fearful response to the news. There was nothing to worry about. Simple math allowed me to separate hype from reality.
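The estimate above can be checked with a short calculation. The sketch assumes the move from the previous close is roughly normally distributed, which the text does not state; the 0.6 percent standard deviation and the index level are taken from the passage, and the function name is hypothetical.

```python
import math


def two_sided_tail(z):
    """P(|Z| >= z) for a standard normal variable Z."""
    return math.erfc(z / math.sqrt(2.0))


index_level = 11_075
# One standard deviation, per the passage: about 0.6 percent of the index.
typical_move = 0.006 * index_level
reported_move = 9

# The reported move measured in standard deviations (under a seventh).
z = reported_move / typical_move

# ASSUMPTION: normally distributed moves. Chance of a move at least this big.
prob = two_sided_tail(z)

print(round(typical_move, 1), round(z, 3), round(prob, 2))
```

Under that assumption the probability comes out close to the "about 90 percent" quoted in the text, so a nine-point move is what one would expect on nearly any ordinary morning.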

Another time, a well-known and respected mutual fund manager reported that Warren Buffett, since he took over Berkshire Hathaway, had compounded money after taxes at 23 to 24 percent annually. Then he said, “Those kind of numbers will not be achieved in the next ten years—he’d own the world.” A quick mental estimate of what $1 grows to in ten years compounded at 24 percent gave me a little over $8. (A calculator gives $8.59.) Since, at the time, Berkshire had a market cap of about $100 billion, this rate of growth would bring the company to a market value of roughly $859 billion. This falls far short of my guesstimate of $400 trillion for the present market value of the world. The notion of a market value for the whole world reminds me of a sign I saw on an office door in the Physics Department of the University of California, Irvine. It read EARTH PEOPLE, THIS IS GOD. YOU HAVE THIRTY DAYS TO LEAVE. I HAVE A BUYER FOR THE PROPERTY.
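The arithmetic in this example is easy to reproduce. The figures below are the ones quoted in the passage; the variable names are chosen here for illustration only.

```python
annual_rate = 0.24          # 24 percent compounded annually
years = 10
market_cap = 100e9          # Berkshire at the time, about $100 billion
world_value = 400e12        # the author's guesstimate, $400 trillion

# What $1 grows to after ten years of compounding.
growth = (1.0 + annual_rate) ** years

# Projected market value after ten years at that rate.
projected_cap = market_cap * growth

# Share of the guesstimated world value that this would represent.
share_of_world = projected_cap / world_value

print(round(growth, 2))                 # about 8.59, as in the text
print(round(projected_cap / 1e9))       # in billions of dollars
print(f"{share_of_world:.2%}")
```

Even at 24 percent a year for a decade, the projected value is a fraction of one percent of the guesstimated total, which is why the fund manager's "he'd own the world" does not survive a quick check.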

Just after I turned five I started kindergarten at Dever Grammar School in northwest Chicago. I was immediately puzzled by why everything we were asked to do was so easy. One day our teacher gave us all blank paper and told us to draw a copy of an outline of a horse from a picture she had given us. I put little dots on the picture and used a ruler to measure the distance from one to the next. Then I reproduced the dots on my piece of paper, using the ruler to make the distance between them the same as they were on the picture and with my eye estimating the proper angles. Next, I connected up the new dots smoothly, matching the curves as well as I could. The result was a close copy of the original sketch.

My father had shown me this method and also how to use it to draw magnified or reduced versions of a figure. For example, to draw at double scale, just double the distance between the dots on the original drawing, keeping angles the same when placing the new dots. To triple the scale, triple the distance between dots, and so on. I called the other kids over, showed them what I had done and how to do it, and they set to work. We all handed in copies using my method instead of the freehand sketches the teacher expected, and she wasn’t happy.
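The dot method amounts to uniform scaling: every distance is multiplied by the same factor while angles stay fixed. A minimal sketch, with hypothetical names and made-up sample dots, might look like this.

```python
import math


def scale_points(points, factor, origin=(0.0, 0.0)):
    """Scale each (x, y) dot away from `origin` by `factor`.

    Distances between dots are multiplied by `factor`; angles between
    the connecting segments are unchanged.
    """
    ox, oy = origin
    return [(ox + factor * (x - ox), oy + factor * (y - oy)) for x, y in points]


# Three made-up dots standing in for points marked on an outline.
outline = [(0.0, 0.0), (3.0, 4.0), (6.0, 0.0)]
doubled = scale_points(outline, 2)

# The gap between the first two dots before and after doubling.
before = math.dist(outline[0], outline[1])
after = math.dist(doubled[0], doubled[1])
print(doubled, before, after)
```

Using a factor of 1 reproduces the same-size copy from the kindergarten exercise, and a factor below 1 gives the reduced version.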

A few days later the teacher had to leave the room for a few minutes. We were told to entertain ourselves with some giant (to us) one-foot-sized hollow wooden blocks. I thought it would be fun to build a great wall so I organized the other kids and we quickly assembled a large terraced mass of blocks. Unfortunately my project totally blocked the rear door—and that was the one the teacher chose when she attempted to reenter the classroom.

The last straw came a few days later. I sat on one of the school’s tiny chairs meant for five-year-olds and discovered that one of the two vertical back struts was broken. A sharp splintered shard stuck up from the seat where it had separated from the rest of the strut, so the whole back was now fragilely supported only by the one remaining upright. The hazard was obvious, and something needed to be done. I found a small saw and quietly cut off both struts flush with the chair’s seat, neatly converting it into a perfect little stool. At this, the teacher sent me to the principal’s office and my parents were called in for a serious conference.

The principal interviewed me and immediately recommended that I be moved up to first grade. After a few days in my new class, it was clear that the work there also was much too easy. What to do? Another parent–teacher conference. The principal suggested skipping me again into second grade. But I had barely been old enough to qualify for kindergarten: I was a year and a half younger on average than my first-grade classmates. My parents felt that skipping another grade would leave me at an extreme social, emotional, and physical disadvantage. Looking back on twelve years of pre-college schooling, where I was among the smallest and always the youngest in my class, I think they were right.

As we were barely managing on my father’s Depression-era wages, an academically advanced private school was never an option. We were fortunate that he had found work as a security guard at the Harris Trust and Savings Bank. His battlefield medals from World War I may have helped.

The Depression permeated every facet of our lives. Living on my father’s $25-a-week salary, we never wasted food, and we wore our clothes until they fell apart. I treasured objects such as the Smith Corona typewriter my father had won in a writing contest and the military binoculars he used in World War I. Eventually both became part of my tiny collection of possessions and followed me for the next thirty years. For the rest of my life I would meet Depression-era survivors who retained a compulsive, often irrational frugality and an economically inefficient tendency to hoard.

Money was scarce and no one scorned pennies. Seeing the perspiring WPA workers in the streets (created by presidential order in 1935, “Works Progress Administration” was the largest of FDR’s New Deal programs to provide useful work for the unemployed), I borrowed a nickel and bought a packet of Kool-Aid, from which I made six glasses that I sold to them for a penny each. I continued to do this and found that it took a lot of work to earn a few cents. But the next winter, when my father gave me a nickel to shovel the snow from our sidewalk, I hit a bonanza. I offered the same deal to our neighbors and, after an exhausting day of snow removal, returned home soaked in sweat and bearing the huge sum of a couple of dollars, almost half of what my father was paid per day. Soon lots of the kids were out following my lead and the bonanza ended—an early lesson in how competition can drive down profits.

The Christmas I was eight, my father gave me a chess set. A friend of his made the board by gluing squares of light and dark wood on a piece of felt, so I could fold the board in half or even roll it up. The pieces were the classic Staunton-style, the kind I have ever after preferred, with ebony-black chessmen opposing a pine-colored white force. After I had learned the basics from my father, our neighbor across the back alley, “Smitty” Smittle, decided to entertain himself by playing against me. I was often at his house to use his pool table, having recently been granted the privilege. Smitty won our first two chess games easily, but then it got tougher. A few games later, I won. Smitty never won again, and after increasingly one-sided routs, he abruptly refused to play me. That evening my father told me I was no longer welcome at Smitty’s pool table.

“But why?” I asked.

“Because he’s afraid you’ll tear the felt with the cue.”

“But that makes no sense. I’ve been playing there for a while and he can see how careful I’ve been.”

“I know, but that’s what he wants.”

I was disappointed and indignant at this treatment. In my world of books, ability, hard work, and resourcefulness were rewarded. Smitty should have been pleased that I was doing well, and if he wanted to do better, he should practice and study, rather than penalize me.

Before another Christmas, this miniature war on the chessboard would be followed by the United States’ entry into the already raging World War II.

My last prewar spring of 1941 I got the measles. As it was widely believed that bright light could ruin my eyes, I was confined in a shaded room. To keep me from straining my eyes, books were removed. Not allowed to read, and bored, I discovered an atlas that had been mistakenly left in the room. For the next two weeks I studied the maps, read the write-ups on all the individual countries, and gave myself an education in geography and a facility with maps that would serve me well for a lifetime. Then I used the atlas to follow the battles around the world. I became interested in the military strategy of the antagonists. How were they deploying their forces? Why? What were they thinking? From daily radio and newspaper reports of the fighting, I used a pencil to shade in on the maps, step by step, the frightening, ever-expanding area under Axis control. I did this throughout the war, using an eraser when the Allies reclaimed territory.

That summer while we wondered whether the United States would, as we expected, enter the war, my mother’s brother Edward came to visit. Chief engineer on a ship in the merchant marine, he was classically tall, dark, and handsome with his uniform, his mustache, and a slight Spanish accent giving him the persona and appearance of a Latin Clark Gable. My parents and teacher thought I spent too much time in my head (I’m afraid I still do), and that it would be healthy for me to learn to do things with my hands. After initial resistance on my part, I was lured with Uncle Ed’s help into the world of model airplanes, and we spent several wonderful weeks making our own air force.

The boxed kits came with lots of fragile balsa-wood sticks and some sheets with other plane parts to be carefully cut from outlines. We taped the large sheet of plans onto a piece of cardboard and glued balsa-wood pieces together after laying them on the plan and holding them in place with pins. When we had completed the wings, the fuselage top, bottom, and sides, and the tail sections, we assembled them into a completed skeleton and covered it by gluing on tissue paper. I remember the pervasive acetone smell from drying glue, like that of some brands of nail polish remover. My first propeller-driven planes, powered by rubber band motors, didn’t fly well. They were too heavy because I had used excessive amounts of glue to be sure everything would hold together. When I learned to use glue more judiciously, I had some satisfying flights. The skills from model building and using tools were a valuable prequel to the science experiments that would occupy me during the next few years, and my introduction to planes helped me follow the details of the great air battles of World War II. I was sorry to see Uncle Ed go and worried about what would happen to him if war came.

Later in that pre–Pearl Harbor summer of 1941 my parents bought their first car, a new Ford sedan, for $800. We drove “America’s mother route,” historic Highway 66, from Chicago to California, where we visited friends from the Philippines who had settled in the picturesque art colony of Laguna Beach. Each year they had mailed us a little box of candy oranges, which my brother and I eagerly awaited. Now we saw groves of real orange trees.

Then the great world war that was consuming Europe and Asia struck the United States. Late on the morning of Sunday, December 7, 1941, we were listening to music on the radio and decorating our Christmas tree when an authoritative voice broke in: “We interrupt this program to bring you a special announcement. The Japanese have just bombed Pearl Harbor.” A frisson ran through me. Suddenly the world had changed in a momentous way for all of us.

“The president will address the nation shortly. Stay tuned.”

The next morning (California time), Franklin Delano Roosevelt addressed the nation asking Congress to declare war, uttering the phrase that electrified me, and the millions of others listening, “a date which will live in infamy…” When we had recess at school the next day, I was astonished to see the other children playing and laughing as usual. They seemed wholly unaware of what was to come. As I had been following the war closely, I stood alone off to one side, silent and grave.

Our immediate concern was for my mother’s family in the Philippine Islands. My mother’s father had left Germany and gone to work as an accountant for the Rockefellers in the Philippines. There he met and married my grandmother. They, along with six of my mother’s siblings and their children, were trapped in Manila when the Japanese invaded the islands just ten hours after the attack on Pearl Harbor. We heard nothing further from them. As the eldest of five sisters and three brothers, all fluent in both English and Spanish, my mother was a life-of-the-party extrovert. She was a head-turner, too, as evidenced by a picture I found decades later of her aged forty, with a black one-piece bathing suit showing off her dark hair and five-foot-two, 108-pound movie-star figure against the background of the Pacific Ocean. Her parents, along with the other siblings and their families, except for Uncle Ed, were living in Manila, the capital. We would not learn their varied fates for more than three years, until after the islands were liberated near the end of the war in the Pacific. Meanwhile, my nine-year-old eyes followed in detail the Battle of Bataan, reports of the horrors of the Bataan Death March, and the heroic resistance by the island fortress of Corregidor in Manila Bay.

For this I had my own father as a living guide. He had been stationed on Corregidor as a member of the Philippine constabulary, which the United States created, and he accurately foretold that Corregidor would fall only when the troops, weapons, ammunition, and food were exhausted. It became a twentieth-century version of the Alamo. After leaving Oklahoma A&M in order to support himself, my father went back to the Pacific Northwest, where he worked as a lumberman and became a member of the Industrial Workers of the World, or IWW. Fleeing the fierce persecution of that union, he went to Manila, where his military credentials led him to join the constabulary. While there he met and married my mother. Fortunately they moved to Chicago in 1931, so my younger brother and I were born in America and our family spent the war in safety, unlike many among my mother’s family, who we later learned spent it in Japanese prison camps.

The war drastically transformed the lives of everyone. The Great Depression’s twelve years of persistent widespread unemployment, peaking at 25 percent, were suddenly ended by the greatest government jobs program ever, World War II. Millions of fit young men went off to war. Mothers, wives, sisters, and daughters poured from homes into factories, building planes, tanks, and ships. The “arsenal of democracy” would eventually build ships faster than U-boats could sink them and fill the skies with planes on a scale never known before and not foreseen by the Axis powers. To support our troops and allies, gasoline, meat, butter, sugar, rubber, and much else were rationed. Lights were blacked out at night. Neighborhoods were patrolled by air raid wardens and warned of possible danger with sirens. Barrage balloons, which were tethered blimps, were anchored over critical regions such as oil refineries to deter attacks by hostile aircraft.

Our earlier trip to Southern California made it easier for our family to move there after the United States went to war; my parents hoped to find jobs in the expanding war industries. While we spent a few weeks with our friends in Laguna Beach, I hung out on the seashore watching artists paint, examining tide pools and marine life, and marveling at the heaps of shells from abalone (today an endangered species) in the front yards of so many of the beach cottages.

My parents soon bought a house in the small town of Lomita, located at the base of the Palos Verdes Peninsula. My mother was a riveter on the swing shift (4 P.M. until midnight) at Douglas Aircraft. Diligent and dexterous, she was nicknamed “Josie the riveter” by her co-workers after famous World War II posters of the bandanna-clad heroine. Meanwhile, my father worked the graveyard shift at Todd Shipyards in nearby San Pedro as a security guard. My parents were usually gone or sleeping, seldom seeing us or each other. They left my brother and me to raise ourselves. We served ourselves cereal and milk in the mornings. I stuffed peanut butter and grape jelly sandwiches into brown bags for our lunches.

I enrolled in the sixth grade at the Orange Street school. As I was a year and a half younger than my classmates and had also missed the first half of the school year, I was condemned to repeat the sixth grade the following year. My new school was at least two grade levels behind my school in Chicago. Faced with the horror of years of boredom, I protested. My parents met with the principal and as a result I was asked to take a supervised test one afternoon after school. Unaware of the purpose of the test and eager to play, after answering most of the 130 questions, I looked at the last twenty True–False questions and simply drew a line through the Trues so I could leave earlier. When I later learned that this was a test to see if I could avoid repeating grade six I was very upset. Yet after the test was scored, there was no further problem. Although an achievement test showing that I was at grade level would have been appropriate, I eventually found out that, oddly, I had instead been given the California Test of Mental Maturity, an IQ test. Years later, I learned why I was allowed to proceed to grade seven. It was the highest score they had ever seen, one that the high school I would enter could statistically expect from a student less than once in a hundred years.

Although they were behind scholastically, my California classmates were bigger than their Chicago contemporaries and much more athletic. As a smaller, thinner, brainy kid, I looked to be in for hard times. Luckily I hit it off with the “alpha dog” and helped him with his homework. He was the biggest, strongest kid in our class, as well as the best athlete. Under his protection, I safely finished the sixth grade. Decades later I especially appreciated the 1980 movie My Bodyguard.

I started grade seven at nearby Narbonne High School in the fall of 1943. Over the next six years I’d face the difficulties of coping with being an extreme misfit at a school where muscles were important and brains were not. Fortunately, my test score attracted a gifted and dedicated English teacher, Jack Chasson, who would become a mentor and act in loco parentis. Jack was twenty-seven then, with wavy brown hair and the classic good looks of a Greek god. He had a ready, warm smile and a way of saying something that boosted the self-esteem of everyone he met. With a background in English and psychology from UCLA, he was an idealistic new teacher who wanted his students not only to succeed but also to work for the social good while respecting the achievements of the past. He was my first great teacher, and we would remain friends for life.

As there was no spare money, my parents encouraged me to save some so I could go on one day to college. So in the fall of 1943, at age eleven, I signed up to become a newspaper boy. I rose every morning between two thirty and three and pedaled my used bike (one speed was all we had then) about two miles to an alley behind a strip of stores. I and the classmate who told me about the job, along with a few others, would throw ourselves onto a pile of baling wire left over from previous bundles of newspapers and talk. When the Los Angeles Examiner truck finally pulled up and dumped a dozen packets of a hundred newspapers each onto the ground, we each took one, folding the papers individually for throwing and stuffing them into canvas saddlebags carried on racks over the rear wheels of our bikes.

Because of the wartime blackout the lights were out and the darkness was complete except for the headlights of an occasional early-morning driver. As we were at the base of the Palos Verdes Peninsula just a few miles from the ocean, on many nights, especially in winter, a marine layer of overcast blotted out the moon and stars, intensifying the blackness and seeming to mute the tiny background sounds from nature. As I floated along the streets, a lonely ghost tossing papers from my bicycle, the one sound I heard was the soft cooing of pigeons. Ever since, the gentle voices of pigeons in the dark of early morning have evoked memories of those paperboy days.

Getting about five hours of sleep each night, I was perpetually tired. One morning, rolling down a steep thirty-foot hill near the end of my route, I fell asleep. I awoke in pain sprawled on a front lawn, papers scattered everywhere, my bike bent, and a mailbox, its four-by-four wooden post snapped off by my impact, askew on the grass nearby. I gathered my papers and managed to make the bike ridable. Aching and bruised, I finished my route and headed for school.

About a quarter mile beyond our backyard was the Lomita Flight Strip, a small municipal airport that had become a military base. Lockheed P-38 Lightning twin-engine fighter-bombers routinely buzzed our treetops as they landed. Since I was given a couple of extra papers for contingencies—a poor throw might land a paper on a roof or in a puddle—I took to biking over to the base to sell my extras for a few cents apiece. Before long I was invited to join the soldiers for breakfast in the mess. I stuffed the bounty of ham, eggs, toast, and pancakes into my skinny frame while the soldiers read the papers I sold them. They often gave them back, encouraging me to sell them again. But selling papers on the base was too good to last. After a few weeks, the base commander called me into his office one morning and explained, sadly and considerately, that because of wartime security I was no longer allowed entry. I missed the satisfying hot breakfasts, the camaraderie with the soldiers, and the extra income.

The base, which later became Torrance Airport, was dedicated as Zamperini Field for Louis Zamperini while he was a prisoner of war held by the Japanese. He grew up just a couple of miles from where I lived. The famous Torrance High School and Olympic track star, hero of Laura Hillenbrand’s bestseller Unbroken, had gone to war as a B-24 bombardier just a couple of months before my family arrived in adjacent Lomita.

Each newspaper route had about a hundred stops, for which we were paid about $25 per month. (Multiply by twelve to convert to 2014 dollars.) This was an astonishing amount of money for an eleven-year-old. However, our take-home pay was usually less, because we had to collect payment from our customers and any shortfall was deducted from what we received.

Since subscriptions were something like $1.25 or $1.50 per month, and there were deadbeats who moved away owing money, others who refused to pay, and some who paid only part because of missed papers, our pay was often reduced significantly. We collected after school in the afternoons and early evenings and often had to come back many times for people who weren’t home or didn’t have the money. I gave most of what I earned to my mother so she could buy savings stamps for me at the post office.

My booklets, when they reached $18.75, were exchanged for war bonds that would mature in several years for $25 each.

As my pile of bonds grew, college began to seem possible. But then my area supervisor gradually cut our paper-route wages so he could keep more for himself.

We understood when we signed on that if we continued to do our jobs well, we would get our full pay and eventually maybe get a small raise. Now the boss was taking part of our pay simply because he could get away with it. This was unfair but what could a bunch of kids do? Would King Arthur’s Knights of the Round Table tolerate this? No! We took action.

My friends and I went on strike against the Examiner. Our supervisor, an ever-perspiring obese man of about fifty, with thinning black hair and rumpled clothing, was forced to deliver newspapers to ten routes in his aging black Cadillac. After a few months of this, his car wore out, papers were not delivered, and he was replaced. Meanwhile, I had already signed on with the Los Angeles Daily News. Unlike the Examiner, it was an afternoon paper, so I could start catching up on my years of sleep deprivation. As I was delivering newspapers on the beautiful summer afternoon of Tuesday, August 14, 1945, people suddenly erupted from their houses, cheering wildly. World War II had ended. It was my thirteenth birthday and that was its only celebration.

Chapter 2: SCIENCE IS MY PLAYGROUND

In the 1940s, graduates of Narbonne High School were not expected to go on to college. The course requirements reflected this. In the seventh and eighth grades, though hungry for academic learning, I was required to take practical subjects, including wood shop, metal shop, drafting, typing, print shop, and electric shop.

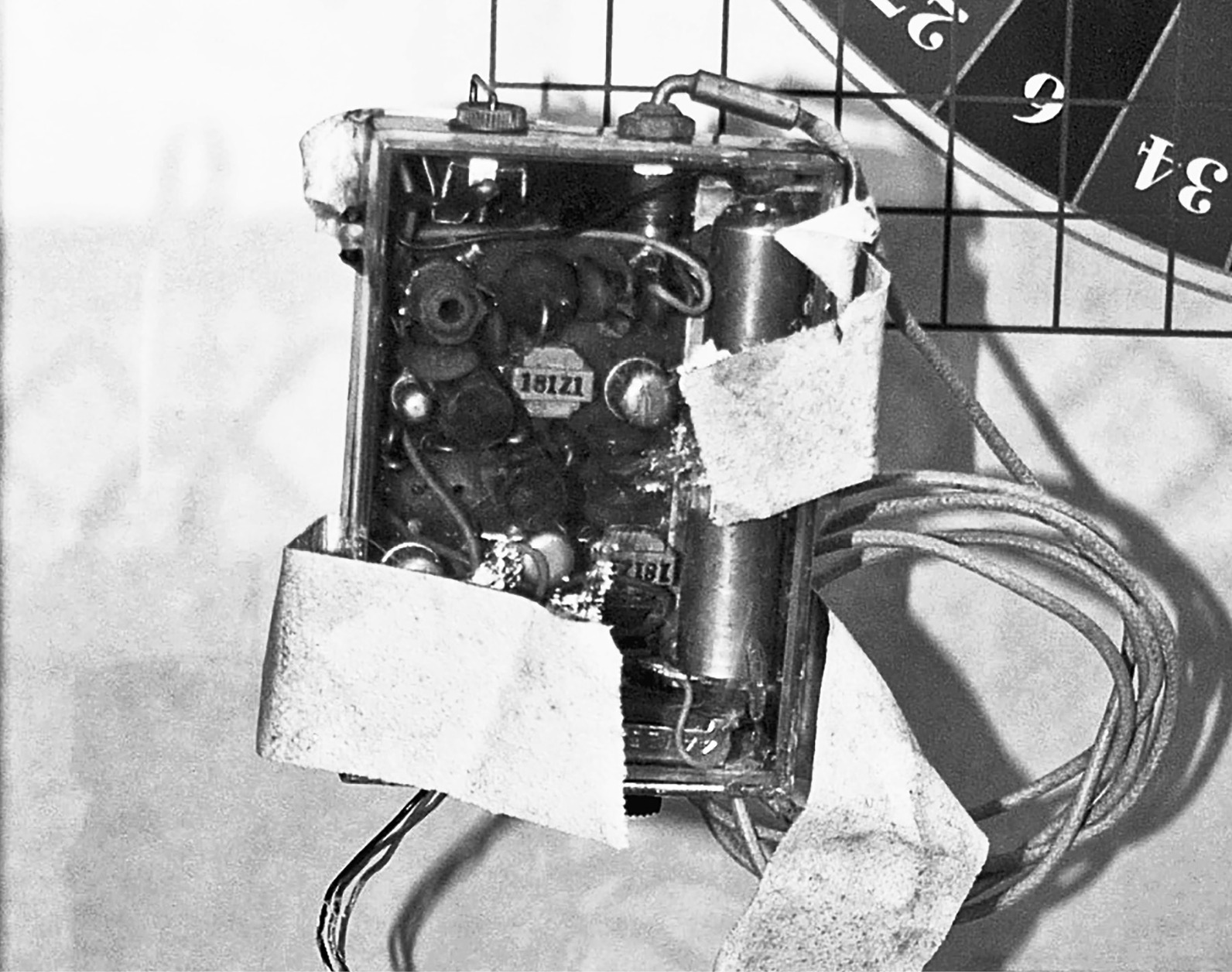

I wanted to pursue my interest in radio and electronics, which was sparked a couple of years earlier when I got one of the first simple radios, a crystal set. Made with a rectifier of galena, which was a shiny black crystal, a wire called a cat’s whisker for touching it in the right spot, and a coil of wire, it had earphones, an antenna wire, and a variable capacitor for tuning in different stations. Then like magic: Through my earphones came voices from the air!

The mechanical world of wheels, pulleys, pendulums, and gears was ordinary. I could see, touch, and watch it in action. But this new world was one of invisible waves that traveled through space. You had to figure out through experiments that it was actually there and then use logic to grasp how it worked.

It was no surprise that the required course that caught my interest was electric shop, where we each had to build a small operational electric motor. The teacher, Mr. Carver, was a universally liked, plump, avuncular man whom the other teachers called Bunny. I suspect that Jack Chasson had a word with him, for somehow he learned of my interest in electronics and told me about the world of amateur radio. At that time there already was a web of do-it-yourselfers who built or bought their own radio transmitters and receivers and talked night and day by voice or by Morse code all over the globe. It was in effect the first Internet. With less electricity than it takes to power a lightbulb, I could talk to people around the world. I asked Mr. Carver how I could be part of it. He told me that all I needed to do was pass what was then a rather difficult examination.

In those days the exam began with a series of written questions on radio theory. Next was a test on Morse code. That hurdle, since relaxed, was a major obstacle for most, and Mr. Carver warned me about the long, tedious hours of practice needed for proficiency. We had to copy code as well as send it with a telegrapher’s key at an error-free rate of thirteen words per minute. A word meant any five characters, so this was sixty-five characters a minute, or a little faster than one per second. I thought about it, then went out and bought a used “tape machine” for what was then the enormous sum of $15, almost three weeks’ income from delivering newspapers. The machine looked like a stubby black shoe box. The lid unclipped to reveal two spindles. It came with a collection of reels of pale-yellow paper tapes. These tapes had short holes for the “dots” and long holes for the “dashes.” You could look at them and read off the code for letters, and thus “read” the tape. The machine wound the tape from one spindle to the other, like the old reel-to-reel high-fidelity music tape players and the later cassette-tape machines. For power, you simply wound up the machine with a crank. It was simple, low-tech, and effective. When a hole moved past a spring contact, the circuit closed for the length of time its journey took. Long holes gave dashes, and short holes gave dots. The box was hooked to a simple device, an “audio oscillator,” that emitted a fixed tone such as the piano middle C. As the tape ran, the contact in the box switched the oscillator alternately on and off, sending dots and dashes.
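The arithmetic behind the exam rate, and the way the tape turned holes into sound, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the hole lengths and the threshold separating short holes from long ones are hypothetical, since the actual dimensions of the tapes are not recorded here.

```python
def characters_per_second(words_per_minute, chars_per_word=5):
    """Convert a code speed in words per minute to characters per second.

    A "word" is counted as five characters, as on the exam.
    """
    return words_per_minute * chars_per_word / 60


def read_tape(hole_lengths, threshold):
    """Turn a sequence of hole lengths into dots and dashes.

    A hole longer than `threshold` keeps the contact closed longer,
    which sounds as a dash; a shorter hole sounds as a dot.
    The units and the threshold are illustrative assumptions.
    """
    return "".join("-" if length > threshold else "." for length in hole_lengths)


# The exam rate: thirteen words a minute is sixty-five characters a minute.
print(characters_per_second(13))

# A hypothetical stretch of tape: short, long, short.
print(read_tape([1, 3, 1], threshold=2))
```

Running the tape faster shortens every contact closure in the same proportion, so the pattern of dots and dashes is unchanged while the words-per-minute rate goes up.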

The great thing about the machine as a teaching aid was that its speed was adjustable, from the slow rate of one word per minute up to fast rates like twenty-five words per minute. My plan was to understand every tape at a slow rate, then speed the tapes up slightly and master them again. To motivate our class and give us a benchmark, Mr. Carver showed us a chart of the rate of progress of World War II army trainees in radio code. These students were at least a few years older than we were and under wartime pressure to learn quickly. Previous classes found it a difficult standard to match. So did our class—but my plan worked for me. I drew a graph of the hours I spent versus my speed and found that using my method I learned four times as fast per hour spent as did the army trainees.

I brought my code speed up to twenty-one words a minute to give myself a margin of safety. The American Radio Relay League, an organization of amateurs, provided guidance on preparing for the theory part of the exam. Feeling ready, I signed up for the test and, one summer Saturday morning, took the twenty-mile bus ride to a federal building in downtown Los Angeles. A twelve-year-old in an old flannel shirt and worn jeans, I nervously joined a group of about fifty adults. We sat at long wooden tables on hard chairs in a room with bare painted walls. Closely supervised and monitored, we worked for two hours in library-like silence, broken only by the sounds of Morse code during that part of the exam. On the bus ride home, as I ate my bag lunch, I speculated that I probably had passed but, not knowing how harshly they graded, couldn’t be sure.

For the next few weeks I expectantly checked the mail until, a few days after the war ended, I got an official government envelope with the results. I was now amateur radio operator W6VVM. I was one of the youngest amateurs, or “hams,” the age record at the time being eleven years and some months. There were then about two hundred thousand amateurs in the United States and a comparable number in the rest of the world. I was thrilled to know that I could talk to people in this web who might be anywhere on earth.

Meanwhile American troops had liberated the survivors of my mother’s family from a Japanese prison camp in the Philippines. Now my grandmother, my mother’s youngest brother, and two of her sisters and their families came over from the Philippines to stay with us. They told us that my aunt Nona and her husband had been beheaded in front of their children by the Japanese and that my grandfather had died painfully of prostate cancer in the camp just a week before liberation. My uncle Sam, a pre-med student before the war, told us how he could do nothing but offer comfort to my dying grandfather, denied both medicine and surgical facilities.

To house everyone, my father, in between graveyard shifts at work, built out the attic, adding two bedrooms and a stairway. I shared one bedroom with my brother, James (Jimmy); the other was Sam’s. Having ten extra residents packed into the house along with our family of four brought difficulties in addition to overcrowding and the economic burden of supporting them. One aunt, with her husband and three-year-old son, had contracted tuberculosis while they were prisoners of the Japanese. To protect the rest of us from catching the disease, they ate from a separate set of tableware, with possible severe penalties for us if they made a mistake. Of course, we shared the same air, so we still risked infection from their coughs and sneezes. Decades later, my first lung X-rays showed a small lesion, which remained stable. My doctor thought it came from my earlier tuberculosis exposure.

The other aunt staying with us brought her spouse and three children. The husband, a fascistic martinet who abused his compliant wife, required that she and the children obey his every command. It may have been this, as well as everything the family experienced at the hands of the Japanese, that turned the oldest boy into what I viewed as a sociopath. He told my brother that he wanted to kill me. I had no clue then or later as to why. Though Frank, as I’ll call him, was older and larger, I had no intention of backing down if we had a confrontation. As a precaution, I kept with me a squirt bottle of full-strength household ammonia, the most benign of my array of chemical weapons. We never met again after his family moved out, but our relatives told me he later went to war in Korea. They said he enjoyed killing so much that he reenlisted. Another first cousin who saw him years later with his seven-year-old son was shocked to see the little fellow ordered about in military fashion. When Frank died in 2012, his obituary mentioned that he had become a well-known practitioner and teacher of martial arts.

Seeing what World War II had done to my relatives, and how World War I plus the Great Depression had limited my father’s future, I determined to do better for myself and the children I hoped to have.

Despite the horrors my relatives suffered it never occurred to me, then or later, to blame or discriminate against Japanese Americans. I only became aware of the US government’s treatment of them after they were interned in isolated special camps, their land and homes expropriated and sold by the authorities, their children disappearing from my classes. Jack Chasson educated my close friends Dick Clair and Jim Hart and me, along with other students and faculty, about this injustice. After the war, when some of the imprisoned students returned to school, Jack told me about one whose IQ score was 71, ranking him in the lowest 3 percent. But Jack, who had a degree in psychology, said he could see that this student was unusually smart, attributing his low score to difficulty understanding English. Would I tutor him during lunch hours? Of course. He was retested after a semester and scored 140, extremely gifted, in the upper 1 percent, and well above the threshold for the IQ society Mensa.

—

My focus on science developed rapidly as I used some of my paper-route money for electronic parts to build ham radio equipment, to buy chemicals by mail and from the local druggist, and to purchase lenses to build a cheap telescope from cardboard tubes.

Then, in November 1946, as a high school sophomore, I saw an advertisement by the Edmund Scientific Company for war-surplus weather balloons. Ever since I had been building model airplanes I had been thinking about ways to achieve my fantasy of a personal flying machine. One of my ideas was to construct the tiniest possible airplane, small, compact, and yet able to carry me. I also thought about building a little blimp, a one-person helicopter, and its variant, the flying platform. My plan was to build scale models as an easy and less costly way both to prove feasibility and to solve some of the practical problems. All this was beyond my financial capability, but flying with balloons was not. I visualized how to complete every step needed to succeed.

Imagining myself drifting up into the sky, I ordered ten balloons, each eight feet tall, for a total cost of $29.95, which is like $360 today. I knew, from the chemistry I was teaching myself, that each eight-footer, filled with hydrogen, would lift about fourteen pounds. Since I weighed 95 pounds, eight of the balloons, with a total lifting power of 112 pounds, should carry me plus a harness and ballast. I didn’t know how to get the hydrogen I needed at a price I could afford, so I turned to the family stove: It was powered by natural gas, whose main constituent was methane, with a little less than half the lifting power of hydrogen. If my tests succeeded I could always buy more balloons. I imagined myself tethered to sixteen or more eight-foot balloons, slowly ascending over my house and looking out first over my neighborhood and then miles in all directions over Southern California. I planned to carry bags of sand to use as ballast. When I wanted to go higher, I’d just lighten up by spilling a little sand, which wouldn’t injure anyone below. If I wanted to go lower or land, I had designed a valve system for each balloon that would let me release its gas in a controlled manner.
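The lift budget in this plan is simple arithmetic, and a short Python sketch makes the margins explicit. The fourteen pounds per balloon and the 95-pound rider come from the text. The methane fraction of 0.45 is an assumed stand-in for "a little less than half," not a measured value, and the function names are hypothetical.

```python
import math


def total_lift(balloons, lift_per_balloon_lb):
    """Combined lifting power of a cluster of identical balloons."""
    return balloons * lift_per_balloon_lb


def balloons_needed(load_lb, lift_per_balloon_lb):
    """Smallest whole number of balloons whose lift covers the load."""
    return math.ceil(load_lb / lift_per_balloon_lb)


HYDROGEN_LIFT_LB = 14    # per eight-foot balloon, from the text
RIDER_LB = 95            # from the text
METHANE_FRACTION = 0.45  # assumption: "a little less than half" of hydrogen

# Eight hydrogen balloons: what is left over for harness and ballast.
print(total_lift(8, HYDROGEN_LIFT_LB) - RIDER_LB)

# Balloons needed to lift the rider alone on stove gas.
print(balloons_needed(RIDER_LB, HYDROGEN_LIFT_LB * METHANE_FRACTION))
```

Under that assumed fraction the rider alone needs sixteen gas-filled balloons, which fits the picture of "sixteen or more" once a harness and sand are added.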

After a wait that seemed endless, but was only a couple of weeks, the balloons arrived and I set to work. One quiet Saturday when my family wasn’t around, I connected the gas line for the stove to my balloon and blew it up to about four feet in diameter, which was the largest size I could squash through the kitchen door to get it outside. As predicted, it had a lifting power of nearly one pound. I went to an open field and sent the balloon up to about fifteen hundred feet tethered to strong kite string. Everything was working as expected, and I enjoyed watching a small plane from the nearby local airport “buzz” my balloon. About forty-five minutes later the plane returned, flew near my balloon, and then the balloon suddenly popped. The plane appeared to have shot it down, although I had no idea how or why.
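The "nearly one pound" figure is roughly what scaling the earlier numbers would suggest. The sketch below assumes that lift scales with the cube of the diameter (that is, with volume), that the fourteen-pound figure applies to a full eight-foot diameter, and that methane gives 0.45 to 0.5 of hydrogen's lift. None of these assumptions is stated in the text, and the function name is hypothetical.

```python
def scaled_lift(reference_lift_lb: float,
                reference_diameter_ft: float,
                diameter_ft: float,
                gas_ratio: float = 1.0) -> float:
    """Estimate lift at a new diameter, assuming lift is proportional to volume.

    gas_ratio is the lifting power of the fill gas relative to the reference gas.
    """
    volume_ratio = (diameter_ft / reference_diameter_ft) ** 3
    return reference_lift_lb * volume_ratio * gas_ratio


# Hydrogen at four feet: one eighth of the eight-foot volume.
hydrogen_four_foot = scaled_lift(14.0, 8.0, 4.0)

# Methane at four feet, for the assumed range of ratios.
methane_low = scaled_lift(14.0, 8.0, 4.0, gas_ratio=0.45)
methane_high = scaled_lift(14.0, 8.0, 4.0, gas_ratio=0.5)

print(f"Hydrogen, 4 ft: {hydrogen_four_foot:.2f} lb")
print(f"Methane, 4 ft: {methane_low:.2f} to {methane_high:.2f} lb")
```

Under these assumptions the estimate lands a little under one pound, in line with what the test balloon showed.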

This gave me pause. I pictured myself tied to a flock of eight-foot balloons, making an irresistible target, and being shot down by the local kids who owned air rifles (then known as BB guns). Too risky, I decided. However, that original balloon ad must have been wonderfully successful, for I saw it many times down through the years and it was still running under the banner PROFESSIONAL WEATHER BALLOONS fifty-four years later, with much the same wording. Almost forty years after my experiment, “Lawnchair Larry” tied a cluster of four-foot helium-filled balloons to a chair and ascended several thousand feet.

Disappointed, I wondered what else I could do with the balloons. The first idea came one day when my father brought home some wartime parachute flares from surplus lifeboats. They came in metal canisters that looked like shell casings, and could be fired high into the sky by a special gun. The blazing flare illuminated a large area as it slowly drifted down on its parachute. One night I attached a homemade slow-burning fuse to one of these flares. Then I hung the flare with its fuse on one of my giant balloons and went to a quiet intersection near our house. I lit the fuse and sent the gas-filled balloon up on several hundred feet of cord. I loosely noosed the cord around a telephone pole so, as the balloon rose, the noose slid up the pole, causing the apparatus to be tethered from the top, well out of reach. Then I backed off a block or so and waited. In a few minutes the sky lit up with a dazzling brilliance. A crowd gathered and police cars converged on the telephone pole. A few minutes later the light in the sky went out. The police cars left, the crowd dispersed, and all was as before. A second slow-burning fuse then severed the cord and balloon, so the evidence leapt into the sky, traveling off to I know not where.

Pranks and experiments were part of learning science my way. As I came to understand the theory, I tested it by doing experiments, many of which were fun things I invented. I was learning to work things out for myself, not limited by prompting from teachers, parents, or the school curriculum. I relished the power of pure thought combined with the logic and predictability of science. I loved visualizing an idea, and then making it happen.

In the upstairs bedroom I shared with my brother, I set up a two-meter (wavelength) amateur radio station, complete with a rotating directional beam antenna in the area not filled with beds. I had also created a laboratory space at the far end of a narrow laundry room attached to the rear of the garage. This was where I did many of my investigations in chemistry, some of which went awry. For instance, having read that hydrogen gas would burn in air with a pale-bluish flame, I decided to see for myself. To generate the gas, I poured hydrochloric acid onto zinc metal in a glass flask, sealing it with a rubber stopper that had a tube through it from which the gas would be emitted. I hoped there would be so much hydrogen produced that it would “wash” all the air out of the system before I attempted to ignite the hydrogen coming out the end of the tube. Otherwise, boom. With safety goggles and protective clothing, I was just attempting to ignite the hydrogen as my brother burst in. Unable to stop my hand with the match, I screamed “DOWN” as he ducked and the apparatus blew apart. After this, I painted a white “no trespassing” line across the floor to mark off my zone, about five feet wide and ten feet long, lined with shelves I built and stocked with chemicals and glassware. The frequent fumes and explosions ensured voluntary compliance.

I had plenty of other enthusiasms. For instance, at thirteen I was seriously exploring explosives. My experiments had begun a couple of years earlier when I found a recipe for gunpowder in an old Funk and Wagnalls encyclopedia. The ingredients were a mixture of potassium nitrate (commonly known as saltpeter), charcoal, and sulfur (which we were told to put in our dog’s food to make his coat shiny). A batch ignited accidentally while I was working on it, burning the skin of my entire left hand to a gray-black, brittle crust. My father soaked my hand in cold tea, after which I wore a tea-soaked bandage for a week. The healing liquid worked: When we removed the bandage and the crusted skin came off, I was overjoyed to see total recovery.

With my well-stocked homemade chemistry lab as a base, I made large quantities of gunpowder and used it either to launch homemade rockets or to shoot model rocket cars down the street in front of my house. The cars had balsa-wood bodies, lightweight wheels from a hobby shop, and a “motor” that consisted of a carbon dioxide (CO2) cartridge like the ones used nowadays to carbonate drinks or power air rifles. These cartridges were being discarded as war surplus, so my father brought them home from the shipyard. Only I didn’t use the CO2 to propel my cars. I drilled out the seal at the end of the cartridge, to the rush of escaping gas. A cold white powder of solid CO2 would collect as the gas expanded and cooled. Once the cartridge was empty, I filled it with my homemade gunpowder, inserted a fuse, and tucked my new supermotor into a slot in the back of the tiny vehicle. As the motors sometimes exploded, casting shrapnel, I wore safety goggles and kept myself and the neighborhood kids well back. When it all worked, the cars were astonishingly fast. One moment they would be there, then they weren’t, reappearing after a second or so a couple of blocks away. Noting the motors’ tendency to blow up, I built and tested bigger versions designed to explode, bombs made from short lengths of steel plumbing pipes, which I used to blow craters in cliff faces at the nearby undeveloped Palos Verdes Peninsula.

The next challenge was guncotton, or nitrocellulose. It’s the basis for the so-called smokeless powder. The encyclopedia again gave me the recipe: Slowly add one part of cold concentrated sulfuric acid to two parts of cold concentrated nitric acid. Whenever the mixture becomes warm, chill everything before continuing. Into the brew I added ordinary surgical cotton, again chilling the mixture as it became warm. Then I let it stew in our refrigerator with a DON’T TOUCH sign attached. By now my family knew such signs meant serious business, so I could rely on them to keep away from my projects. After twenty-four hours I removed the cotton, rinsing and drying it. I verified that it was no longer ordinary cotton by dissolving some in acetone. I continued to make more guncotton in my refrigerator “factory” and began a series of experiments. Guncotton explodes, but not easily, and typically requires a detonator. I didn’t have one, so I put a small wad on the sidewalk and whacked it with a sledgehammer. There was a whum-m-p and the sledgehammer leapt up and back over my shoulder while I hung on. The sidewalk now sported a palm-sized crater. After blowing a few more craters in the sidewalk, I used guncotton in rockets and pipe bombs, where it was more predictable and more effective than gunpowder.

Finally I felt ready to try “the big one,” nitroglycerine. The recipe and procedure followed that for guncotton, with just one seemingly minor change, the substitution of ordinary glycerine for the cotton. The result was a pale, almost colorless liquid that floated on top, which I removed carefully, since it was a violent and treacherous explosive that had killed many people in the past.

One quiet Saturday I bundled myself up, put on a safety visor, and moistened the tip of a glass tube with nitro. Using far less than a drop, surely a safe amount, I heated it over the gas flame and suddenly there came a CRACK!—with a duration much shorter than and violently different from all my other slower-acting explosives. Tiny bits of glass were embedded in my hand and arm, blood seeping from the myriad holes. I picked the bits out with a needle over the next few days as I found them. Next I put some nitro on the sidewalk and used the sledgehammer to blow another crater. But nitroglycerine’s dangerous instability worried me and I discarded the rest of my stockpile.

Where did a fourteen-year-old get such powerful and dangerous chemicals? From my local pharmacist, who sold them to me privately at a nice markup. My parents worked long hours and when they were home they were either seeing to the needs of the ten refugee relatives who were staying with us, taking care of household logistics, or falling into an exhausted sleep. My brother and I were left to manage on our own. I didn’t volunteer any information about my experiments. If they had realized the full extent of what I was up to, they would have shut it down.

By the time I took chemistry in the eleventh grade, I had been doing experiments for a couple of years. Enjoying the theory as well as having fun, I read a high school chemistry book from cover to cover. I fell asleep at night mentally reviewing the material, a habit that proved, both then and later, remarkably effective for understanding and permanently remembering what I had learned. Our teacher was Mr. Stump, a short bespectacled man in his fifties. He loved his subject and wanted us to learn it properly. Moreover, he had always longed to produce a student good enough to be one of the fifteen winners in the annual Southern California American Chemical Society high school chemistry contest. This was a three-hour examination given in the spring, and typically attracted about two hundred of the top high school chemistry students from all over Southern California. But after twenty or so years at our academically impoverished working-class school—that year it ranked thirty-first out of the thirty-two schools in the Los Angeles district on standardized achievement tests—he had given up hope of ever realizing his dream.

Among the thirty or so students who showed up for class, Mr. Stump saw a thin younger student with dark curly hair who volunteered to answer every question. He had heard about this kid before from other teachers: the smart ones who enjoyed him and the dull ones whom he filled with dread. Sure, the kid might have picked up a little chemistry and could answer the easy questions during the first couple of weeks, but Mr. Stump had seen others start strong and quickly fade. He warned us about the first exam and how hard it was going to be. When he returned our tests, the other students’ scores ranged from zero to thirty-three out of one hundred. My score was ninety-nine. I had his attention now.

I went to talk to him about the chemistry contest. Mr. Stump had saved every old exam for the last twenty years. I wanted to borrow them to study for the contest. He was reluctant to give them up and pointed out the huge odds against me: I was taking the exam in my junior year, whereas most others waited until they were seniors. I’d skipped a grade, which meant I’d be a fifteen-year-old up against a field of seventeen- and eighteen-year-olds. And I had only five months to prepare. Besides, our school’s facilities were inferior and I had no peers to study with or to push me to a higher level. Few from our school had ever been audacious enough to enter and none of them had placed. “Why not wait a year?” suggested Mr. Stump.

But I was determined. The winners usually got scholarships to the California college or university of their choice. An academic life was becoming my dream. I liked all the science experiments I was doing and the knowledge they led to. If I could have a career continuing this kind of playing, I would be very happy. And the way to have that kind of life was by joining the academic world where they had the laboratories, the kinds of experiments and projects I enjoyed, and maybe the chance to work with other people like me. But I couldn’t afford the education I needed for an advanced degree. Here was a way.

After Mr. Stump talked with English teacher Jack Chasson, he agreed to lend me ten of the tests from alternate years, from which I could determine their range and difficulty, and any trend toward changes that had occurred over the years. Mr. Stump held back the other ten so he could check my level of preparedness.

Alongside my high school chemistry book, I worked through two college chemistry texts. When a concept wasn’t clear in one of them, it was generally clarified in another. With my background in experiments and my previous reading, the subject sang to me. Every night I spent an hour on theory, then fell asleep mentally reviewing the periodic table, valences, allowable chemical reactions, Gay-Lussac’s law, Charles’s law, Avogadro’s number, and so on. I also continued my experiments—and my pranks.

One great trick began when I read about a powerful dye called aniline red. It turned water a deep blood color in the astonishing ratio of six million grams of water for each gram of dye! I obtained twenty grams of the dye for experiments.

My homemade chemistry lab, as I’ve mentioned, was located in the laundry room tacked onto the back of our garage, which in turn opened onto our backyard. And in the middle of that yard was our kidney-shaped goldfish pool, about ten feet by five feet and a foot deep. That’s a little less than one and a half cubic meters. Now, one gram of this dye would color six cubic meters of water a deep red, so a mere pinch, one-quarter of a gram of dye, ought to do the job on the pool.
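The pool estimate can be reproduced with a few lines. The sketch treats the kidney-shaped pool as a ten-by-five-by-one-foot box, which is an assumption that slightly overstates the true volume, and uses the six-million-to-one ratio quoted earlier. The function and constant names are hypothetical.

```python
CUBIC_FEET_TO_CUBIC_METERS = 0.0283168
GRAMS_WATER_PER_CUBIC_METER = 1_000_000   # water is about 1 gram per cubic centimeter
GRAMS_WATER_PER_GRAM_DYE = 6_000_000      # dye ratio quoted in the text


def pool_volume_m3(length_ft: float, width_ft: float, depth_ft: float) -> float:
    """Volume of a box-shaped pool in cubic meters (assumes a rectangular outline)."""
    return length_ft * width_ft * depth_ft * CUBIC_FEET_TO_CUBIC_METERS


def dye_needed_g(volume_m3: float) -> float:
    """Grams of dye needed to color the given volume at the quoted ratio."""
    return volume_m3 * GRAMS_WATER_PER_CUBIC_METER / GRAMS_WATER_PER_GRAM_DYE


volume = pool_volume_m3(10.0, 5.0, 1.0)
dye = dye_needed_g(volume)

print(f"Pool volume: about {volume:.2f} cubic meters")
print(f"Dye needed: about {dye:.2f} grams")
```

The result comes out just under a quarter of a gram, matching the "mere pinch" in the text.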