Think Again

Prologue

After a bumpy flight, fifteen men dropped from the Montana sky. They weren’t skydivers. They were smokejumpers: elite wildland firefighters parachuting in to extinguish a forest fire started by lightning the day before. In a matter of minutes, they would be racing for their lives.

The smokejumpers landed near the top of Mann Gulch late on a scorching August afternoon in 1949. With the fire visible across the gulch, they made their way down the slope toward the Missouri River. Their plan was to dig a line in the soil around the fire to contain it and direct it toward an area where there wasn’t much to burn.

After hiking about a quarter mile, the foreman, Wagner Dodge, saw that the fire had leapt across the gulch and was heading straight at them. The flames stretched as high as 30 feet in the air. Soon the fire would be blazing fast enough to cross the length of two football fields in less than a minute.

By 5:45 p.m. it was clear that even containing the fire was off the table. Realizing it was time to shift gears from fight to flight, Dodge immediately turned the crew around to run back up the slope. The smokejumpers had to bolt up an extremely steep incline, through knee-high grass on rocky terrain. Over the next eight minutes they traveled nearly 500 yards, leaving the top of the ridge less than 200 yards away.

With safety in sight but the fire swiftly advancing, Dodge did something that baffled his crew. Instead of trying to outrun the fire, he stopped and bent over. He took out a matchbook, started lighting matches, and threw them into the grass. “We thought he must have gone nuts,” one later recalled. “With the fire almost on our back, what the hell is the boss doing lighting another fire in front of us?” He thought to himself: That bastard Dodge is trying to burn me to death. It’s no surprise that the crew didn’t follow Dodge when he waved his arms toward his fire and yelled, “Up! Up this way!”

What the smokejumpers didn’t realize was that Dodge had devised a survival strategy: he was building an escape fire. By burning the grass ahead of him, he cleared the area of fuel for the wildfire to feed on. He then poured water from his canteen onto his handkerchief, covered his mouth with it, and lay facedown in the charred area for the next fifteen minutes. As the wildfire raged directly above him, he survived in the oxygen close to the ground.

Tragically, twelve of the smokejumpers perished. A pocket watch belonging to one of the victims was later found with the hands melted at 5:56 p.m.

Why did only three of the smokejumpers survive? Physical fitness might have been a factor; the other two survivors managed to outrun the fire and reach the crest of the ridge. But Dodge prevailed because of his mental fitness.

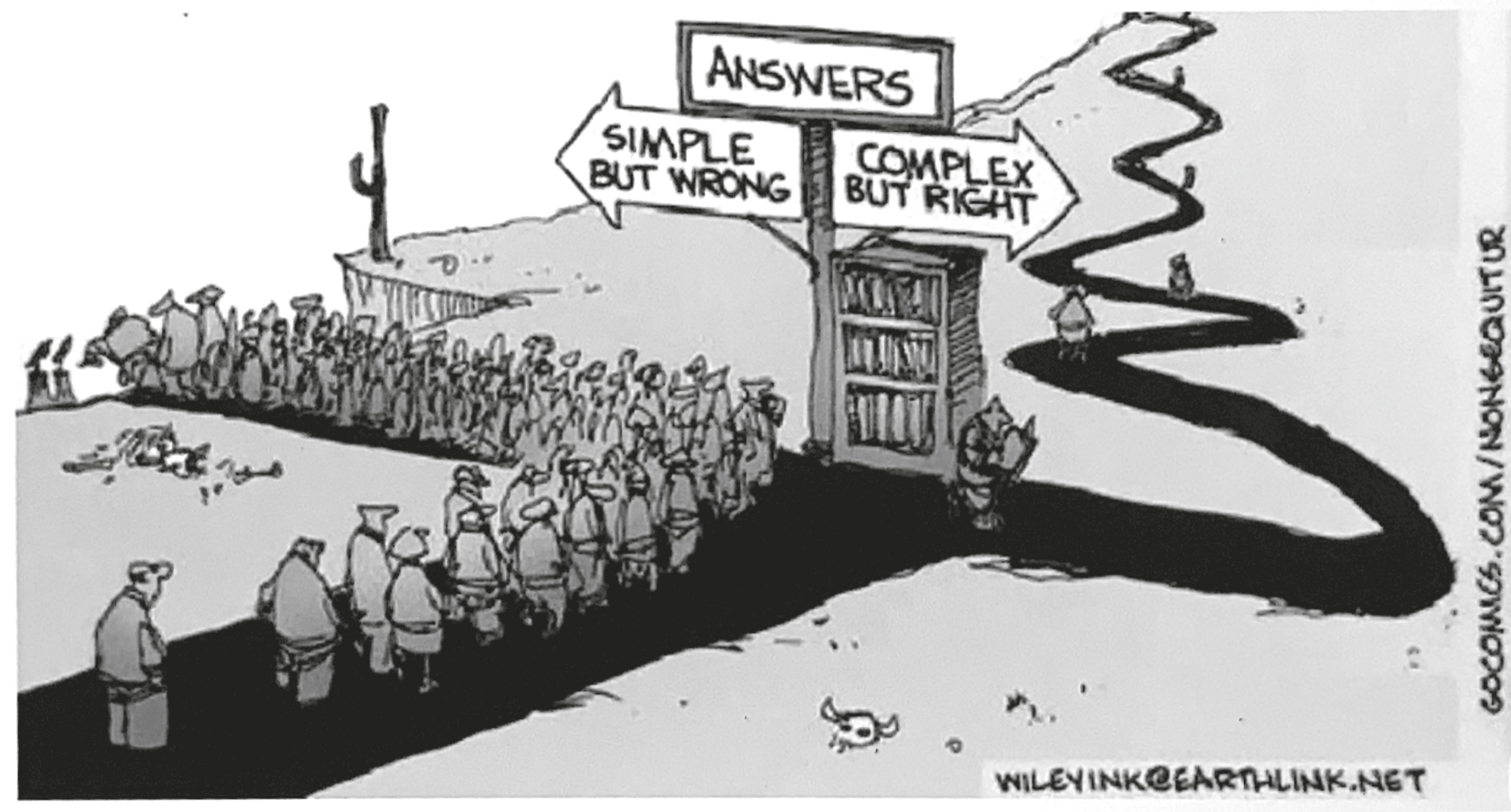

When people reflect on what it takes to be mentally fit, the first idea that comes to mind is usually intelligence. The smarter you are, the more complex the problems you can solve—and the faster you can solve them. Intelligence is traditionally viewed as the ability to think and learn. Yet in a turbulent world, there’s another set of cognitive skills that might matter more: the ability to rethink and unlearn.

Imagine that you’ve just finished taking a multiple-choice test, and you start to second-guess one of your answers. You have some extra time—should you stick with your first instinct or change it?

About three quarters of students are convinced that revising their answer will hurt their score. Kaplan, the big test-prep company, once warned students to “exercise great caution if you decide to change an answer. Experience indicates that many students who change answers change to the wrong answer.”

With all due respect to the lessons of experience, I prefer the rigor of evidence. When a trio of psychologists conducted a comprehensive review of thirty-three studies, they found that in every one, the majority of answer revisions were from wrong to right. This phenomenon is known as the first-instinct fallacy.

In one demonstration, psychologists counted eraser marks on the exams of more than 1,500 students in Illinois. Only a quarter of the changes were from right to wrong, while half were from wrong to right. I’ve seen it in my own classroom year after year: my students’ final exams have surprisingly few eraser marks, but those who do rethink their first answers rather than staying anchored to them end up improving their scores.

Of course, it’s possible that second answers aren’t inherently better; they may be better only because students are generally so reluctant to switch that they change an answer only when they’re fairly confident. But recent studies point to a different explanation: it’s not so much changing your answer that improves your score as considering whether you should change it.

We don’t just hesitate to rethink our answers. We hesitate at the very idea of rethinking. Take an experiment where hundreds of college students were randomly assigned to learn about the first-instinct fallacy. The speaker taught them about the value of changing their minds and gave them advice about when it made sense to do so. On their next two tests, they still weren’t any more likely to revise their answers.

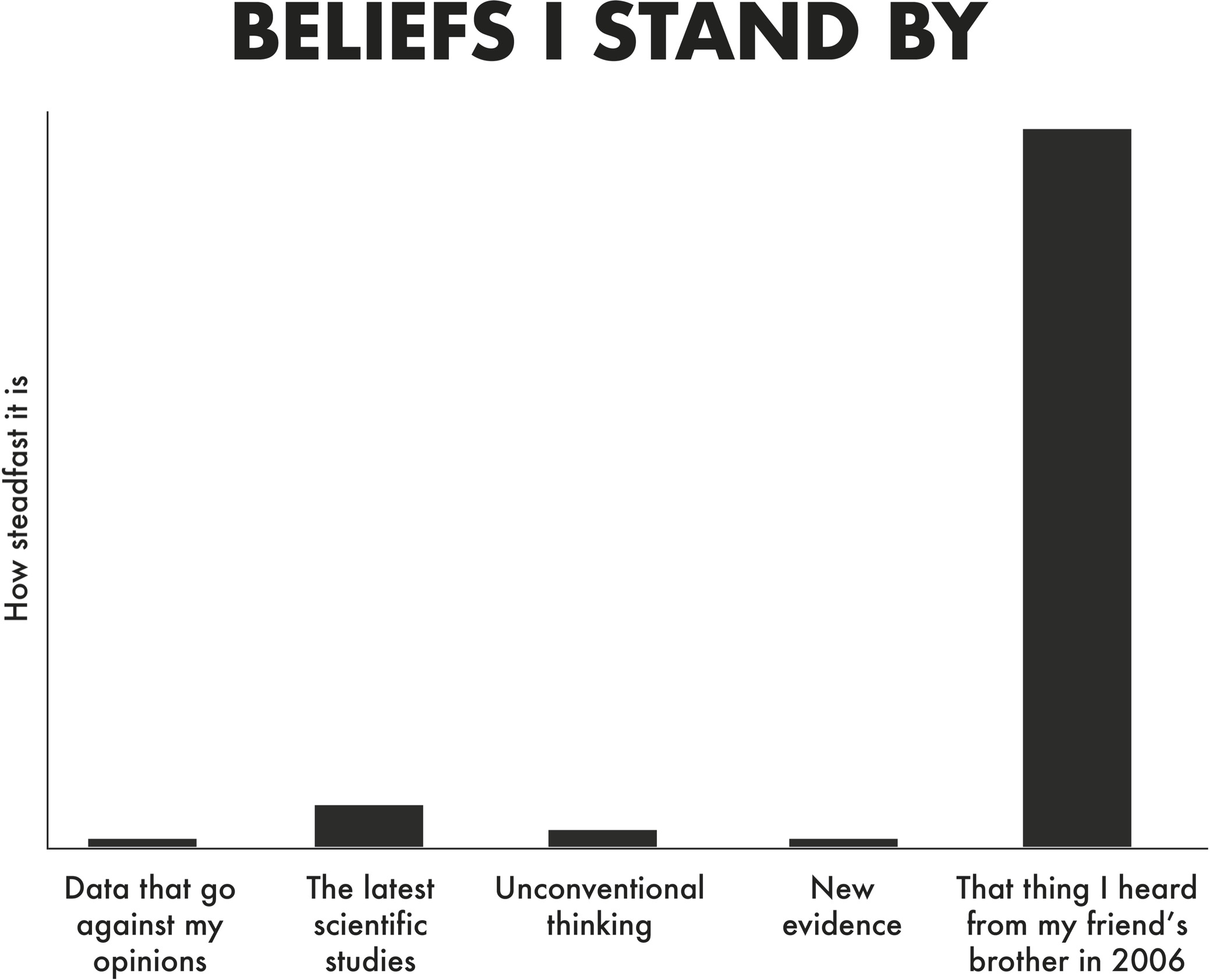

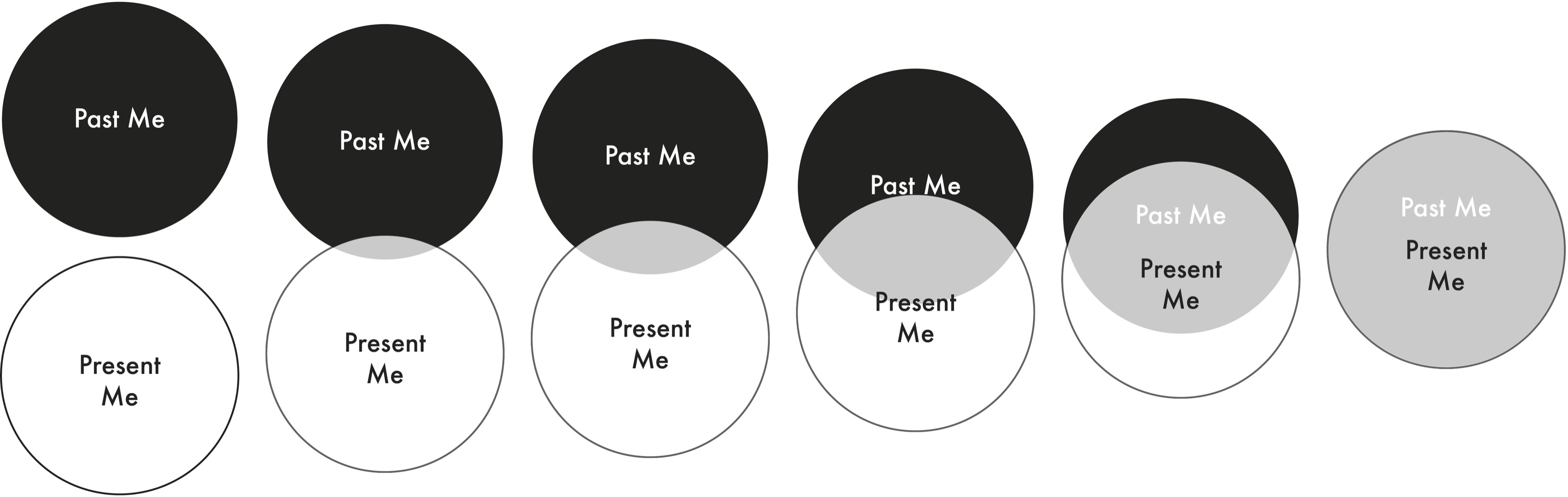

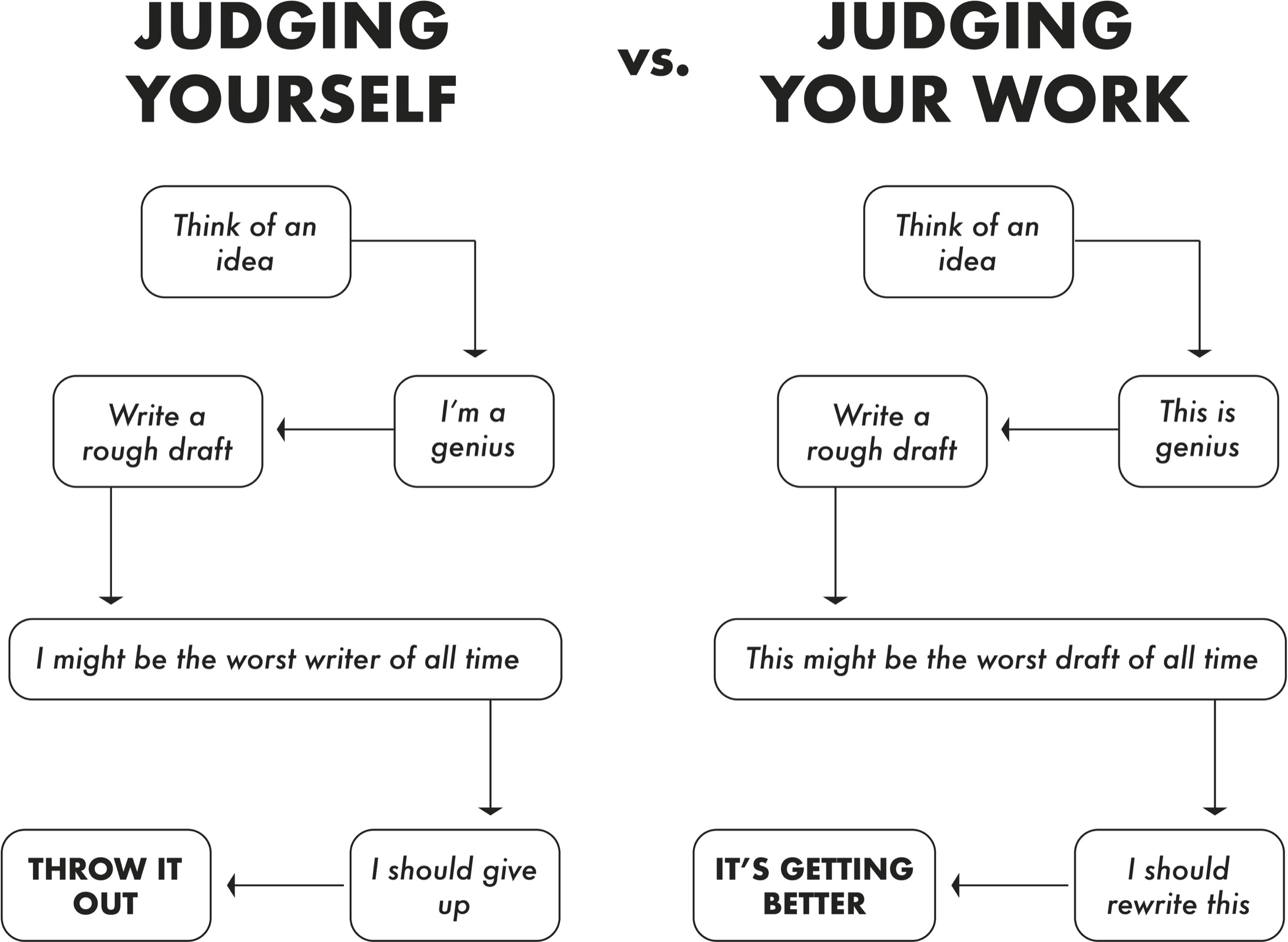

Part of the problem is cognitive laziness. Some psychologists point out that we’re mental misers: we often prefer the ease of hanging on to old views over the difficulty of grappling with new ones. Yet there are also deeper forces behind our resistance to rethinking. Questioning ourselves makes the world more unpredictable. It requires us to admit that the facts may have changed, that what was once right may now be wrong. Reconsidering something we believe deeply can threaten our identities, making it feel as if we’re losing a part of ourselves.

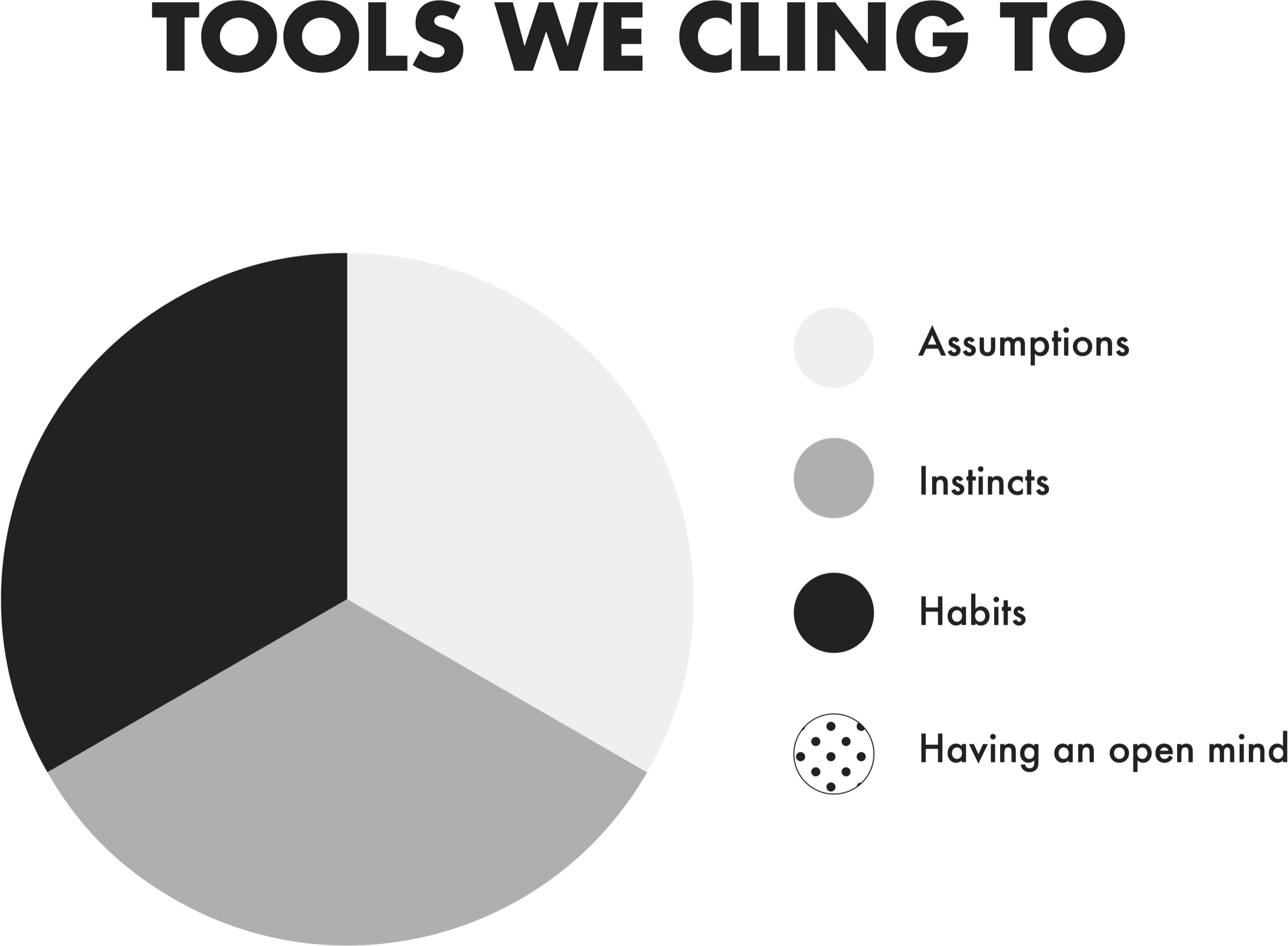

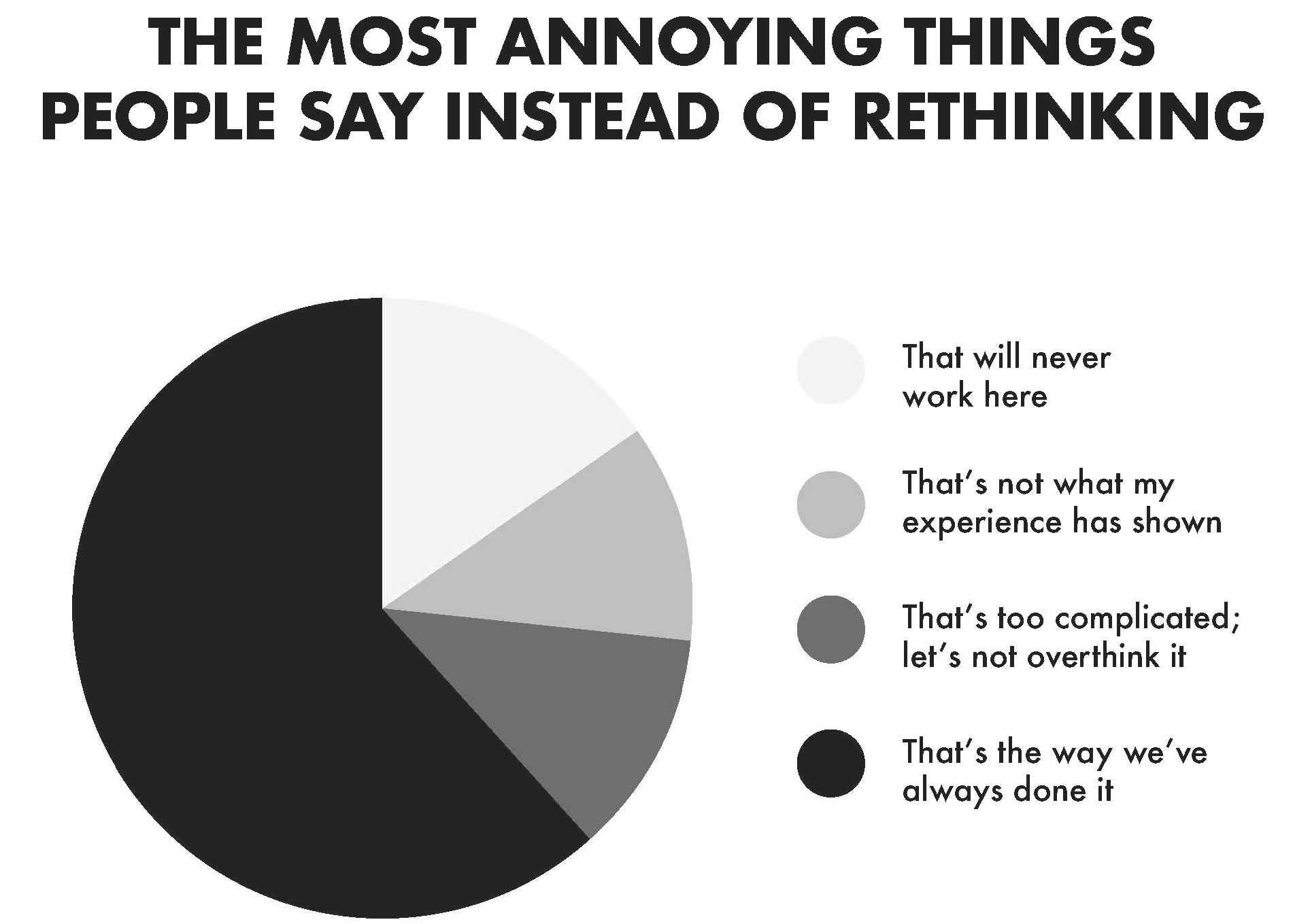

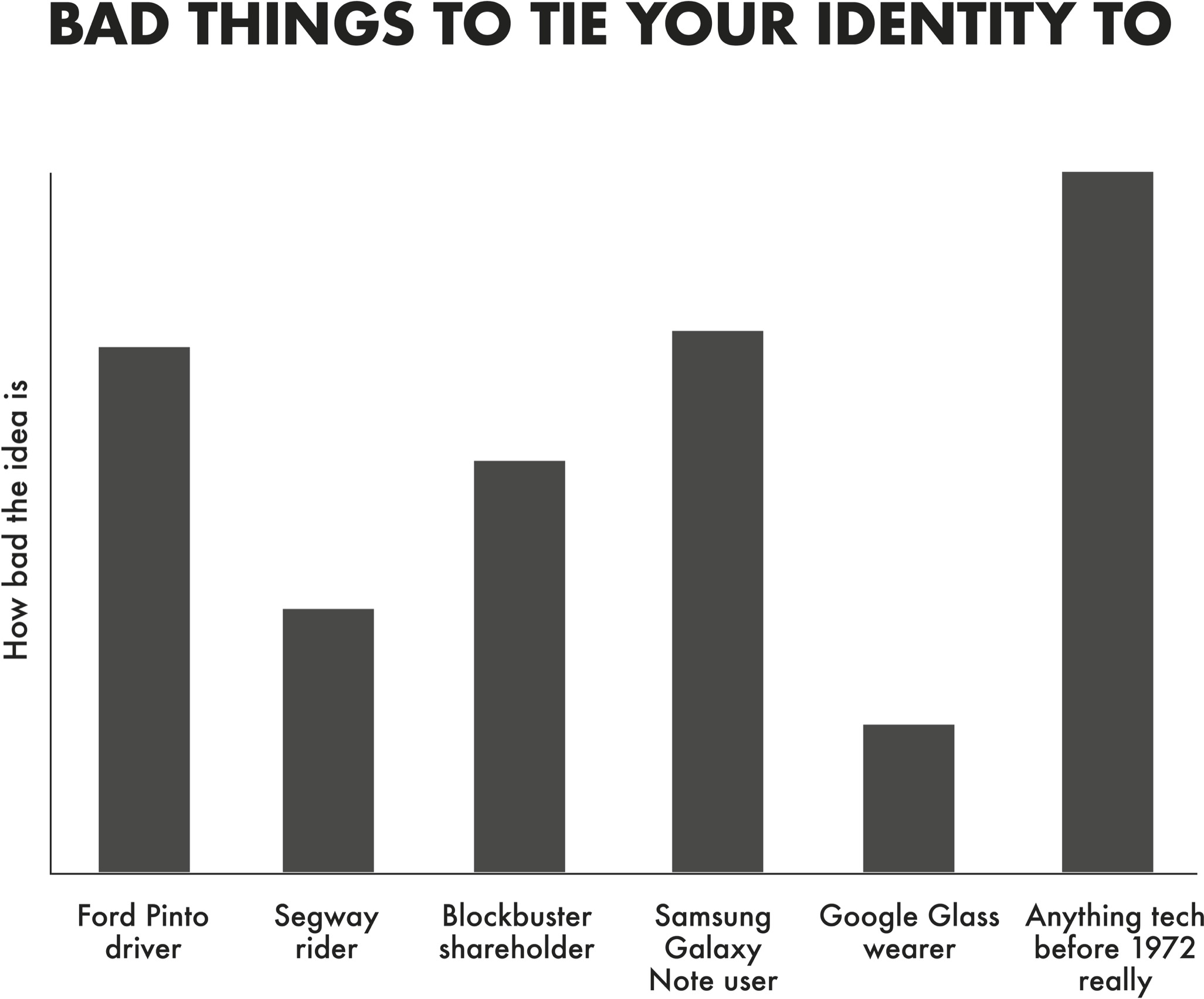

Rethinking isn’t a struggle in every part of our lives. When it comes to our possessions, we update with fervor. We refresh our wardrobes when they go out of style and renovate our kitchens when they’re no longer in vogue. When it comes to our knowledge and opinions, though, we tend to stick to our guns. Psychologists call this seizing and freezing. We favor the comfort of conviction over the discomfort of doubt, and we let our beliefs get brittle long before our bones. We laugh at people who still use Windows 95, yet we still cling to opinions that we formed in 1995. We listen to views that make us feel good, instead of ideas that make us think hard.

At some point, you’ve probably heard that if you drop a frog in a pot of scalding hot water, it will immediately leap out. But if you drop the frog in lukewarm water and gradually raise the temperature, the frog will die. It lacks the ability to rethink the situation and doesn’t realize the threat until it’s too late.

I did some research on this popular story recently and discovered a wrinkle: it isn’t true.

Tossed into the scalding pot, the frog will get burned badly and may or may not escape. The frog is actually better off in the slow-boiling pot: it will leap out as soon as the water starts to get uncomfortably warm.

It’s not the frogs who fail to reevaluate. It’s us. Once we hear the story and accept it as true, we rarely bother to question it.

As the Mann Gulch wildfire raced toward them, the smokejumpers had a decision to make. In an ideal world, they would have had enough time to pause, analyze the situation, and evaluate their options. With the fire raging less than 100 yards behind, there was no chance to stop and think. “On a big fire there is no time and no tree under whose shade the boss and the crew can sit and have a Platonic dialogue about a blowup,” scholar and former firefighter Norman Maclean wrote in Young Men and Fire, his award-winning chronicle of the disaster. “If Socrates had been foreman on the Mann Gulch fire, he and his crew would have been cremated while they were sitting there considering it.”

Dodge didn’t survive as a result of thinking slower. He made it out alive thanks to his ability to rethink the situation faster. Twelve smokejumpers paid the ultimate price because Dodge’s behavior didn’t make sense to them. They couldn’t rethink their assumptions in time.

Under acute stress, people typically revert to their automatic, well-learned responses. That’s evolutionarily adaptive—as long as you find yourself in the same kind of environment in which those reactions were necessary. If you’re a smokejumper, your well-learned response is to put out a fire, not start another one. If you’re fleeing for your life, your well-learned response is to run away from the fire, not toward it. In normal circumstances, those instincts might save your life. Dodge survived Mann Gulch because he swiftly overrode both of those responses.

No one had taught Dodge to build an escape fire. He hadn’t even heard of the concept; it was pure improvisation. Later, the other two survivors testified under oath that nothing resembling an escape fire was covered in their training. Many experts had spent their entire careers studying wildfires without realizing it was possible to stay alive by burning a hole through the blaze.

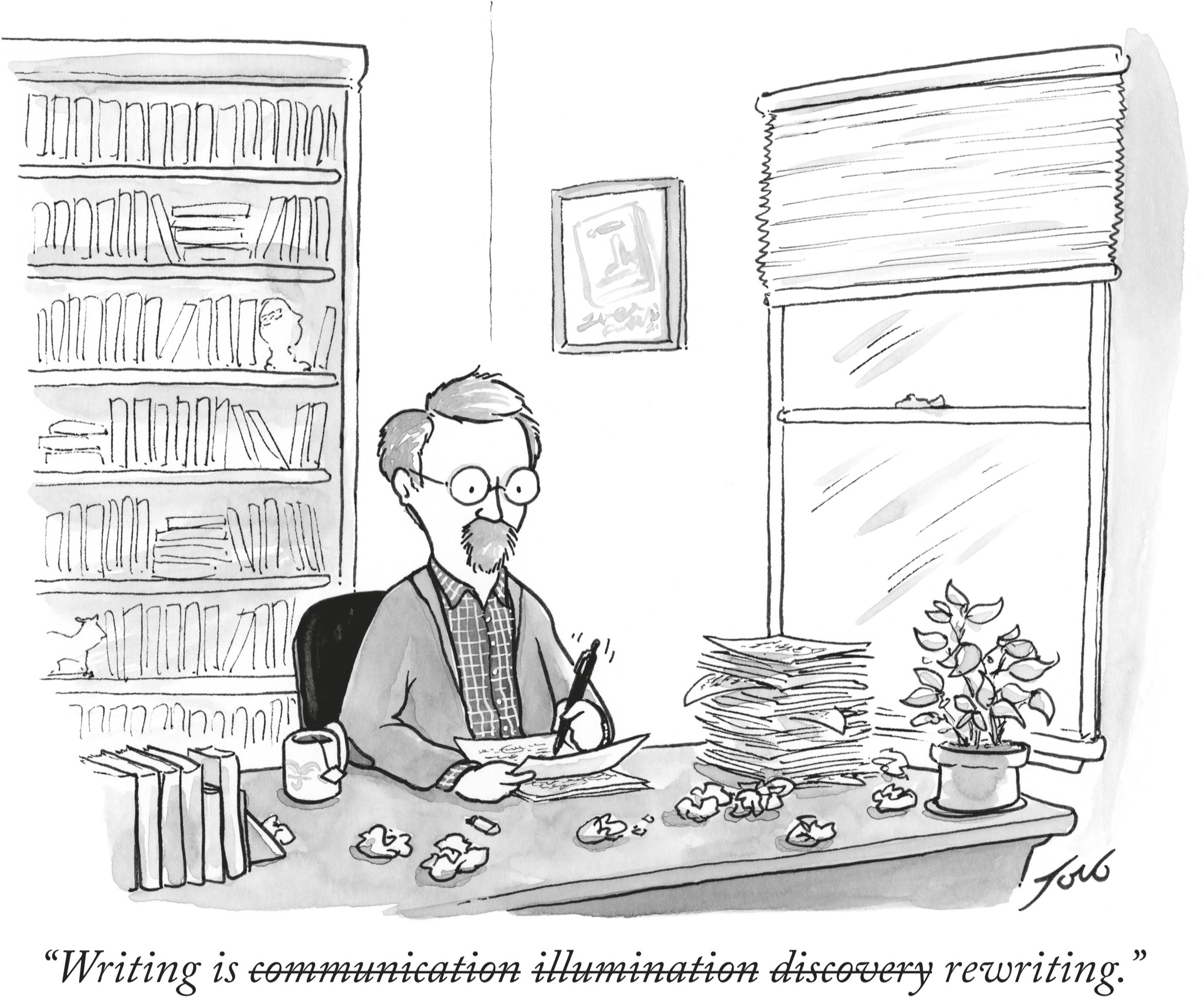

When I tell people about Dodge’s escape, they usually marvel at his resourcefulness under pressure: “That was genius!” Their astonishment quickly melts into dejection as they conclude that this kind of eureka moment is out of reach for mere mortals: “I got stumped by my fourth grader’s math homework.” Yet most acts of rethinking don’t require any special skill or ingenuity.

Moments earlier at Mann Gulch, the smokejumpers missed another opportunity to think again—and that one was right at their fingertips. Just before Dodge started tossing matches into the grass, he ordered his crew to drop their heavy equipment. They had spent the past eight minutes racing uphill while still carrying axes, saws, shovels, and 20-pound packs.

If you’re running for your life, it might seem obvious that your first move would be to drop anything that might slow you down. For firefighters, though, tools are essential to doing their jobs. Carrying and taking care of equipment is deeply ingrained in their training and experience. It wasn’t until Dodge gave his order that most of the smokejumpers set down their tools—and even then, one firefighter hung on to his shovel until a colleague took it out of his hands. If the crew had abandoned their tools sooner, would it have been enough to save them?

We’ll never know for certain, but Mann Gulch wasn’t an isolated incident. Between 1990 and 1995 alone, a total of twenty-three wildland firefighters perished trying to outrace fires uphill even though dropping their heavy equipment could have made the difference between life and death. In 1994, on Storm King Mountain in Colorado, high winds caused a fire to explode across a gulch. Running uphill on rocky ground with safety in view just 200 feet away, fourteen smokejumpers and wildland firefighters—four women, ten men—lost their lives.

Later, investigators calculated that without their tools and backpacks, the crew could have moved 15 to 20 percent faster. “Most would have lived had they simply dropped their gear and run for safety,” one expert wrote. Had they “dropped their packs and tools,” the U.S. Forest Service concurred, “the firefighters would have reached the top of the ridge before the fire.”

It’s reasonable to assume that at first the crew might have been running on autopilot, not even aware that they were still carrying their packs and tools. “About three hundred yards up the hill,” one of the Colorado survivors testified, “I then realized I still had my saw over my shoulder!” Even after making the wise decision to ditch the 25-pound chainsaw, he wasted valuable time: “I irrationally started looking for a place to put it down where it wouldn’t get burned. . . . I remember thinking, ‘I can’t believe I’m putting down my saw.’” One of the victims was found wearing his backpack, still clutching the handle of his chainsaw. Why would so many firefighters cling to a set of tools even though letting go might save their lives?

If you’re a firefighter, dropping your tools doesn’t just require you to unlearn habits and disregard instincts. Discarding your equipment means admitting failure and shedding part of your identity. You have to rethink your goal in your job—and your role in life. “Fires are not fought with bodies and bare hands, they are fought with tools that are often distinctive trademarks of firefighters,” organizational psychologist Karl Weick explains: “They are the firefighter’s reason for being deployed in the first place. . . . Dropping one’s tools creates an existential crisis. Without my tools, who am I?”

Wildland fires are relatively rare. Most of our lives don’t depend on split-second decisions that force us to reimagine our tools as a source of danger and a fire as a path to safety. Yet the challenge of rethinking assumptions is surprisingly common—maybe even common to all humans.

We all make the same kind of mistakes as smokejumpers and firefighters, but the consequences are less dire and therefore often go unnoticed. Our ways of thinking become habits that can weigh us down, and we don’t bother to question them until it’s too late. Expecting your squeaky brakes to keep working until they finally fail on the freeway. Believing the stock market will keep going up after analysts warn of an impending real estate bubble. Assuming your marriage is fine despite your partner’s increasing emotional distance. Feeling secure in your job even though some of your colleagues have been laid off.

This book is about the value of rethinking. It’s about adopting the kind of mental flexibility that saved Wagner Dodge’s life. It’s also about succeeding where he failed: encouraging that same agility in others.

You may not carry an ax or a shovel, but you do have some cognitive tools that you use regularly. They might be things you know, assumptions you make, or opinions you hold. Some of them aren’t just part of your job—they’re part of your sense of self.

Consider a group of students who built what has been called Harvard’s first online social network. Before they arrived at college, they had already connected more than an eighth of the entering freshman class in an “e-group.” But once they got to Cambridge, they abandoned the network and shut it down. Five years later Mark Zuckerberg started Facebook on the same campus.

From time to time, the students who created the original e-group have felt some pangs of regret. I know, because I was one of the cofounders of that group.

Let’s be clear: I never would have had the vision for what Facebook became. In hindsight, though, my friends and I clearly missed a series of chances for rethinking the potential of our platform. Our first instinct was to use the e-group to make new friends for ourselves; we didn’t consider whether it would be of interest to students at other schools or in life beyond school. Our well-learned habit was to use online tools to connect with people far away; once we lived within walking distance on the same campus, we figured we no longer needed the e-group. Although one of the cofounders was studying computer science and another early member had already founded a successful tech startup, we made the flawed assumption that an online social network was a passing hobby, not a huge part of the future of the internet. Since I didn’t know how to code, I didn’t have the tools to build something more sophisticated. Launching a company wasn’t part of my identity anyway: I saw myself as a college freshman, not a budding entrepreneur.

Since then, rethinking has become central to my sense of self. I’m a psychologist but I’m not a fan of Freud, I don’t have a couch in my office, and I don’t do therapy. As an organizational psychologist at Wharton, I’ve spent the past fifteen years researching and teaching evidence-based management. As an entrepreneur of data and ideas, I’ve been called by organizations like Google, Pixar, the NBA, and the Gates Foundation to help them reexamine how they design meaningful jobs, build creative teams, and shape collaborative cultures. My job is to think again about how we work, lead, and live—and enable others to do the same.

I can’t think of a more vital time for rethinking. As the coronavirus pandemic unfolded, many leaders around the world were slow to rethink their assumptions—first that the virus wouldn’t affect their countries, next that it would be no deadlier than the flu, and then that it could only be transmitted by people with visible symptoms. The cost in human life is still being tallied.

In the past year we’ve all had to put our mental pliability to the test. We’ve been forced to question assumptions that we had long taken for granted: That it’s safe to go to the hospital, eat in a restaurant, and hug our parents or grandparents. That live sports will always be on TV and most of us will never have to work remotely or homeschool our kids. That we can get toilet paper and hand sanitizer whenever we need them.

In the midst of the pandemic, multiple acts of police brutality led many people to rethink their views on racial injustice and their roles in fighting it. The senseless deaths of three Black citizens—George Floyd, Breonna Taylor, and Ahmaud Arbery—left millions of white people realizing that just as sexism is not only a women’s issue, racism is not only an issue for people of color. As waves of protest swept the nation, across the political spectrum, support for the Black Lives Matter movement climbed nearly as much in the span of two weeks as it had in the previous two years. Many of those who had long been unwilling or unable to acknowledge it quickly came to grips with the harsh reality of systemic racism that still pervades America. Many of those who had long been silent came to reckon with their responsibility to become antiracists and act against prejudice.

Despite these shared experiences, we live in an increasingly divisive time. For some people a single mention of kneeling during the national anthem is enough to end a friendship. For others a single ballot at a voting booth is enough to end a marriage. Calcified ideologies are tearing American culture apart. Even our great governing document, the U.S. Constitution, allows for amendments. What if we were quicker to make amendments to our own mental constitutions?

My aim in this book is to explore how rethinking happens. I sought out the most compelling evidence and some of the world’s most skilled rethinkers. The first section focuses on opening our own minds. You’ll find out why a forward-thinking entrepreneur got trapped in the past, why a long-shot candidate for public office came to see impostor syndrome as an advantage, how a Nobel Prize–winning scientist embraces the joy of being wrong, how the world’s best forecasters update their views, and how an Oscar-winning filmmaker has productive fights.

The second section examines how we can encourage other people to think again. You’ll learn how an international debate champion wins arguments and a Black musician persuades white supremacists to abandon hate. You’ll discover how a special kind of listening helped a doctor open parents’ minds about vaccines, and helped a legislator convince a Ugandan warlord to join her in peace talks. And if you’re a Yankees fan, I’m going to see if I can convince you to root for the Red Sox.

The third section is about how we can create communities of lifelong learners. In social life, a lab that specializes in difficult conversations will shed light on how we can communicate better about polarizing issues like abortion and climate change. In schools, you’ll find out how educators teach kids to think again by treating classrooms like museums, approaching projects like carpenters, and rewriting time-honored textbooks. At work, you’ll explore how to build learning cultures with the first Hispanic woman in space, who took the reins at NASA to prevent accidents after space shuttle Columbia disintegrated. I close by reflecting on the importance of reconsidering our best-laid plans.

It’s a lesson that firefighters have learned the hard way. In the heat of the moment, Wagner Dodge’s impulse to drop his heavy tools and take shelter in a fire of his own making made the difference between life and death. But his inventiveness wouldn’t have even been necessary if not for a deeper, more systemic failure to think again. The greatest tragedy of Mann Gulch is that a dozen smokejumpers died fighting a fire that never needed to be fought.

As early as the 1880s, scientists had begun highlighting the important role that wildfires play in the life cycles of forests. Fires remove dead matter, send nutrients into the soil, and clear a path for sunlight. When fires are suppressed, forests are left too dense. The accumulation of brush, dry leaves, and twigs becomes fuel for more explosive wildfires.

Yet it wasn’t until 1978 that the U.S. Forest Service put an end to its policy that every fire spotted should be extinguished by 10:00 a.m. the following day. The Mann Gulch wildfire took place in a remote area where human lives were not at risk. The smokejumpers were called in anyway because no one in their community, their organization, or their profession had done enough to question the assumption that wildfires should not be allowed to run their course.

This book is an invitation to let go of knowledge and opinions that are no longer serving you well, and to anchor your sense of self in flexibility rather than consistency. If you can master the art of rethinking, I believe you’ll be better positioned for success at work and happiness in life. Thinking again can help you generate new solutions to old problems and revisit old solutions to new problems. It’s a path to learning more from the people around you and living with fewer regrets. A hallmark of wisdom is knowing when it’s time to abandon some of your most treasured tools—and some of the most cherished parts of your identity.

PART I

Individual Rethinking

Updating Our Own Views

CHAPTER 1

A Preacher, a Prosecutor, a Politician, and a Scientist Walk into Your Mind

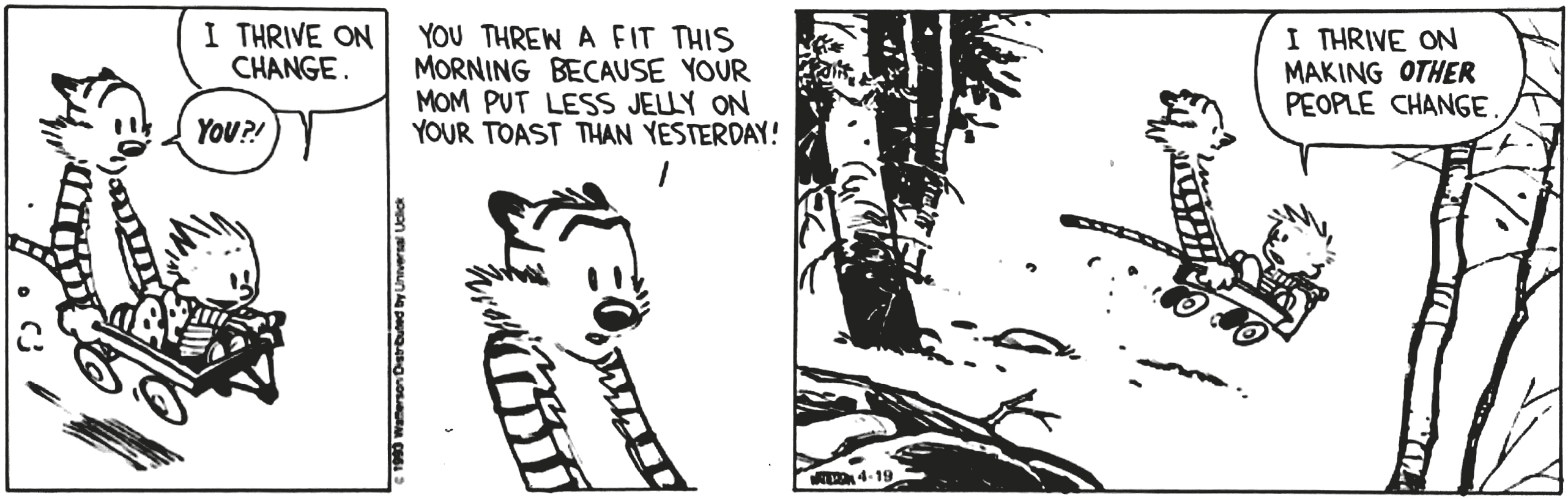

Progress is impossible without change; and those who cannot change their minds cannot change anything.

—George Bernard Shaw

You probably don’t recognize his name, but Mike Lazaridis has had a defining impact on your life. From an early age, it was clear that Mike was something of an electronics wizard. By the time he turned four, he was building his own record player out of Legos and rubber bands. In high school, when his teachers had broken TVs, they called Mike to fix them. In his spare time, he built a computer and designed a better buzzer for high school quiz-bowl teams, which ended up paying for his first year of college. Just months before finishing his electrical engineering degree, Mike did what so many great entrepreneurs of his era would do: he dropped out of college. It was time for this son of immigrants to make his mark on the world.

Mike’s first success came when he patented a device for reading the bar codes on movie film, which was so useful in Hollywood that it won an Emmy and an Oscar for technical achievement. That was small potatoes compared to his next big invention, which made his firm the fastest-growing company on the planet. Mike’s flagship device quickly attracted a cult following, with loyal customers ranging from Bill Gates to Christina Aguilera. “It’s literally changed my life,” Oprah Winfrey gushed. “I cannot live without this.” When President Obama arrived at the White House, he refused to relinquish his to the Secret Service.

Mike Lazaridis dreamed up the idea for the BlackBerry as a wireless communication device for sending and receiving emails. As of the summer of 2009, it accounted for nearly half of the U.S. smartphone market. By 2014, its market share had plummeted to less than 1 percent.

When a company takes a nosedive like that, we can never pinpoint a single cause of its downfall, so we tend to anthropomorphize it: BlackBerry failed to adapt. Yet adapting to a changing environment isn’t something a company does—it’s something people do in the multitude of decisions they make every day. As the cofounder, president, and co-CEO, Mike was in charge of all the technical and product decisions on the BlackBerry. Although his thinking may have been the spark that ignited the smartphone revolution, his struggles with rethinking ended up sucking the oxygen out of his company and virtually extinguishing his invention. Where did he go wrong?

Most of us take pride in our knowledge and expertise, and in staying true to our beliefs and opinions. That makes sense in a stable world, where we get rewarded for having conviction in our ideas. The problem is that we live in a rapidly changing world, where we need to spend as much time rethinking as we do thinking.

Rethinking is a skill set, but it’s also a mindset. We already have many of the mental tools we need. We just have to remember to get them out of the shed and remove the rust.

SECOND THOUGHTS

With advances in access to information and technology, knowledge isn’t just increasing. It’s increasing at an increasing rate. In 2011, you consumed about five times as much information per day as you would have just a quarter century earlier. As of 1950, it took about fifty years for knowledge in medicine to double. By 1980, medical knowledge was doubling every seven years, and by 2010, it was doubling in half that time. The accelerating pace of change means that we need to question our beliefs more readily than ever before.

This is not an easy task. As we sit with our beliefs, they tend to become more extreme and more entrenched. I’m still struggling to accept that Pluto may not be a planet. In education, after revelations in history and revolutions in science, it often takes years for a curriculum to be updated and textbooks to be revised. Researchers have recently discovered that we need to rethink widely accepted assumptions about such subjects as Cleopatra’s roots (her father was Greek, not Egyptian, and her mother’s identity is unknown); the appearance of dinosaurs (paleontologists now think some tyrannosaurs had colorful feathers on their backs); and what’s required for sight (blind people have actually trained themselves to “see”—sound waves can activate the visual cortex and create representations in the mind’s eye, much like how echolocation helps bats navigate in the dark).* Vintage records, classic cars, and antique clocks might be valuable collectibles, but outdated facts are mental fossils that are best abandoned.

We’re swift to recognize when other people need to think again. We question the judgment of experts whenever we seek out a second opinion on a medical diagnosis. Unfortunately, when it comes to our own knowledge and opinions, we often favor feeling right over being right. In everyday life, we make many diagnoses of our own, ranging from whom we hire to whom we marry. We need to develop the habit of forming our own second opinions.

Imagine you have a family friend who’s a financial adviser, and he recommends investing in a retirement fund that isn’t in your employer’s plan. You have another friend who’s fairly knowledgeable about investing, and he tells you that this fund is risky. What would you do?

When a man named Stephen Greenspan found himself in that situation, he decided to weigh his skeptical friend’s warning against the data available. His sister had been investing in the fund for several years, and she was pleased with the results. A number of her friends had been, too; although the returns weren’t extraordinary, they were consistently in the double digits. The financial adviser was enough of a believer that he had invested his own money in the fund. Armed with that information, Greenspan decided to go forward. He made a bold move, investing nearly a third of his retirement savings in the fund. Before long, he learned that his portfolio had grown by 25 percent.

Then he lost it all overnight when the fund collapsed. It was the Ponzi scheme managed by Bernie Madoff.

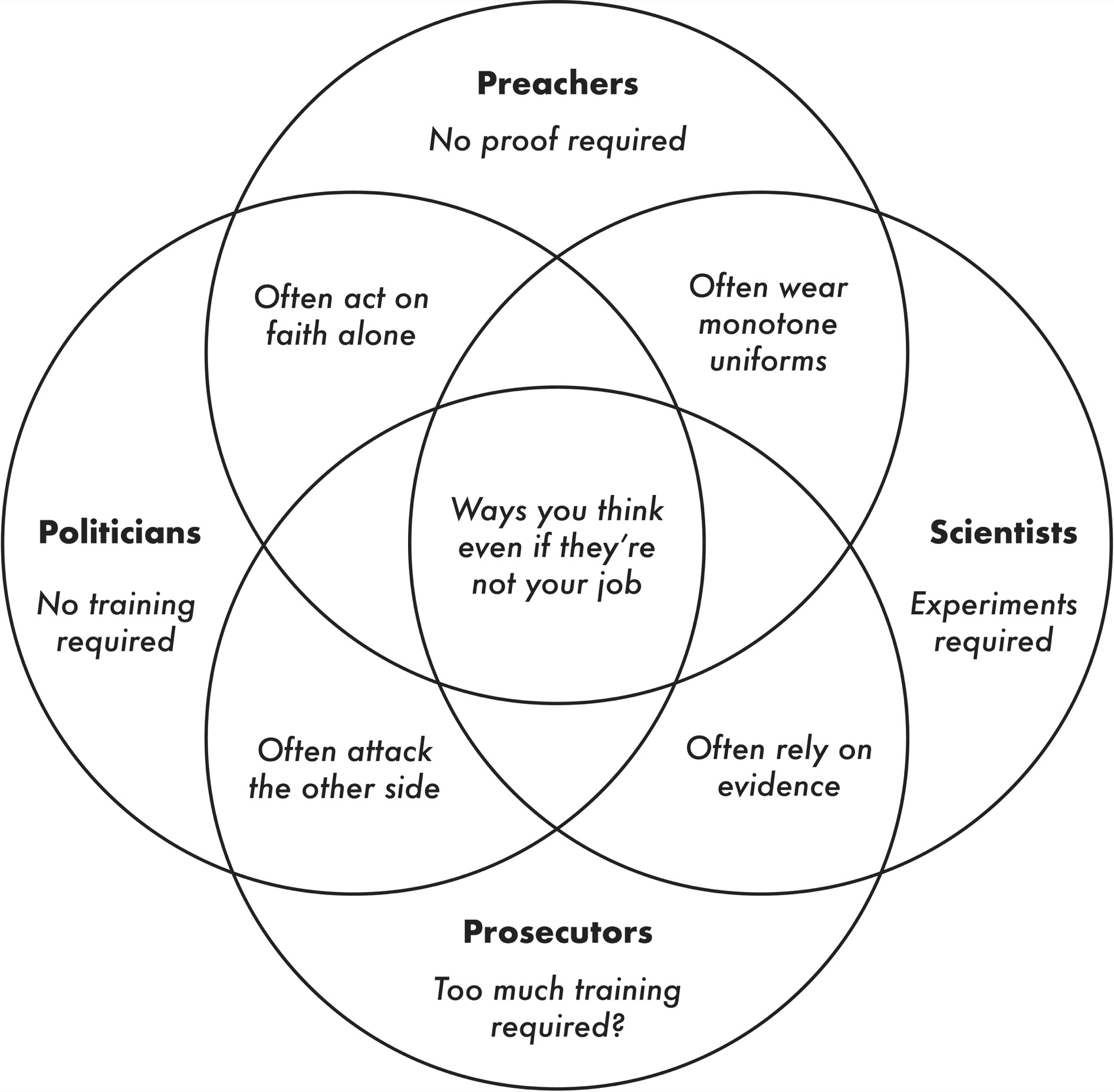

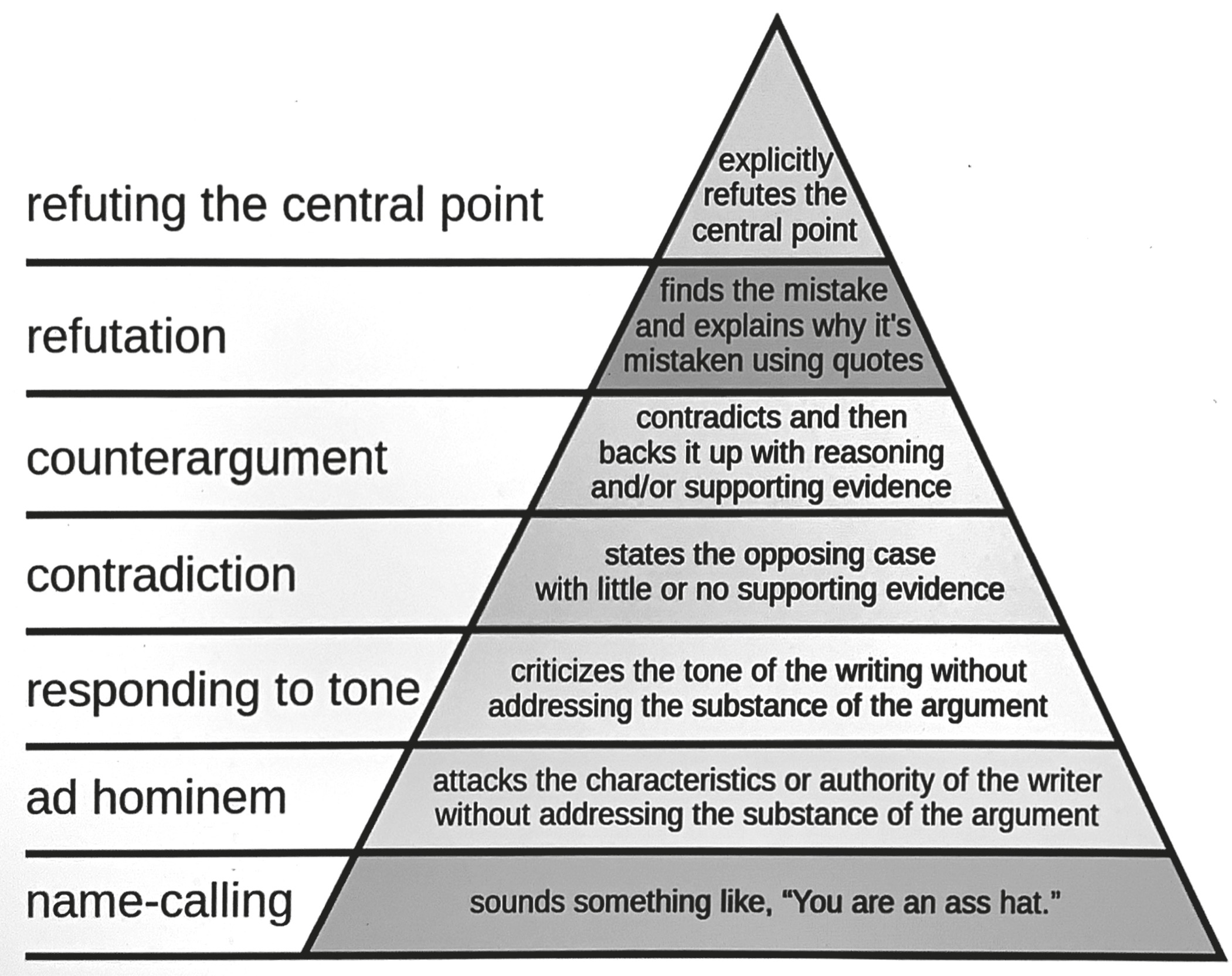

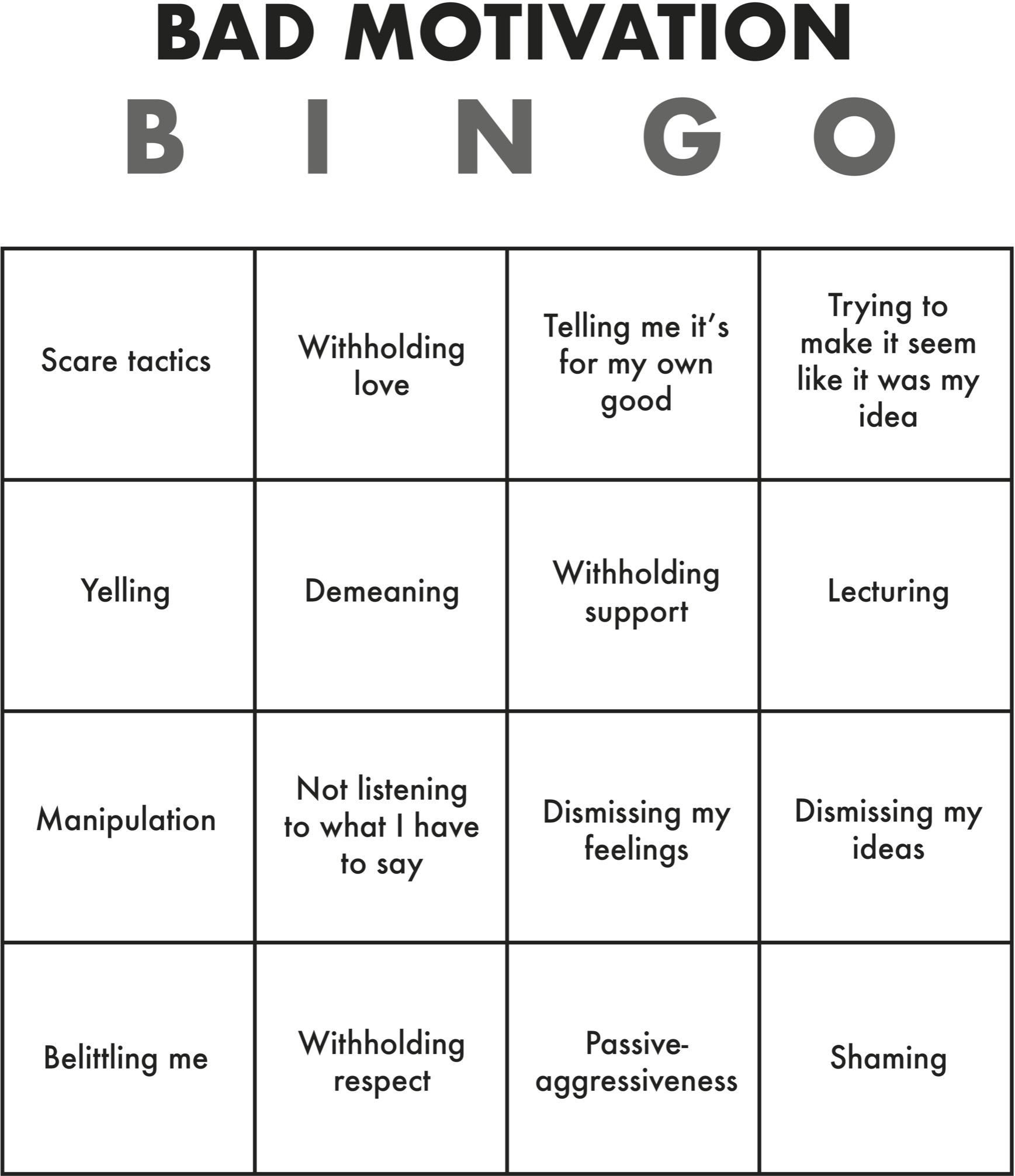

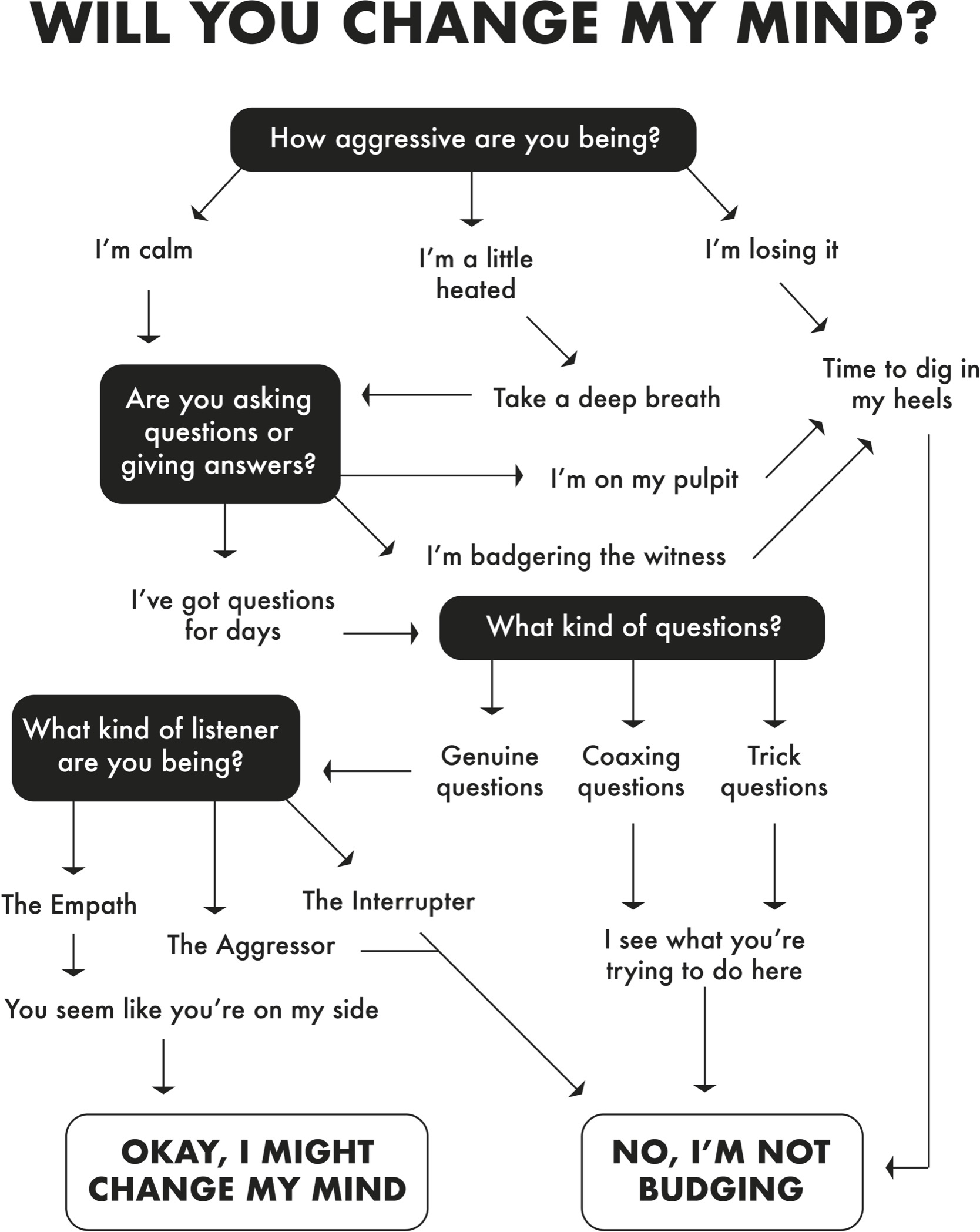

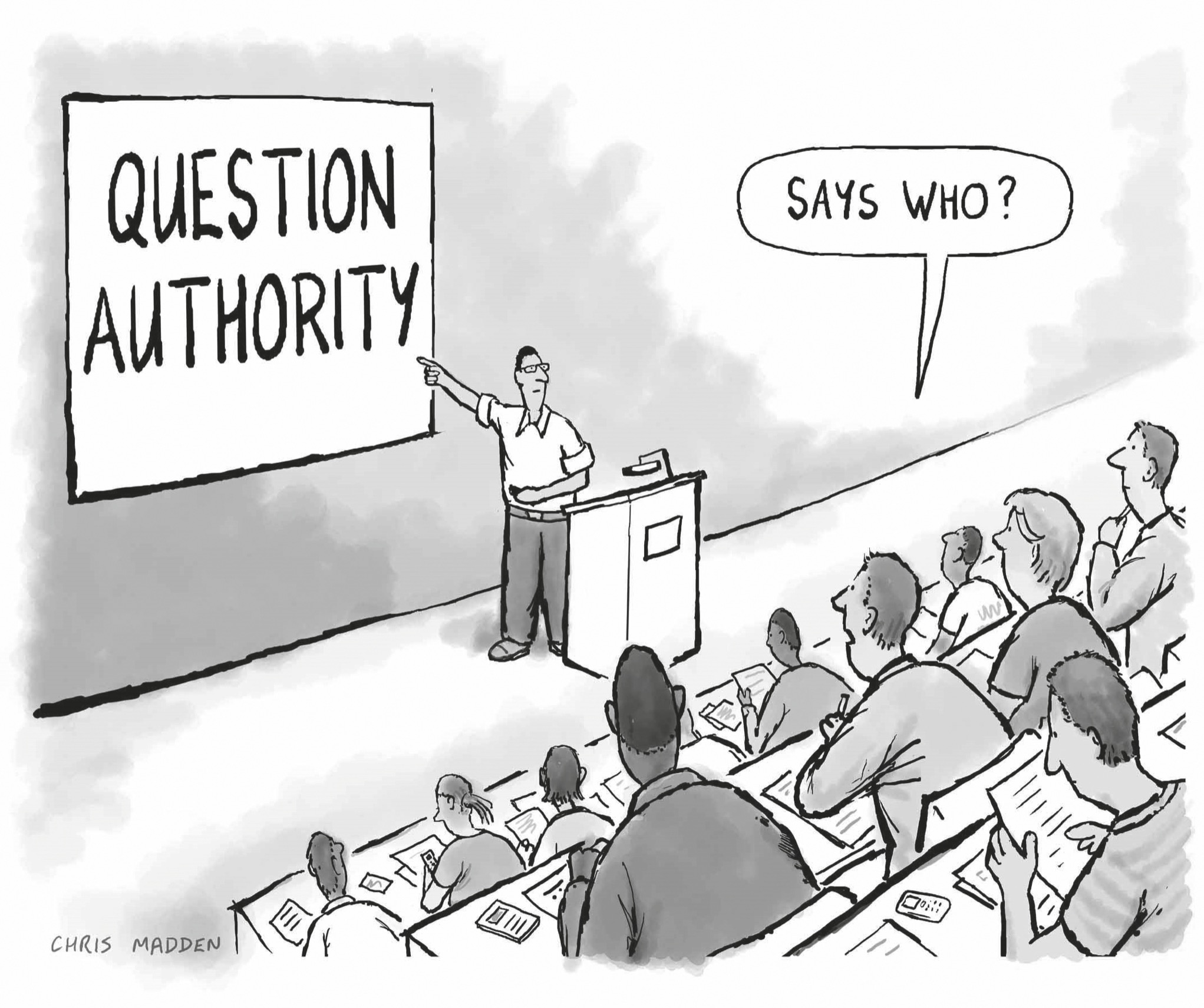

Two decades ago my colleague Phil Tetlock discovered something peculiar. As we think and talk, we often slip into the mindsets of three different professions: preachers, prosecutors, and politicians. In each of these modes, we take on a particular identity and use a distinct set of tools. We go into preacher mode when our sacred beliefs are in jeopardy: we deliver sermons to protect and promote our ideals. We enter prosecutor mode when we recognize flaws in other people’s reasoning: we marshal arguments to prove them wrong and win our case. We shift into politician mode when we’re seeking to win over an audience: we campaign and lobby for the approval of our constituents. The risk is that we become so wrapped up in preaching that we’re right, prosecuting others who are wrong, and politicking for support that we don’t bother to rethink our own views.

When Stephen Greenspan and his sister made the choice to invest with Bernie Madoff, it wasn’t because they relied on just one of those mental tools. All three modes together contributed to their ill-fated decision. When his sister told him about the money she and her friends had made, she was preaching about the merits of the fund. Her confidence led Greenspan to prosecute the friend who warned him against investing, deeming the friend guilty of “knee-jerk cynicism.” Greenspan was in politician mode when he let his desire for approval sway him toward a yes—the financial adviser was a family friend whom he liked and wanted to please.

Any of us could have fallen into those traps. Greenspan says that he should’ve known better, though, because he happens to be an expert on gullibility. When he decided to go ahead with the investment, he had almost finished writing a book on why we get duped. Looking back, he wishes he had approached the decision with a different set of tools. He might have analyzed the fund’s strategy more systematically instead of simply trusting in the results. He could have sought out more perspectives from credible sources. He could have experimented with investing smaller amounts over a longer period of time before gambling so much of his life’s savings.

That would have put him in the mode of a scientist.

A DIFFERENT PAIR OF GOGGLES

If you’re a scientist by trade, rethinking is fundamental to your profession. You’re paid to be constantly aware of the limits of your understanding. You’re expected to doubt what you know, be curious about what you don’t know, and update your views based on new data. In the past century alone, the application of scientific principles has led to dramatic progress. Biological scientists discovered penicillin. Rocket scientists sent us to the moon. Computer scientists built the internet.

But being a scientist is not just a profession. It’s a frame of mind—a mode of thinking that differs from preaching, prosecuting, and politicking. We move into scientist mode when we’re searching for the truth: we run experiments to test hypotheses and discover knowledge. Scientific tools aren’t reserved for people with white coats and beakers, and using them doesn’t require toiling away for years with a microscope and a petri dish. Hypotheses have as much of a place in our lives as they do in the lab. Experiments can inform our daily decisions. That makes me wonder: is it possible to train people in other fields to think more like scientists, and if so, do they end up making smarter choices?

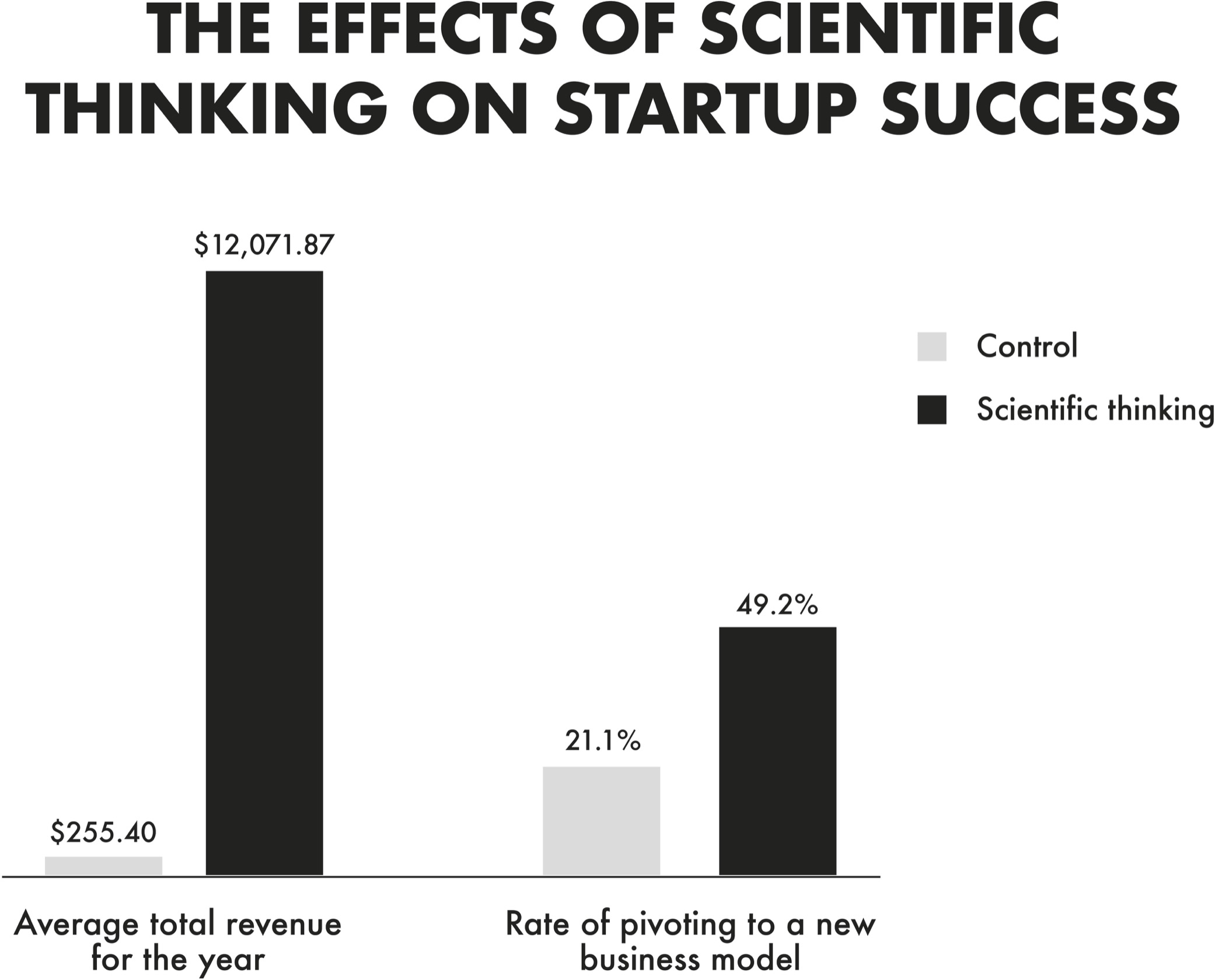

Recently, a quartet of European researchers decided to find out. They ran a bold experiment with more than a hundred founders of Italian startups in technology, retail, furniture, food, health care, leisure, and machinery. Most of the founders’ businesses had yet to bring in any revenue, making it an ideal setting to investigate how teaching scientific thinking would influence the bottom line.

The entrepreneurs arrived in Milan for a training program in entrepreneurship. Over the course of four months, they learned to create a business strategy, interview customers, build a minimum viable product, and then refine a prototype. What they didn’t know was that they’d been randomly assigned to either a “scientific thinking” group or a control group. The training for both groups was identical, except that one was encouraged to view startups through a scientist’s goggles. From that perspective, their strategy is a theory, customer interviews help to develop hypotheses, and their minimum viable product and prototype are experiments to test those hypotheses. Their task is to rigorously measure the results and make decisions based on whether their hypotheses are supported or refuted.

Over the following year, the startups in the control group averaged under $300 in revenue. The startups in the scientific thinking group averaged over $12,000 in revenue. They brought in revenue more than twice as fast—and attracted customers sooner, too. Why? The entrepreneurs in the control group tended to stay wedded to their original strategies and products. It was too easy to preach the virtues of their past decisions, prosecute the vices of alternative options, and politick by catering to advisers who favored the existing direction. The entrepreneurs who had been taught to think like scientists, in contrast, pivoted more than twice as often. When their hypotheses weren’t supported, they knew it was time to rethink their business models.

What’s surprising about these results is that we typically celebrate great entrepreneurs and leaders for being strong-minded and clear-sighted. They’re supposed to be paragons of conviction: decisive and certain. Yet evidence reveals that when business executives compete in tournaments to price products, the best strategists are actually slow and unsure. Like careful scientists, they take their time so they have the flexibility to change their minds. I’m beginning to think decisiveness is overrated . . . but I reserve the right to change my mind.

Just as you don’t have to be a professional scientist to reason like one, being a professional scientist doesn’t guarantee that someone will use the tools of their training. Scientists morph into preachers when they present their pet theories as gospel and treat thoughtful critiques as sacrilege. They veer into politician terrain when they allow their views to be swayed by popularity rather than accuracy. They enter prosecutor mode when they’re hell-bent on debunking and discrediting rather than discovering. After upending physics with his theories of relativity, Einstein opposed the quantum revolution: “To punish me for my contempt of authority, Fate has made me an authority myself.” Sometimes even great scientists need to think more like scientists.

Decades before becoming a smartphone pioneer, Mike Lazaridis was recognized as a science prodigy. In middle school, he made the local news for building a solar panel at the science fair and won an award for reading every science book in the public library. If you open his eighth-grade yearbook, you’ll see a cartoon showing Mike as a mad scientist, with bolts of lightning shooting out of his head.

When Mike created the BlackBerry, he was thinking like a scientist. Existing devices for wireless email featured a stylus that was too slow or a keyboard that was too small. People had to clunkily forward their work emails to their mobile device in-boxes, and they took forever to download. He started generating hypotheses and sent his team of engineers off to test them. What if people could hold the device in their hands and type with their thumbs rather than their fingers? What if there was a single mailbox synchronized across devices? What if messages could be relayed through a server and appear on the device only after they were decrypted?

As other companies followed BlackBerry’s lead, Mike would take their smartphones apart and study them. Nothing really impressed him until the summer of 2007, when he was stunned by the computing power inside the first iPhone. “They’ve put a Mac in this thing,” he said. What Mike did next might have been the beginning of the end for the BlackBerry. If the BlackBerry’s rise was due in large part to his success in scientific thinking as an engineer, its demise was in many ways the result of his failure in rethinking as a CEO.

As the iPhone skyrocketed onto the scene, Mike maintained his belief in the features that had made the BlackBerry a sensation in the past. He was confident that people wanted a wireless device for work emails and calls, not an entire computer in their pocket with apps for home entertainment. As early as 1997, one of his top engineers wanted to add an internet browser, but Mike told him to focus only on email. A decade later, Mike was still certain that a powerful internet browser would drain the battery and strain the bandwidth of wireless networks. He didn’t test the alternative hypotheses.

By 2008, the company’s valuation exceeded $70 billion, but the BlackBerry remained the company’s sole product, and it still lacked a reliable browser. In 2010, when his colleagues pitched a strategy to feature encrypted text messages, Mike was receptive but expressed concerns that allowing messages to be exchanged on competitors’ devices would render the BlackBerry obsolete. As his reservations gained traction within the firm, the company abandoned instant messaging, missing an opportunity that WhatsApp later seized on its way to a sale to Facebook for upwards of $19 billion. As gifted as Mike was at rethinking the design of electronic devices, he wasn’t willing to rethink the market for his baby. Intelligence was no cure—it might have been more of a curse.

THE SMARTER THEY ARE, THE HARDER THEY FAIL

Mental horsepower doesn’t guarantee mental dexterity. No matter how much brainpower you have, if you lack the motivation to change your mind, you’ll miss many occasions to think again. Research reveals that the higher you score on an IQ test, the more likely you are to fall for stereotypes, because you’re faster at recognizing patterns. And recent experiments suggest that the smarter you are, the more you might struggle to update your beliefs.

One study investigated whether being a math whiz makes you better at analyzing data. The answer is yes—if you’re told the data are about something bland, like a treatment for skin rashes. But what if the exact same data are labeled as focusing on an ideological issue that activates strong emotions—like gun laws in the United States?

Being a quant jock makes you more accurate in interpreting the results—as long as they support your beliefs. Yet if the empirical pattern clashes with your ideology, math prowess is no longer an asset; it actually becomes a liability. The better you are at crunching numbers, the more spectacularly you fail at analyzing patterns that contradict your views. If they were liberals, math geniuses did worse than their peers at evaluating evidence that gun bans failed. If they were conservatives, they did worse at assessing evidence that gun bans worked.

In psychology there are at least two biases that drive this pattern. One is confirmation bias: seeing what we expect to see. The other is desirability bias: seeing what we want to see. These biases don’t just prevent us from applying our intelligence. They can actually contort our intelligence into a weapon against the truth. We find reasons to preach our faith more deeply, prosecute our case more passionately, and ride the tidal wave of our political party. The tragedy is that we’re usually unaware of the resulting flaws in our thinking.

My favorite bias is the “I’m not biased” bias, in which people believe they’re more objective than others. It turns out that smart people are more likely to fall into this trap. The brighter you are, the harder it can be to see your own limitations. Being good at thinking can make you worse at rethinking.

When we’re in scientist mode, we refuse to let our ideas become ideologies. We don’t start with answers or solutions; we lead with questions and puzzles. We don’t preach from intuition; we teach from evidence. We don’t just have healthy skepticism about other people’s arguments; we dare to disagree with our own arguments.

Thinking like a scientist involves more than just reacting with an open mind. It means being actively open-minded. It requires searching for reasons why we might be wrong—not for reasons why we must be right—and revising our views based on what we learn.

That rarely happens in the other mental modes. In preacher mode, changing our minds is a mark of moral weakness; in scientist mode, it’s a sign of intellectual integrity. In prosecutor mode, allowing ourselves to be persuaded is admitting defeat; in scientist mode, it’s a step toward the truth. In politician mode, we flip-flop in response to carrots and sticks; in scientist mode, we shift in the face of sharper logic and stronger data.

I’ve done my best to write this book in scientist mode.* I’m a teacher, not a preacher. I can’t stand politics, and I hope a decade as a tenured professor has cured me of whatever temptation I once felt to appease my audience. Although I’ve spent more than my share of time in prosecutor mode, I’ve decided that in a courtroom I’d rather be the judge. I don’t expect you to agree with everything I think. My hope is that you’ll be intrigued by how I think—and that the studies, stories, and ideas covered here will lead you to do some rethinking of your own. After all, the purpose of learning isn’t to affirm our beliefs; it’s to evolve our beliefs.

One of my beliefs is that we shouldn’t be open-minded in every circumstance. There are situations where it might make sense to preach, prosecute, and politick. That said, I think most of us would benefit from being more open more of the time, because it’s in scientist mode that we gain mental agility.

When psychologist Mihaly Csikszentmihalyi studied eminent scientists like Linus Pauling and Jonas Salk, he concluded that what differentiated them from their peers was their cognitive flexibility, their willingness “to move from one extreme to the other as the occasion requires.” The same pattern held for great artists, and it emerged again in an independent study of highly creative architects.

We can even see it in the Oval Office. Experts assessed American presidents on a long list of personality traits and compared them to rankings by independent historians and political scientists. Only one trait consistently predicted presidential greatness after controlling for factors like years in office, wars, and scandals. It wasn’t whether presidents were ambitious or forceful, friendly or Machiavellian; it wasn’t whether they were attractive, witty, poised, or polished.

What set great presidents apart was their intellectual curiosity and openness. They read widely and were as eager to learn about developments in biology, philosophy, architecture, and music as in domestic and foreign affairs. They were interested in hearing new views and revising their old ones. They saw many of their policies as experiments to run, not points to score. Although they might have been politicians by profession, they often solved problems like scientists.

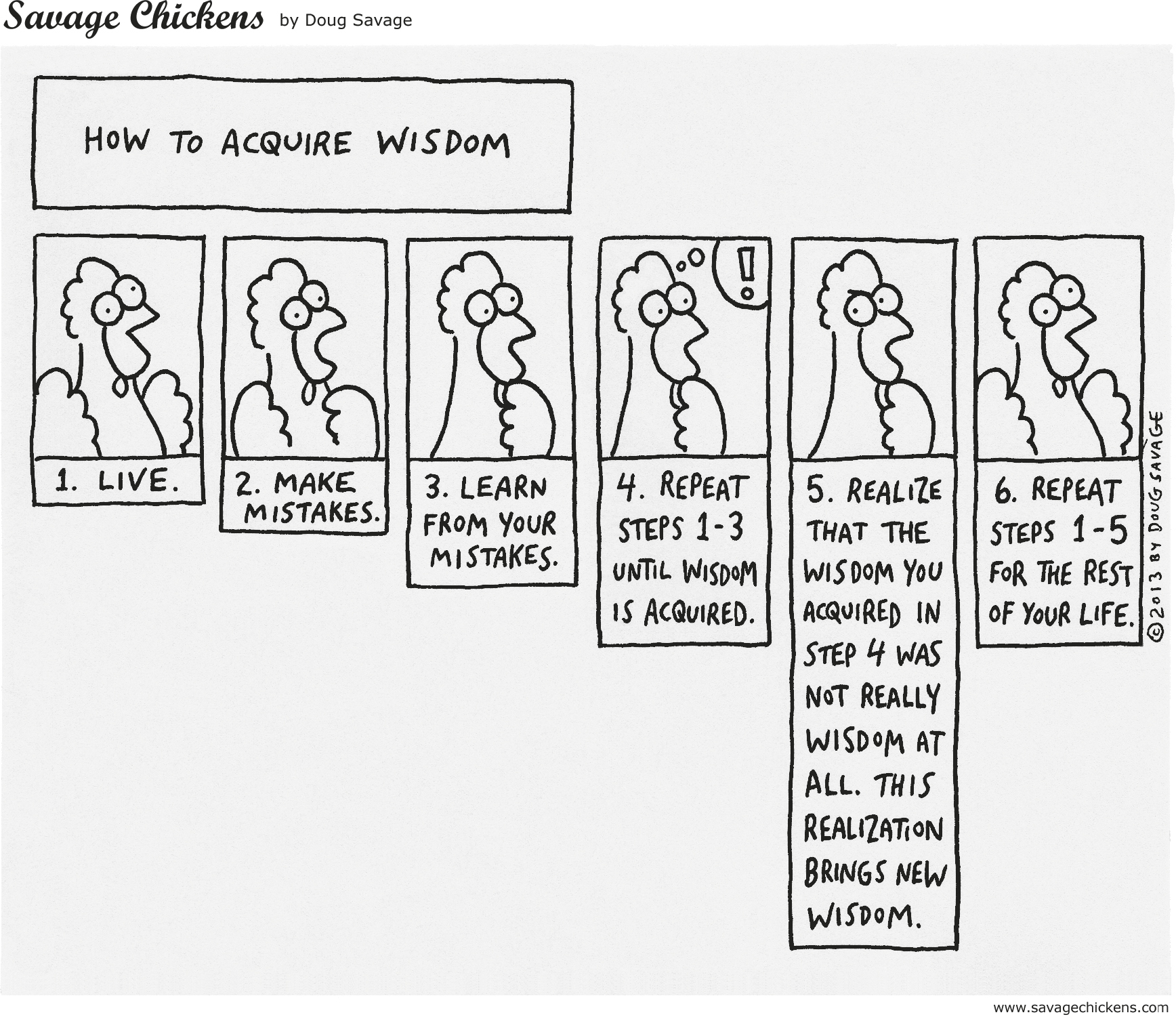

DON’T STOP UNBELIEVING

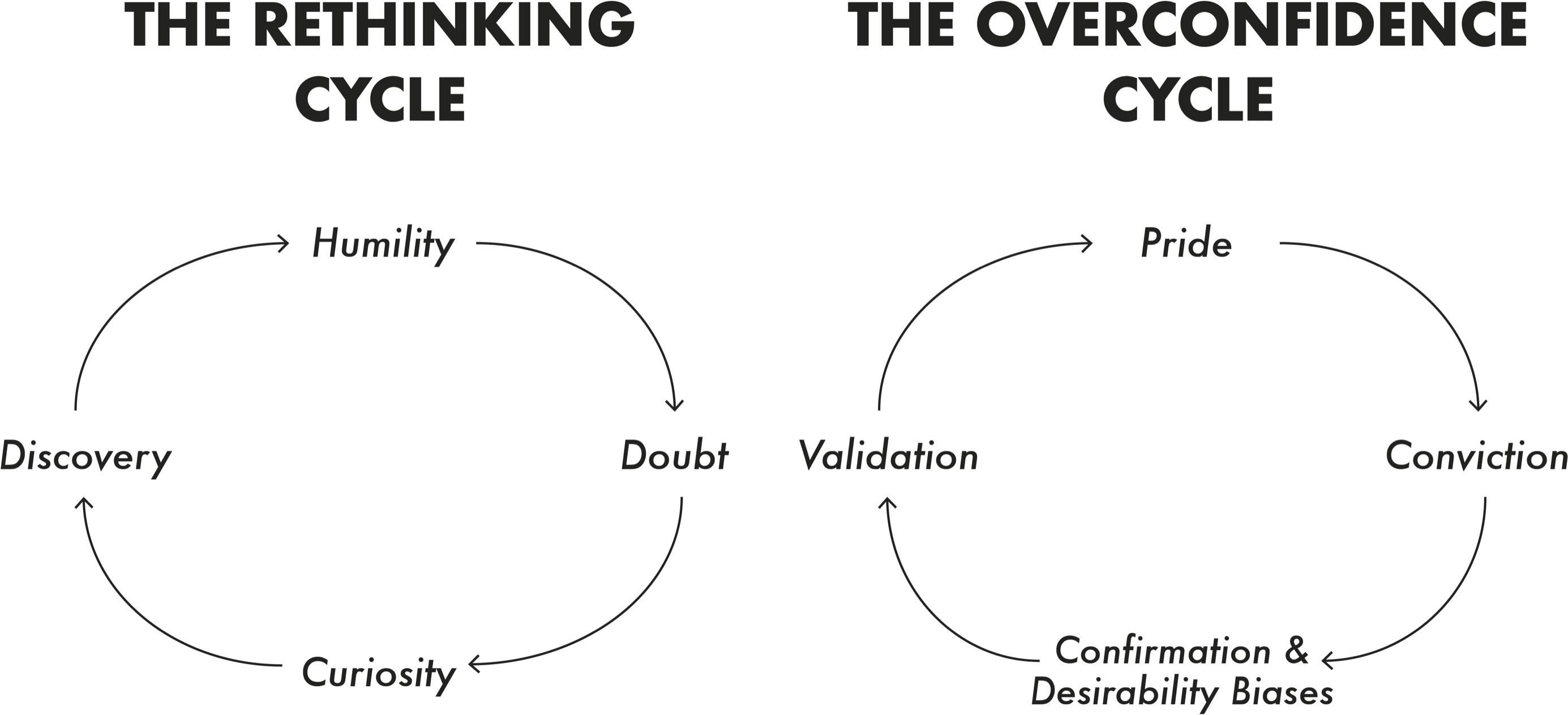

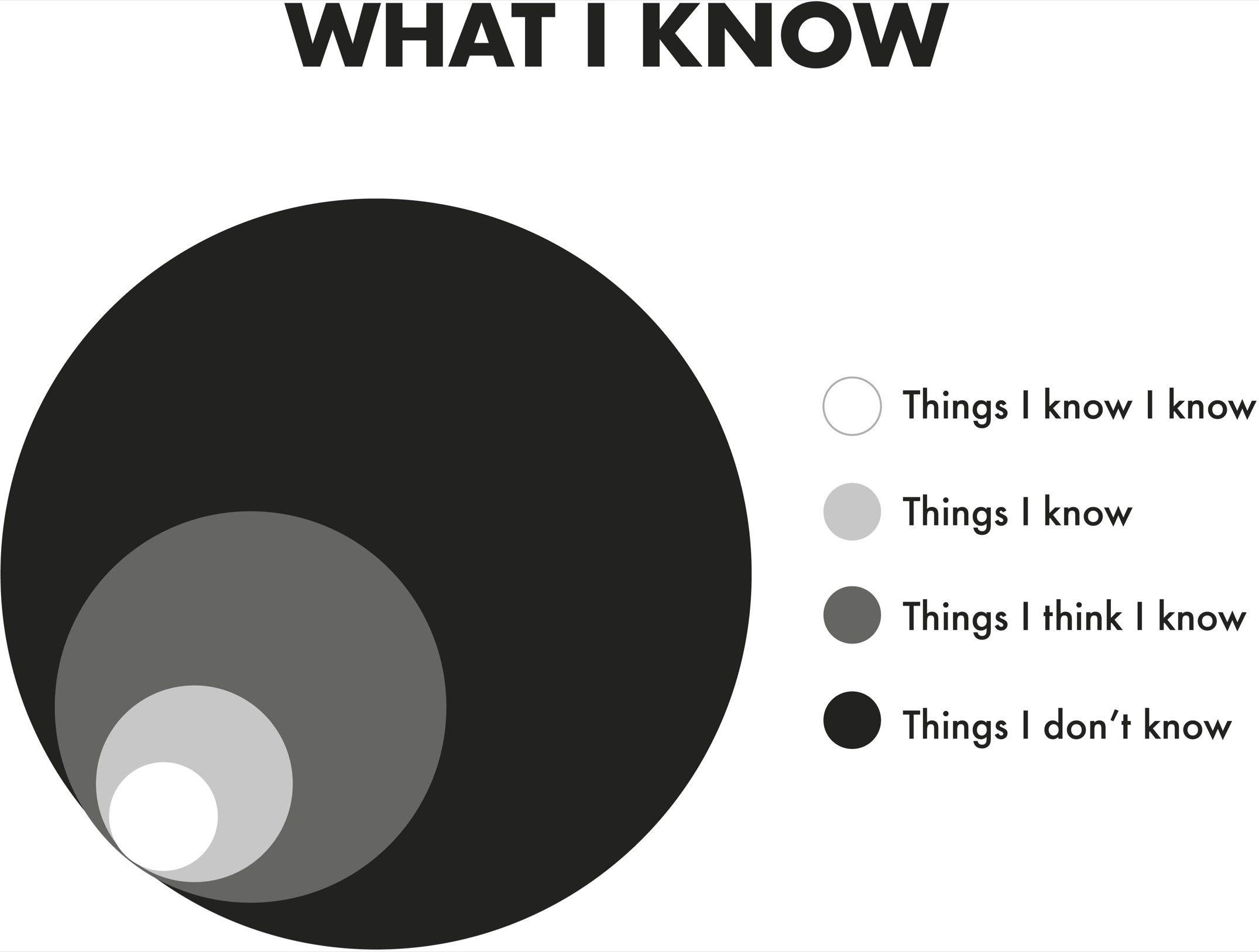

As I’ve studied the process of rethinking, I’ve found that it often unfolds in a cycle. It starts with intellectual humility—knowing what we don’t know. We should all be able to make a long list of areas where we’re ignorant. Mine include art, financial markets, fashion, chemistry, food, why British accents turn American in songs, and why it’s impossible to tickle yourself. Recognizing our shortcomings opens the door to doubt. As we question our current understanding, we become curious about what information we’re missing. That search leads us to new discoveries, which in turn maintain our humility by reinforcing how much we still have to learn. If knowledge is power, knowing what we don’t know is wisdom.

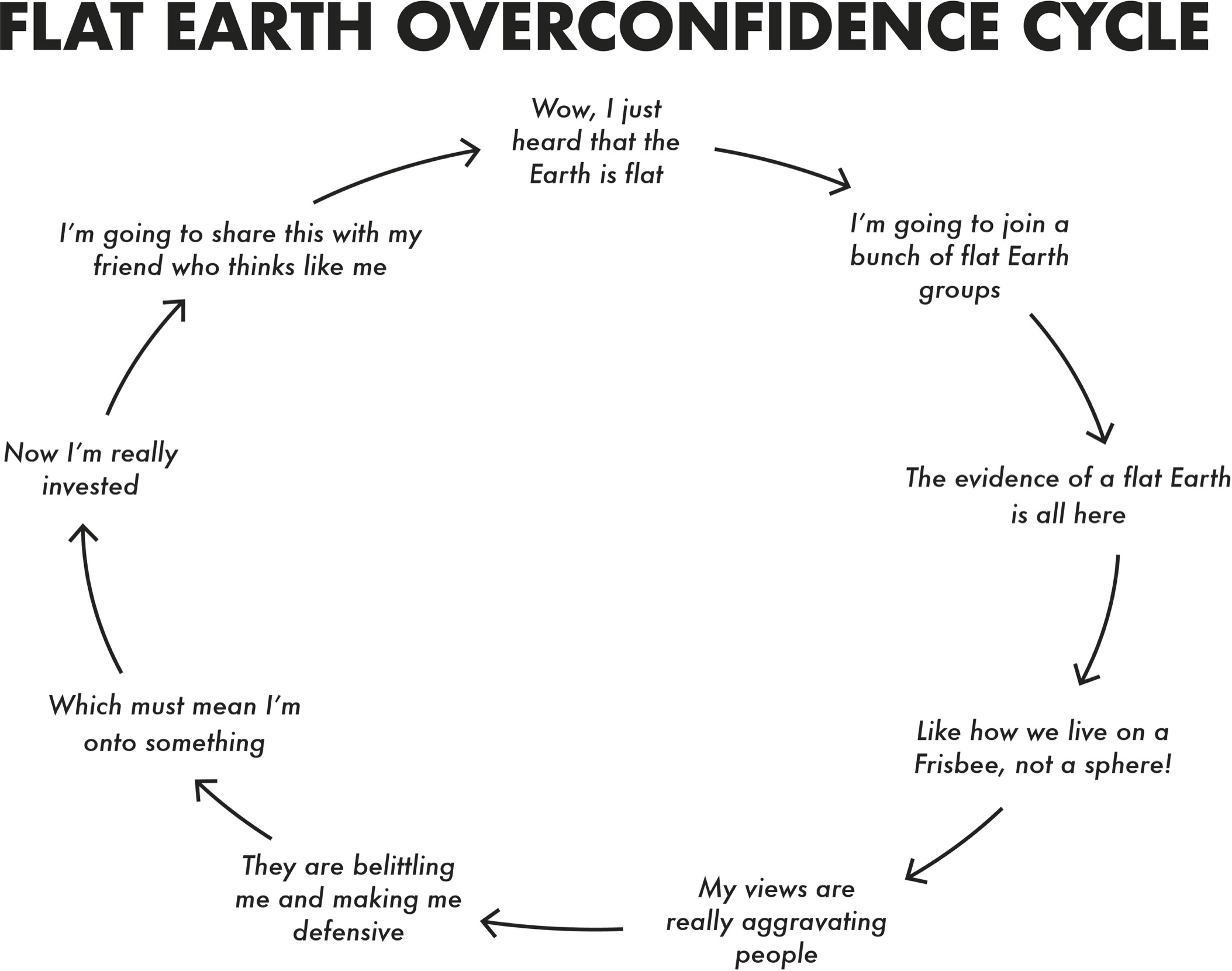

Scientific thinking favors humility over pride, doubt over certainty, curiosity over closure. When we shift out of scientist mode, the rethinking cycle breaks down, giving way to an overconfidence cycle. If we’re preaching, we can’t see gaps in our knowledge: we believe we’ve already found the truth. Pride breeds conviction rather than doubt, which makes us prosecutors: we might be laser-focused on changing other people’s minds, but ours is set in stone. That launches us into confirmation bias and desirability bias. We become politicians, ignoring or dismissing whatever doesn’t win the favor of our constituents—our parents, our bosses, or the high school classmates we’re still trying to impress. We become so busy putting on a show that the truth gets relegated to a backstage seat, and the resulting validation can make us arrogant. We fall victim to the fat-cat syndrome, resting on our laurels instead of pressure-testing our beliefs.

In the case of the BlackBerry, Mike Lazaridis was trapped in an overconfidence cycle. Taking pride in his successful invention gave him too much conviction. Nowhere was that clearer than in his preference for the keyboard over a touchscreen. It was a BlackBerry virtue he loved to preach—and an Apple vice he was quick to prosecute. As his company’s stock fell, Mike got caught up in confirmation bias and desirability bias, and fell victim to validation from fans. “It’s an iconic product,” he said of the BlackBerry in 2011. “It’s used by business, it’s used by leaders, it’s used by celebrities.” By 2012, the iPhone had captured a quarter of the global smartphone market, but Mike was still resisting the idea of typing on glass. “I don’t get this,” he said at a board meeting, pointing at a phone with a touchscreen. “The keyboard is one of the reasons they buy BlackBerrys.” Like a politician who campaigns only to his base, he focused on the keyboard taste of millions of existing users, neglecting the appeal of a touchscreen to billions of potential users. For the record, I still miss the keyboard, and I’m excited that it’s been licensed for an attempted comeback.

When Mike finally started reimagining the screen and software, some of his engineers didn’t want to abandon their past work. The failure to rethink was widespread. In 2011, an anonymous high-level employee inside the firm wrote an open letter to Mike and his co-CEO. “We laughed and said they are trying to put a computer on a phone, that it won’t work,” the letter read. “We are now 3–4 years too late.”

Our convictions can lock us in prisons of our own making. The solution is not to decelerate our thinking—it’s to accelerate our rethinking. That’s what resurrected Apple from the brink of bankruptcy to become the world’s most valuable company.

The legend of Apple’s renaissance revolves around the lone genius of Steve Jobs. It was his conviction and clarity of vision, the story goes, that gave birth to the iPhone. The reality is that he was dead-set against the mobile phone category. His employees had the vision for it, and it was their ability to change his mind that really revived Apple. Although Jobs knew how to “think different,” it was his team that did much of the rethinking.

In 2004, a small group of engineers, designers, and marketers pitched Jobs on turning their hit product, the iPod, into a phone. “Why the f@*& would we want to do that?” Jobs snapped. “That is the dumbest idea I’ve ever heard.” The team had recognized that mobile phones were starting to feature the ability to play music, but Jobs was worried about cannibalizing Apple’s thriving iPod business. He hated cell-phone companies and didn’t want to design products within the constraints that carriers imposed. When his calls dropped or the software crashed, he would sometimes smash his phone to pieces in frustration. In private meetings and on public stages, he swore over and over that he would never make a phone.

Yet some of Apple’s engineers were already doing research in that area. They worked together to persuade Jobs that he didn’t know what he didn’t know and urged him to doubt his convictions. It might be possible, they argued, to build a smartphone that everyone would love using—and to get the carriers to do it Apple’s way.

Research shows that when people are resistant to change, it helps to reinforce what will stay the same. Visions for change are more compelling when they include visions of continuity. Although our strategy might evolve, our identity will endure.

The engineers who worked closely with Jobs understood that this was one of the best ways to convince him. They assured him that they weren’t trying to turn Apple into a phone company. It would remain a computer company—they were just taking their existing products and adding a phone on the side. Apple was already putting twenty thousand songs in your pocket, so why wouldn’t they put everything else in your pocket, too? They needed to rethink their technology, but they would preserve their DNA. After six months of discussion, Jobs finally became curious enough to give the effort his blessing, and two different teams were off to the races in an experiment to test whether they should add calling capabilities to the iPod or turn the Mac into a miniature tablet that doubled as a phone. Just four years after it launched, the iPhone accounted for half of Apple’s revenue.

The iPhone represented a dramatic leap in rethinking the smartphone. Since its inception, smartphone innovation has been much more incremental, with different sizes and shapes, better cameras, and longer battery life, but few fundamental changes to the purpose or user experience. Looking back, if Mike Lazaridis had been more open to rethinking his pet product, would BlackBerry and Apple have compelled each other to reimagine the smartphone multiple times by now?

The curse of knowledge is that it closes our minds to what we don’t know. Good judgment depends on having the skill—and the will—to open our minds. I’m pretty confident that in life, rethinking is an increasingly important habit. Of course, I might be wrong. If I am, I’ll be quick to think again.

CHAPTER 2

The Armchair Quarterback and the Impostor

Finding the Sweet Spot of Confidence

Ignorance more frequently begets confidence than does knowledge.

—Charles Darwin

When Ursula Mercz was admitted to the clinic, she complained of headaches, back pain, and dizziness severe enough that she could no longer work. Over the following month her condition deteriorated. She struggled to locate the glass of water she put next to her bed. She couldn’t find the door to her room. She walked directly into her bed frame.

Ursula was a seamstress in her midfifties, and she hadn’t lost her dexterity: she was able to cut different shapes out of paper with scissors. She could easily point to her nose, mouth, arms, and legs, and had no difficulty describing her home and her pets. For an Austrian doctor named Gabriel Anton, she presented a curious case. When Anton put a red ribbon and scissors on the table in front of her, she couldn’t name them, even though “she confirmed, calmly and faithfully, that she could see the presented objects.”

She was clearly having problems with language production, which she acknowledged, and with spatial orientation. Yet something else was wrong: Ursula could no longer tell the difference between light and dark. When Anton held up an object and asked her to describe it, she didn’t even try to look at it but instead reached out to touch it. Tests showed that her eyesight was severely impaired. Oddly, when Anton asked her about the deficit, she insisted she could see. Eventually, when she lost her vision altogether, she remained completely unaware of it. “It was now extremely astonishing,” Anton wrote, “that the patient did not notice her massive and later complete loss of her ability to see . . . she was mentally blind to her blindness.”

It was the late 1800s, and Ursula wasn’t alone. A decade earlier a neuropathologist in Zurich had reported a case of a man who suffered an accident that left him blind but was unaware of it despite being “intellectually unimpaired.” Although he didn’t blink when a fist was placed in front of his face and couldn’t see the food on his plate, “he thought he was in a dark humid hole or cellar.”

Half a century later, a pair of doctors reported six cases of people who had gone blind but claimed otherwise. “One of the most striking features in the behavior of our patients was their inability to learn from their experiences,” the doctors wrote:

As they were not aware of their blindness when they walked about, they bumped into the furniture and walls but did not change their behavior. When confronted with their blindness in a rather pointed fashion, they would either deny any visual difficulty or remark: “It is so dark in the room; why don’t they turn the light on?”; “I forgot my glasses,” or “My vision is not too good, but I can see all right.” The patients would not accept any demonstration or assurance which would prove their blindness.

This phenomenon was first described by the Roman philosopher Seneca, who wrote of a woman who was blind but complained that she was simply in a dark room. It’s now accepted in the medical literature as Anton’s syndrome—a deficit of self-awareness in which a person is oblivious to a physical disability but otherwise doing fairly well cognitively. It’s known to be caused by damage to the occipital lobe of the brain. Yet I’ve come to believe that even when our brains are functioning normally, we’re all vulnerable to a version of Anton’s syndrome.

We all have blind spots in our knowledge and opinions. The bad news is that they can leave us blind to our blindness, which gives us false confidence in our judgment and prevents us from rethinking. The good news is that with the right kind of confidence, we can learn to see ourselves more clearly and update our views. In driver’s training we were taught to identify our visual blind spots and eliminate them with the help of mirrors and sensors. In life, since our minds don’t come equipped with those tools, we need to learn to recognize our cognitive blind spots and revise our thinking accordingly.

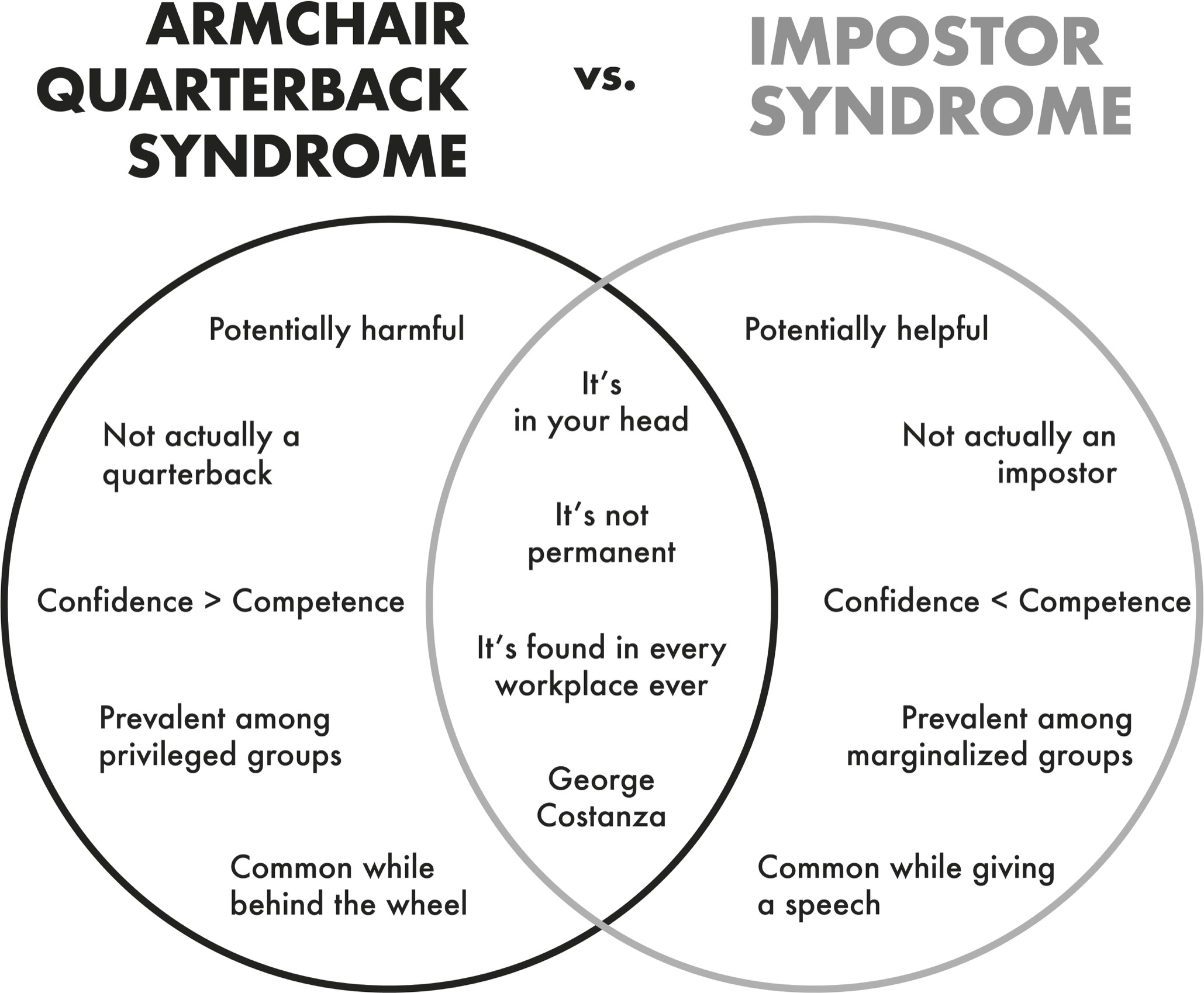

A TALE OF TWO SYNDROMES

On the first day of December 2015, Halla Tómasdóttir got a call she never expected. The roof of Halla’s house had just given way to a thick layer of snow and ice. As she watched water pouring down one of the walls, the friend on the other end of the line asked if Halla had seen the Facebook posts about her. Someone had started a petition for Halla to run for the presidency of Iceland.

Halla’s first thought was, Who am I to be president? She had helped start a university and then cofounded an investment firm in 2007. When the 2008 financial crisis rocked the world, Iceland was hit particularly hard; all three of its major private commercial banks defaulted and its currency collapsed. Relative to the size of its economy, the country faced the worst financial meltdown in human history, but Halla demonstrated her leadership skills by guiding her firm successfully through the crisis. Even with that accomplishment, she didn’t feel prepared for the presidency. She had no political background; she had never served in government or in any kind of public-sector role.

It wasn’t the first time Halla had felt like an impostor. When she was eight, her piano teacher placed her on a fast track and frequently asked her to play in concerts, but she never felt she was worthy of the honor—and so, before every concert, she felt sick. Although the stakes were much higher now, the self-doubt felt familiar. “I had a massive pit in my stomach, like the piano recital but much bigger,” Halla told me. “It’s the worst case of adult impostor syndrome I’ve ever had.” For months, she struggled with the idea of becoming a candidate. As her friends and family encouraged her to recognize that she had some relevant skills, Halla was still convinced that she lacked the necessary experience and confidence. She tried to persuade other women to run—one of whom ended up ascending to a different office, as the prime minister of Iceland.

Yet the petition didn’t go away, and Halla’s friends, family, and colleagues didn’t stop urging her on. Eventually, she found herself asking, Who am I not to serve? She ultimately decided to go for it, but the odds were heavily stacked against her. She was running as an unknown independent candidate in a field of more than twenty contenders. One of her competitors was particularly powerful—and particularly dangerous.

When an economist was asked to name the three people most responsible for Iceland’s bankruptcy, she nominated Davíð Oddsson for all three spots. As Iceland’s prime minister from 1991 to 2004, Oddsson put the country’s banks in jeopardy by privatizing them. Then, as governor of Iceland’s central bank from 2005 to 2009, he allowed the banks’ balance sheets to balloon to more than ten times the national GDP. When the people protested his mismanagement, Oddsson refused to resign and had to be forced out by Parliament. Time magazine later identified him as one of the twenty-five people to blame for the financial crisis worldwide. Nevertheless, in 2016 Oddsson announced his candidacy for the presidency of Iceland: “My experience and knowledge, which is considerable, could go well with this office.”

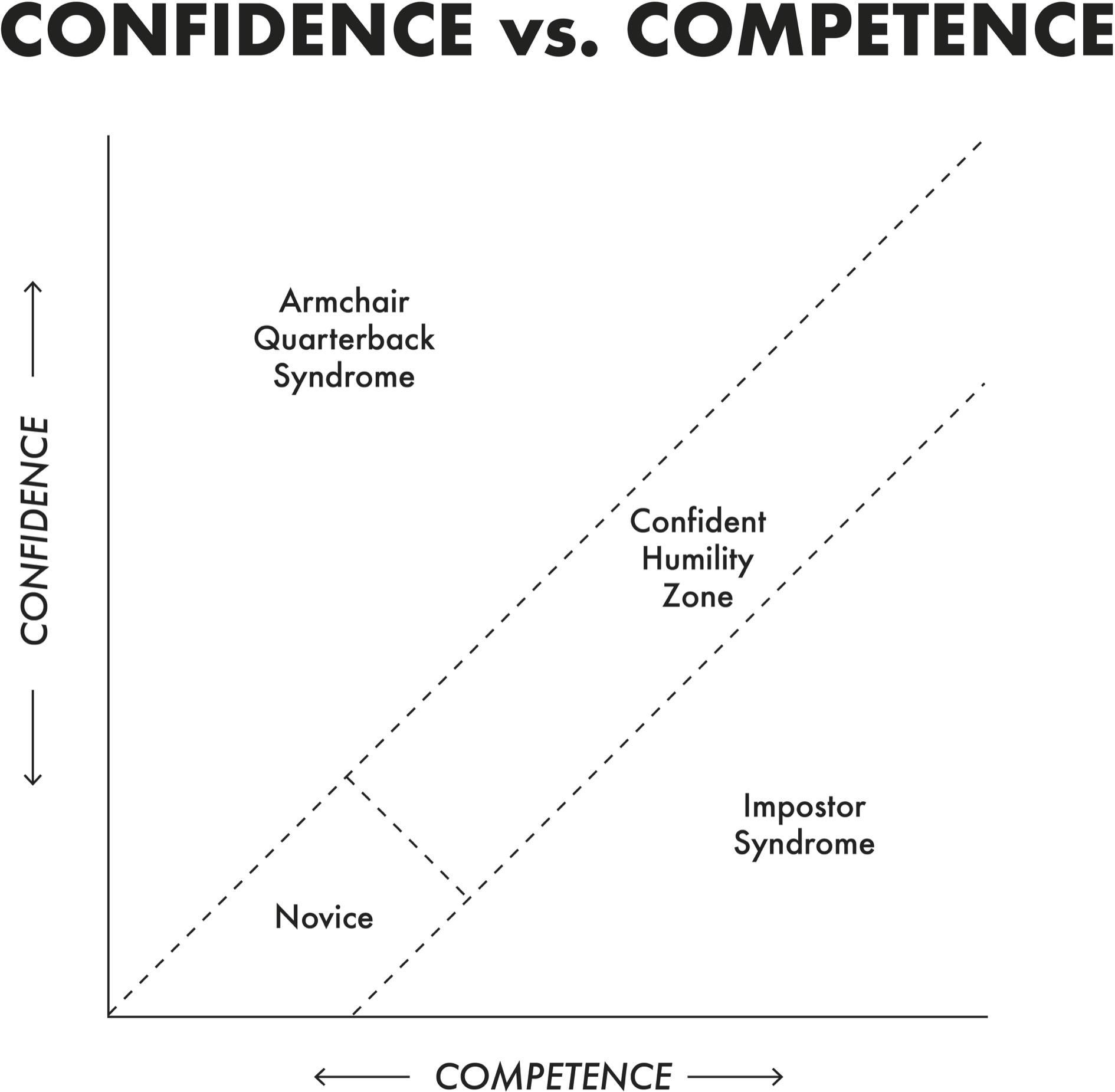

In theory, confidence and competence go hand in hand. In practice, they often diverge. You can see it when people rate their own leadership skills and are also evaluated by their colleagues, supervisors, or subordinates. In a meta-analysis of ninety-five studies involving over a hundred thousand people, women typically underestimated their leadership skills, while men overestimated their skills.

You’ve probably met some football fans who are convinced they know more than the coaches on the sidelines. That’s the armchair quarterback syndrome, where confidence exceeds competence. Even after calling financial plays that destroyed an economy, Davíð Oddsson still refused to acknowledge that he wasn’t qualified to coach—let alone quarterback. He was blind to his weaknesses.

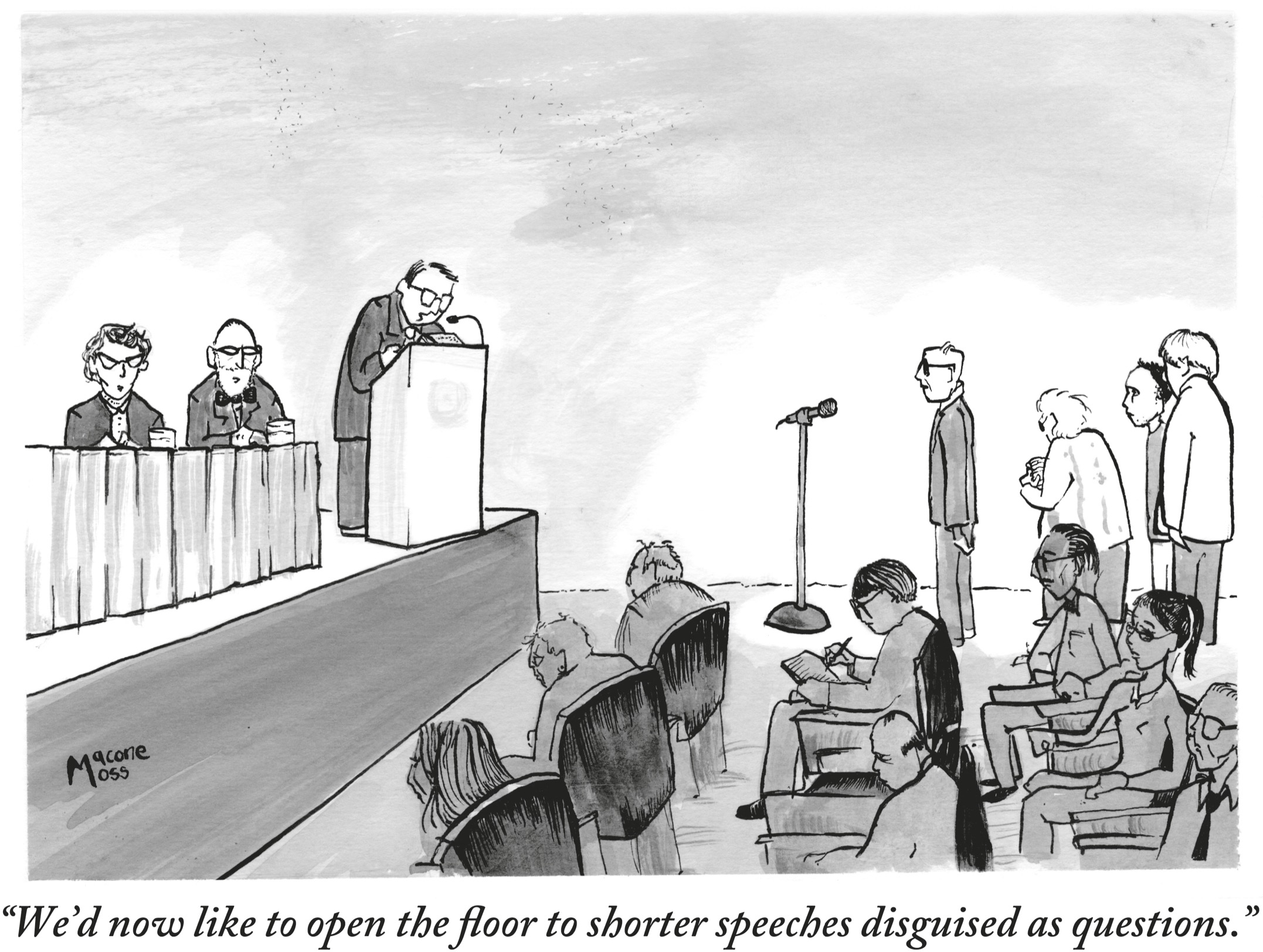

Jason Adam Katzenstein/The New Yorker Collection/The Cartoon Bank; © Condé Nast

The opposite of armchair quarterback syndrome is impostor syndrome, where competence exceeds confidence. Think of the people you know who believe that they don’t deserve their success. They’re genuinely unaware of just how intelligent, creative, or charming they are, and no matter how hard you try, you can’t get them to rethink their views. Even after an online petition proved that many others had confidence in her, Halla Tómasdóttir still wasn’t convinced she was qualified to lead her country. She was blind to her strengths.

Although they had opposite blind spots, being on the extremes of confidence left both candidates reluctant to rethink their plans. The ideal level of confidence probably lies somewhere between being an armchair quarterback and an impostor. How do we find that sweet spot?

THE IGNORANCE OF ARROGANCE

One of my favorite accolades is a satirical award for research that’s as entertaining as it is enlightening. It’s called the Ig™ Nobel Prize, and it’s handed out by actual Nobel laureates. One autumn in college, I raced to the campus theater to watch the ceremony along with over a thousand fellow nerds. The winners included a pair of physicists who created a magnetic field to levitate a live frog, a trio of chemists who discovered that the biochemistry of romantic love has something in common with obsessive-compulsive disorder, and a computer scientist who invented PawSense—software that detects cat paws on a keyboard and makes an annoying noise to deter them. Unclear whether it also worked with dogs.

Several of the awards made me laugh, but the honorees who made me think the most were two psychologists, David Dunning and Justin Kruger. They had just published a “modest report” on skill and confidence that would soon become famous. They found that in many situations, those who can’t . . . don’t know they can’t. According to what’s now known as the Dunning-Kruger effect, it’s when we lack competence that we’re most likely to be brimming with overconfidence.

In the original Dunning-Kruger studies, people who scored the lowest on tests of logical reasoning, grammar, and sense of humor had the most inflated opinions of their skills. On average, they believed they did better than 62 percent of their peers, but in reality outperformed only 12 percent of them. The less intelligent we are in a particular domain, the more we seem to overestimate our actual intelligence in that domain. In a group of football fans, the one who knows the least is the most likely to be the armchair quarterback, prosecuting the coach for calling the wrong play and preaching about a better playbook.

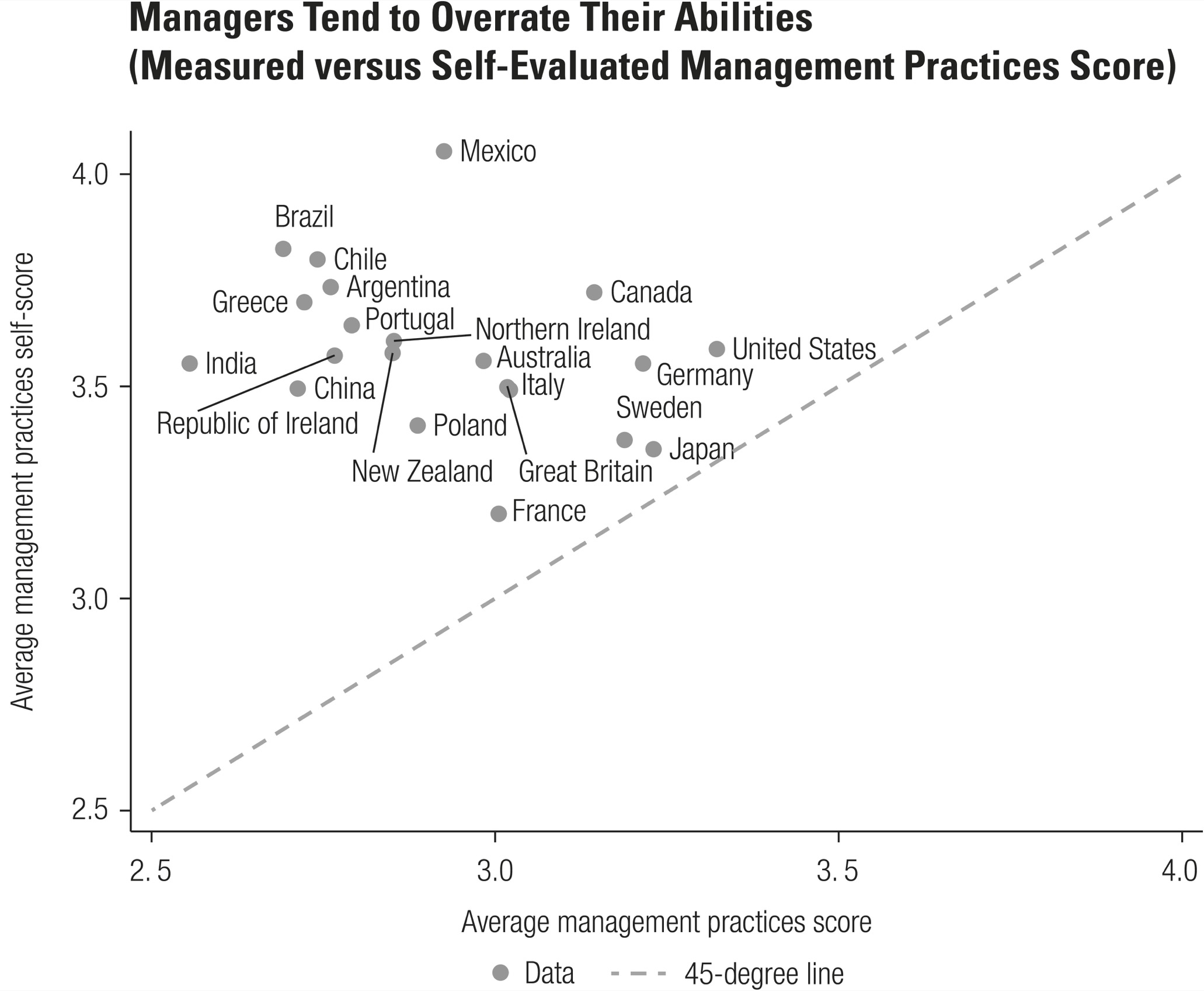

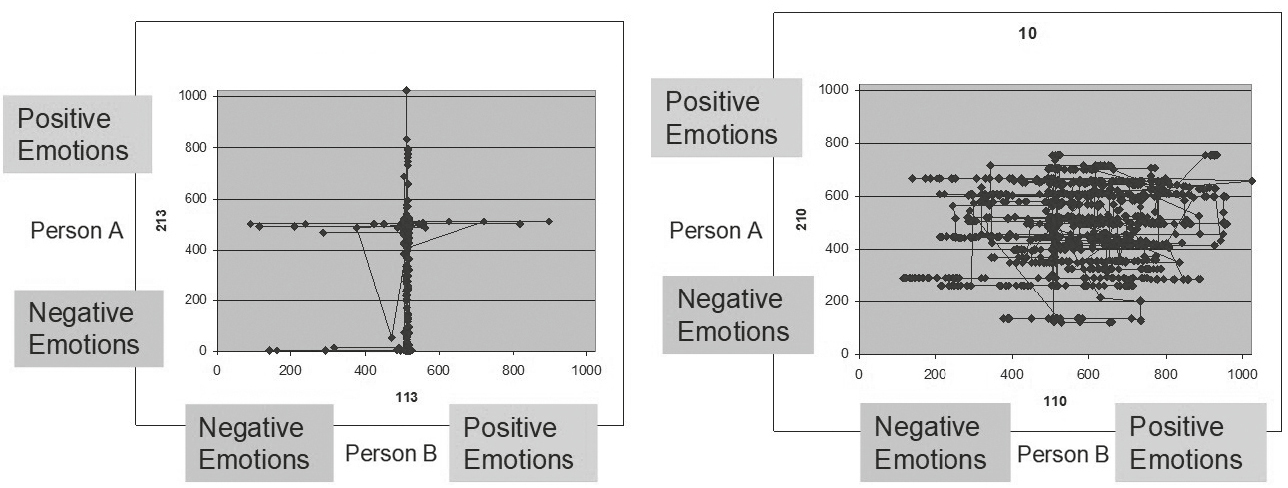

This tendency matters because it compromises self-awareness, and it trips us up across all kinds of settings. Look what happened when economists evaluated the operations and management practices of thousands of companies across a wide range of industries and countries, and compared their assessments with managers’ self-ratings:

Sources: World Management Survey; Bloom and Van Reenen 2007; and Maloney 2017b.

In this graph, if self-assessments of performance matched actual performance, every country would be on the dotted line. Overconfidence existed in every culture, and it was most rampant where management was the poorest.*

Of course, management skills can be hard to judge objectively. Knowledge should be easier—you were tested on yours throughout school. Compared to most people, how much do you think you know about each of the following topics—more, less, or the same?

- Why English became the official language of the United States

- Why women were burned at the stake in Salem

- What job Walt Disney had before he drew Mickey Mouse

- On which spaceflight humans first laid eyes on the Great Wall of China

- Why eating candy affects how kids behave

One of my biggest pet peeves is feigned knowledge, where people pretend to know things they don’t. It bothers me so much that at this very moment I’m writing an entire book about it. In a series of studies, people rated whether they knew more or less than most people about a range of topics like these, and then took a quiz to test their actual knowledge. The more superior participants thought their knowledge was, the more they overestimated themselves—and the less interested they were in learning and updating. If you think you know more about history or science than most people, chances are you know less than you think. As Dunning quips, “The first rule of the Dunning-Kruger club is you don’t know you’re a member of the Dunning-Kruger club.”*

On the questions above, if you felt you knew anything at all, think again. America has no official language, suspected witches were hanged in Salem but not burned, Walt Disney didn’t draw Mickey Mouse (it was the work of an animator named Ub Iwerks), you can’t actually see the Great Wall of China from space, and the average effect of sugar on children’s behavior is zero.

Although the Dunning-Kruger effect is often amusing in everyday life, it was no laughing matter in Iceland. Despite serving as governor of the central bank, Davíð Oddsson had no training in finance or economics. Before entering politics, he had created a radio comedy show, written plays and short stories, gone to law school, and worked as a journalist. During his reign as Iceland’s prime minister, Oddsson was so dismissive of experts that he disbanded the National Economic Institute. To force him out of his post at the central bank, Parliament had to pass an unconventional law: any governor would have to have at least a master’s degree in economics. That didn’t stop Oddsson from running for president a few years later. He seemed utterly blind to his blindness: he didn’t know what he didn’t know.

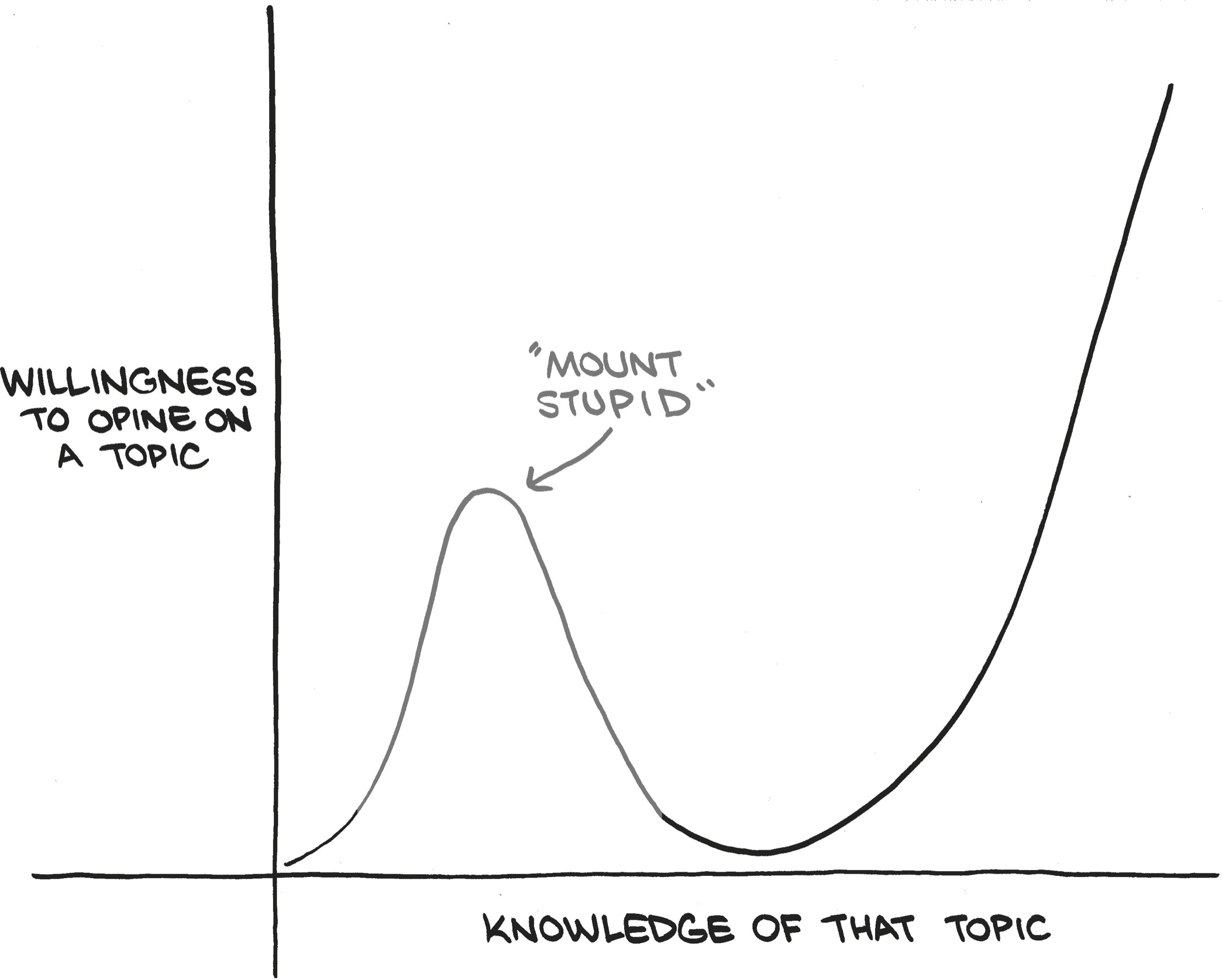

STRANDED AT THE SUMMIT OF MOUNT STUPID

The problem with armchair quarterback syndrome is that it stands in the way of rethinking. If we’re certain that we know something, we have no reason to look for gaps and flaws in our knowledge—let alone fill or correct them. In one study, the people who scored the lowest on an emotional intelligence test weren’t just the most likely to overestimate their skills. They were also the most likely to dismiss their scores as inaccurate or irrelevant—and the least likely to invest in coaching or self-improvement.

Yes, some of this comes down to our fragile egos. We’re driven to deny our weaknesses when we want to see ourselves in a positive light or paint a glowing picture of ourselves to others. A classic case is the crooked politician who claims to crusade against corruption but is actually motivated by willful blindness or social deception. Yet motivation is only part of the story.*

There’s a less obvious force that clouds our vision of our abilities: a deficit in metacognitive skill, the ability to think about our thinking. Lacking competence can leave us blind to our own incompetence. If you’re a tech entrepreneur and you’re uninformed about education systems, you can feel certain that your master plan will fix them. If you’re socially awkward and you’re missing some insight on social graces, you can strut around believing you’re James Bond. In high school, a friend told me I didn’t have a sense of humor. What made her think that? “You don’t laugh at all my jokes.” I’m hilarious . . . said no funny person ever. I’ll leave it to you to decide who lacked the sense of humor.

When we lack the knowledge and skills to achieve excellence, we sometimes lack the knowledge and skills to judge excellence. This insight should immediately put your favorite confident ignoramuses in their place. Before we poke fun at them, though, it’s worth remembering that we all have moments when we are them.

We’re all novices at many things, but we’re not always blind to that fact. We tend to overestimate ourselves on desirable skills, like the ability to carry on a riveting conversation. We’re also prone to overconfidence in situations where it’s easy to confuse experience for expertise, like driving, typing, trivia, and managing emotions. Yet we underestimate ourselves when we can easily recognize that we lack experience—like painting, driving a race car, and rapidly reciting the alphabet backward. Absolute beginners rarely fall into the Dunning-Kruger trap. If you don’t know a thing about football, you probably don’t walk around believing you know more than the coach.

It’s when we progress from novice to amateur that we become overconfident. A bit of knowledge can be a dangerous thing. In too many domains of our lives, we never gain enough expertise to question our opinions or discover what we don’t know. We have just enough information to feel self-assured about making pronouncements and passing judgment, failing to realize that we’ve climbed to the top of Mount Stupid without making it over to the other side.

You can see this phenomenon in one of Dunning’s experiments that involved people playing the role of doctors in a simulated zombie apocalypse. When they’ve seen only a handful of injured victims, their perceived and actual skills match. Unfortunately, as they gain experience, their confidence climbs faster than their competence, and confidence remains higher than competence from that point on.

This might be one of the reasons that patient mortality rates in hospitals seem to spike in July, when new residents take over. It’s not their lack of skill alone that proves hazardous; it’s their overestimation of that skill.

Advancing from novice to amateur can break the rethinking cycle. As we gain experience, we lose some of our humility. We take pride in making rapid progress, which promotes a false sense of mastery. That jump-starts an overconfidence cycle, preventing us from doubting what we know and being curious about what we don’t. We get trapped in a beginner’s bubble of flawed assumptions, where we’re ignorant of our own ignorance.

That’s what happened in Iceland to Davíð Oddsson, whose arrogance was reinforced by cronies and unchecked by critics. He was known to surround himself with “fiercely loyal henchmen” from school and bridge matches, and to keep a checklist of friends and enemies. Months before the meltdown, Oddsson refused help from England’s central bank. Then, at the height of the crisis, he brashly declared in public that he had no intention of covering the debts of Iceland’s banks. Two years later an independent truth commission appointed by Parliament charged him with gross negligence. Oddsson’s downfall, according to one journalist who chronicled Iceland’s financial collapse, was “arrogance, his absolute conviction that he knew what was best for the island.”

What he lacked is a crucial nutrient for the mind: humility. The antidote to getting stuck on Mount Stupid is taking a regular dose of it. “Arrogance is ignorance plus conviction,” blogger Tim Urban explains. “While humility is a permeable filter that absorbs life experience and converts it into knowledge and wisdom, arrogance is a rubber shield that life experience simply bounces off of.”

WHAT GOLDILOCKS GOT WRONG

Many people picture confidence as a seesaw. Gain too much confidence, and we tip toward arrogance. Lose too much confidence, and we become meek. This is our fear with humility: that we’ll end up having a low opinion of ourselves. We want to keep the seesaw balanced, so we go into Goldilocks mode and look for the amount of confidence that’s just right. Recently, though, I learned that this is the wrong approach.

Humility is often misunderstood. It’s not a matter of having low self-confidence. One of the Latin roots of humility means “from the earth.” It’s about being grounded—recognizing that we’re flawed and fallible.

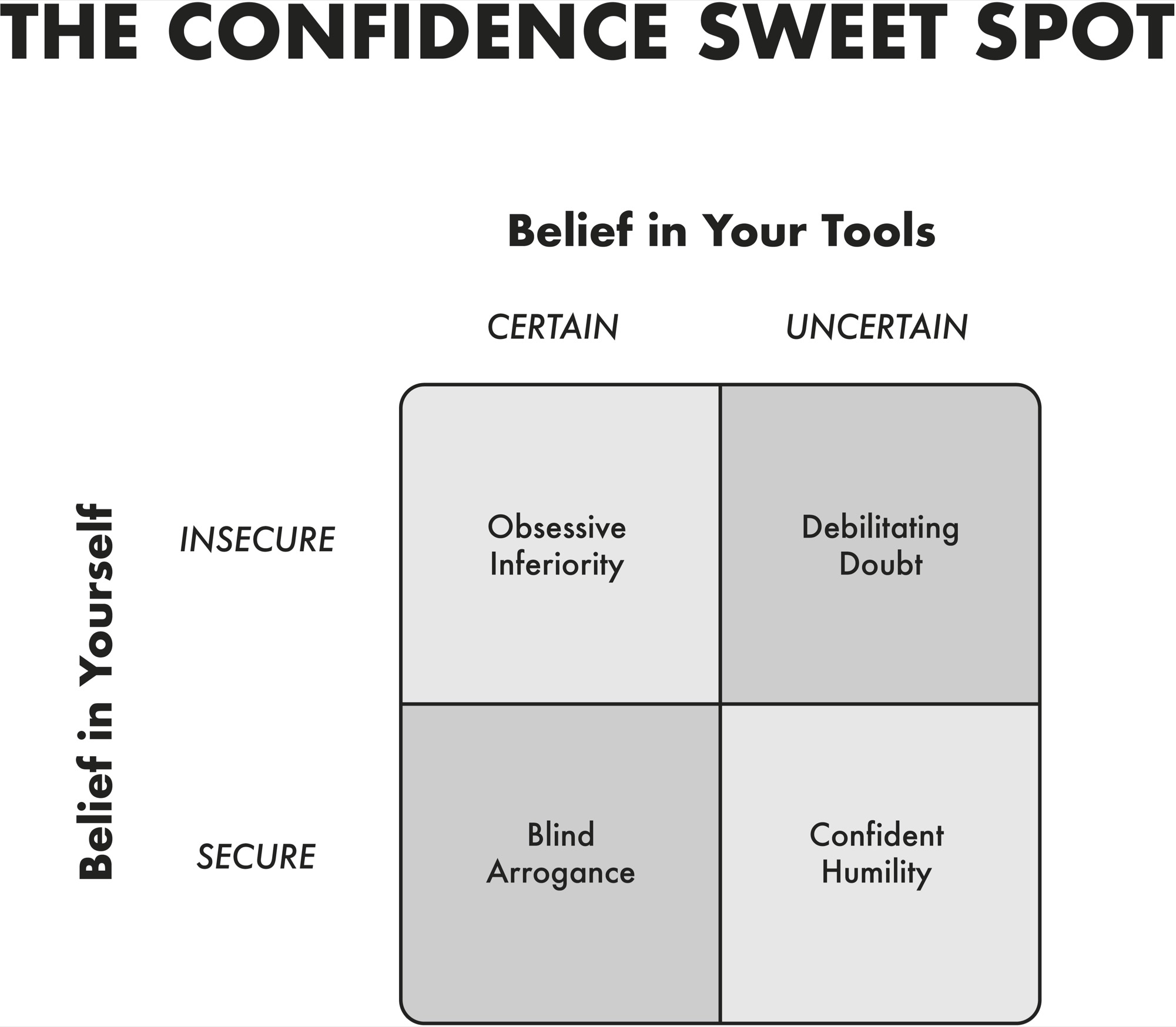

Confidence is a measure of how much you believe in yourself. Evidence shows that’s distinct from how much you believe in your methods. You can be confident in your ability to achieve a goal in the future while maintaining the humility to question whether you have the right tools in the present. That’s the sweet spot of confidence.

We become blinded by arrogance when we’re utterly convinced of our strengths and our strategies. We get paralyzed by doubt when we lack conviction in both. We can be consumed by an inferiority complex when we know the right method but feel uncertain about our ability to execute it. What we want to attain is confident humility: having faith in our capability while appreciating that we may not have the right solution or even be addressing the right problem. That gives us enough doubt to reexamine our old knowledge and enough confidence to pursue new insights.

When Spanx founder Sara Blakely had the idea for footless pantyhose, she believed in her ability to make the idea a reality, but she was full of doubt about her current tools. Her day job was selling fax machines door-to-door, and she was aware that she didn’t know anything about fashion, retail, or manufacturing. When she was designing the prototype, she spent a week driving around to hosiery mills to ask them for help. When she couldn’t afford a law firm to apply for a patent, she read a book on the topic and filled out the application herself. Her doubt wasn’t debilitating—she was confident she could overcome the challenges in front of her. Her confidence wasn’t in her existing knowledge—it was in her capacity to learn.

Confident humility can be taught. In one experiment, when students read a short article about the benefits of admitting what we don’t know rather than being certain about it, their odds of seeking extra help in an area of weakness spiked from 65 to 85 percent. They were also more likely to explore opposing political views to try to learn from the other side.

Confident humility doesn’t just open our minds to rethinking—it improves the quality of our rethinking. In college and graduate school, students who are willing to revise their beliefs get higher grades than their peers. In high school, students who admit when they don’t know something are rated by teachers as learning more effectively and by peers as contributing more to their teams. At the end of the academic year, they have significantly higher math grades than their more self-assured peers. Instead of just assuming they’ve mastered the material, they quiz themselves to test their understanding.

When adults have the confidence to acknowledge what they don’t know, they pay more attention to how strong evidence is and spend more time reading material that contradicts their opinions. In rigorous studies of leadership effectiveness across the United States and China, the most productive and innovative teams aren’t run by leaders who are simply confident or simply humble. The most effective leaders score high in both confidence and humility. Although they have faith in their strengths, they’re also keenly aware of their weaknesses. They know they need to recognize and transcend their limits if they want to push the limits of greatness.

If we care about accuracy, we can’t afford to have blind spots. To get an accurate picture of our knowledge and skills, it can help to assess ourselves like scientists looking through a microscope. But one of my newly formed beliefs is that we’re sometimes better off underestimating ourselves.

THE BENEFITS OF DOUBT

Just a month and a half before Iceland’s presidential election, Halla Tómasdóttir was polling at only 1 percent support. To focus on the most promising candidates, the network airing the first televised debate announced that they wouldn’t feature anyone with less than 2.5 percent of the vote. On the day of the debate, Halla ended up barely squeaking through. Over the following month her popularity skyrocketed. She wasn’t just a viable candidate; she was in the final four.

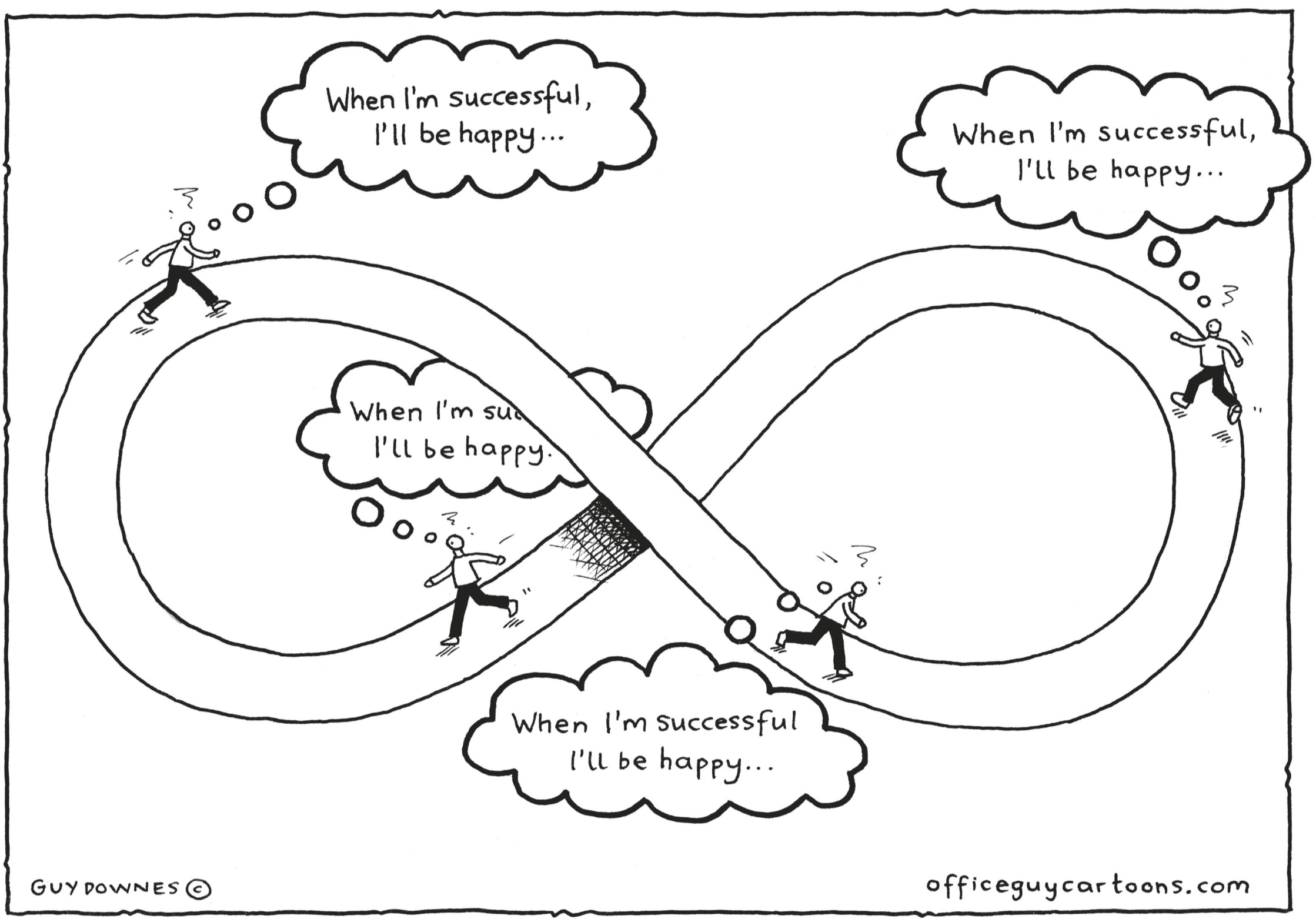

A few years later, when I invited her to speak to my class, Halla mentioned that the psychological fuel that propelled her meteoric rise was none other than impostor syndrome. Feeling like an impostor is typically viewed as a bad thing, and for good reason—a chronic sense of being unworthy can breed misery, crush motivation, and hold us back from pursuing our ambitions.

From time to time, though, a less crippling sense of doubt waltzes into many of our minds. Some surveys suggest that more than half the people you know have felt like impostors at some point in their careers. It’s thought to be especially common among women and marginalized groups. Strangely, it also seems to be particularly pronounced among high achievers.

I’ve taught students who earned patents before they could drink and became chess masters before they could drive, but these same individuals still wrestle with insecurity and constantly question their abilities. The standard explanation for their accomplishments is that they succeed in spite of their doubts, but what if their success is actually driven in part by those doubts?

To find out, Basima Tewfik—then a doctoral student at Wharton, now an MIT professor—recruited a group of medical students who were preparing to begin their clinical rotations. She had them interact for more than half an hour with actors who had been trained to play the role of patients presenting symptoms of various diseases. Basima observed how the medical students treated the patients—and also tracked whether they made the right diagnoses.

A week earlier the students had answered a survey about how often they entertained impostor thoughts like “I am not as qualified as others think I am” and “People important to me think I am more capable than I think I am.” Those who self-identified as impostors didn’t do any worse in their diagnoses, and they did significantly better when it came to bedside manner: they were rated as more empathetic, respectful, and professional, as well as more effective in asking questions and sharing information. In another study, Basima found a similar pattern with investment professionals: the more often they felt like impostors, the higher their performance reviews from their supervisors four months later.

This evidence is new, and we still have a lot to learn about when impostor syndrome is beneficial versus when it’s detrimental. Still, it leaves me wondering if we’ve been misjudging impostor syndrome by seeing it solely as a disorder.

When our impostor fears crop up, the usual advice is to ignore them—give ourselves the benefit of the doubt. Instead, we might be better off embracing those fears, because they can give us three benefits of doubt.

The first upside of feeling like an impostor is that it can motivate us to work harder. It’s probably not helpful when we’re deciding whether to start a race, but once we’ve stepped up to the starting line, it gives us the drive to keep running to the end so that we can earn our place among the finalists.* In some of my own research across call centers, military and government teams, and nonprofits, I’ve found that confidence can make us complacent. If we never worry about letting other people down, we’re more likely to actually do so. When we feel like impostors, we think we have something to prove. Impostors may be the last to jump in, but they may also be the last to bail out.

Second, impostor thoughts can motivate us to work smarter. When we don’t believe we’re going to win, we have nothing to lose by rethinking our strategy. Remember that total beginners don’t fall victim to the Dunning-Kruger effect. Feeling like an impostor puts us in a beginner’s mindset, leading us to question assumptions that others have taken for granted.

Third, feeling like an impostor can make us better learners. Having some doubts about our knowledge and skills takes us off a pedestal, encouraging us to seek out insights from others. As psychologist Elizabeth Krumrei Mancuso and her colleagues write, “Learning requires the humility to realize one has something to learn.”

Some evidence on this dynamic comes from a study by another of our former doctoral students at Wharton, Danielle Tussing—now a professor at SUNY Buffalo. Danielle gathered her data in a hospital where the leadership role of charge nurse is rotated between shifts, which means that nurses end up at the helm even if they have doubts about their capabilities. Nurses who felt some hesitations about assuming the mantle were actually more effective leaders, in part because they were more likely to seek out second opinions from colleagues. They saw themselves on a level playing field, and they knew that much of what they lacked in experience and expertise they could make up by listening. There’s no clearer case of that than Halla Tómasdóttir.

THE LEAGUE OF EXTRAORDINARY HUMILITY