A Thousand Brains

Foreword by Richard Dawkins

Don’t read this book at bedtime. Not that it’s frightening. It won’t give you nightmares. But it is so exhilarating, so stimulating, it’ll turn your mind into a whirling maelstrom of excitingly provocative ideas—you’ll want to rush out and tell someone rather than go to sleep. It is a victim of this maelstrom who writes the foreword, and I expect it’ll show.

Charles Darwin was unusual among scientists in having the means to work outside universities and without government research grants. Jeff Hawkins might not relish being called the Silicon Valley equivalent of a gentleman scientist but—well, you get the parallel. Darwin’s powerful idea was too revolutionary to catch on when expressed as a brief article, and the Darwin-Wallace joint papers of 1858 were all but ignored. As Darwin himself said, the idea needed to be expressed at book length. Sure enough, it was his great book that shook Victorian foundations, a year later. Book-length treatment, too, is needed for Jeff Hawkins’s Thousand Brains Theory. And for his notion of reference frames—“The very act of thinking is a form of movement”—bull’s-eye! These two ideas are each profound enough to fill a book. But that’s not all.

T. H. Huxley famously said, on closing On the Origin of Species, “How extremely stupid of me not to have thought of that.” I’m not suggesting that brain scientists will necessarily say the same when they close this book. It is a book of many exciting ideas, rather than one huge idea like Darwin’s.

I suspect that not just T. H. Huxley but his three brilliant grandsons would have loved it: Andrew because he discovered how the nerve impulse works (Hodgkin and Huxley are the Watson and Crick of the nervous system); Aldous because of his visionary and poetic voyages to the mind’s furthest reaches; and Julian because he wrote this poem, extolling the brain’s capacity to construct a model of reality, a microcosm of the universe:

The world of things entered your infant mind

To populate that crystal cabinet.

Within its walls the strangest partners met,

And things turned thoughts did propagate their kind.

For, once within, corporeal fact could find

A spirit. Fact and you in mutual debt

Built there your little microcosm—which yet

Had hugest tasks to its small self assigned.

Dead men can live there, and converse with stars:

Equator speaks with pole, and night with day;

Spirit dissolves the world’s material bars—

A million isolations burn away.

The Universe can live and work and plan,

At last made God within the mind of man.

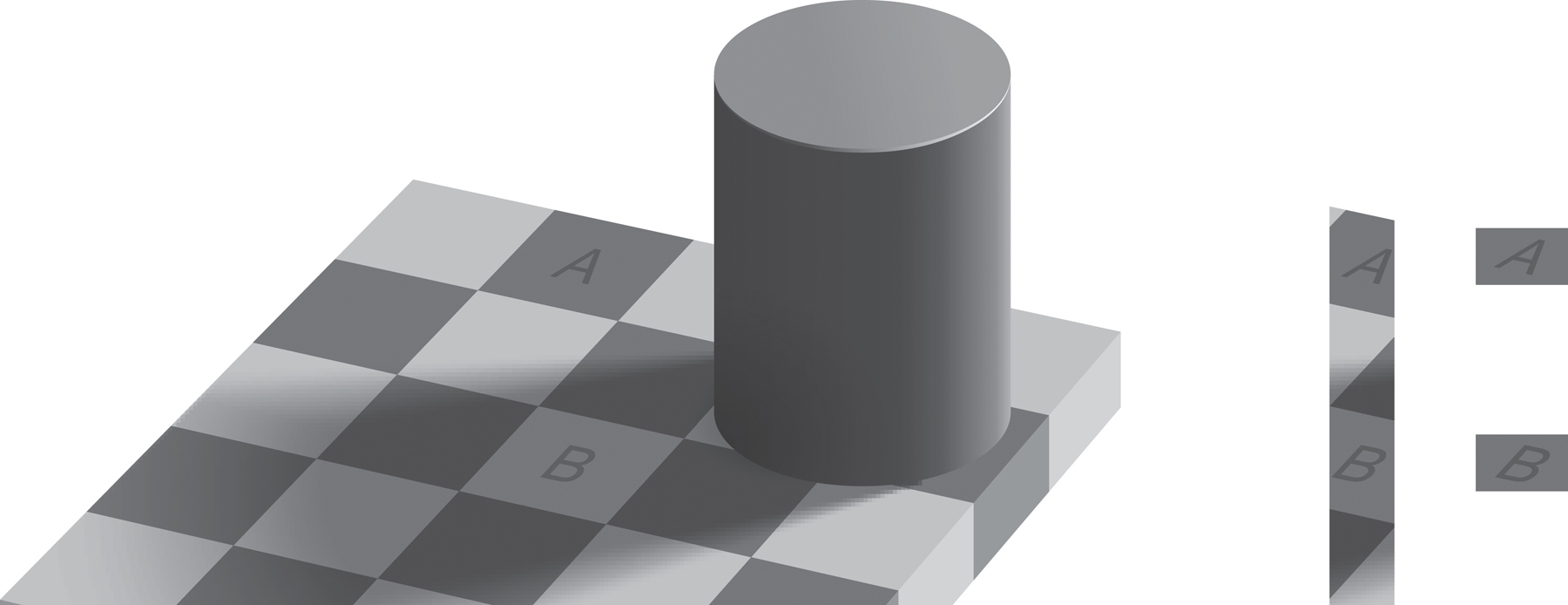

The brain sits in darkness, apprehending the outside world only through a hailstorm of Andrew Huxley’s nerve impulses. A nerve impulse from the eye is no different from one from the ear or the big toe. It’s where they end up in the brain that sorts them out. Jeff Hawkins is not the first scientist or philosopher to suggest that the reality we perceive is a constructed reality, a model, updated and informed by bulletins streaming in from the senses. But Hawkins is, I think, the first to give eloquent space to the idea that there is not one such model but thousands, one in each of the many neatly stacked columns that constitute the brain’s cortex. There are about 150,000 of these columns and they are the stars of the first section of the book, along with what he calls “frames of reference.” Hawkins’s thesis about both of these is provocative, and it’ll be interesting to see how it is received by other brain scientists: well, I suspect. Not the least fascinating of his ideas here is that the cortical columns, in their world-modeling activities, work semi-autonomously. What “we” perceive is a kind of democratic consensus from among them.

Democracy in the brain? Consensus, and even dispute? What an amazing idea. It is a major theme of the book. We human mammals are the victims of a recurrent dispute: a tussle between the old reptilian brain, which unconsciously runs the survival machine, and the mammalian neocortex sitting in a kind of driver’s seat atop it. This new mammalian brain—the cerebral cortex—thinks. It is the seat of consciousness. It is aware of past, present, and future, and it sends instructions to the old brain, which executes them.

The old brain, schooled by natural selection over millions of years when sugar was scarce and valuable for survival, says, “Cake. Want cake. Mmmm cake. Gimme.” The new brain, schooled by books and doctors over mere tens of years when sugar was over-plentiful, says, “No, no. Not cake. Mustn’t. Please don’t eat that cake.” Old brain says, “Pain, pain, horrible pain, stop the pain immediately.” New brain says, “No, no, bear the torture, don’t betray your country by surrendering to it. Loyalty to country and comrades comes before even your own life.”

The conflict between the old reptilian and the new mammalian brain furnishes the answer to such riddles as “Why does pain have to be so damn painful?” What, after all, is pain for? Pain is a proxy for death. It is a warning to the brain, “Don’t do that again: don’t tease a snake, pick up a hot ember, jump from a great height. This time it only hurt; next time it might kill you.” But now a designing engineer might say what we need here is the equivalent of a painless flag in the brain. When the flag shoots up, don’t repeat whatever you just did. But instead of the engineer’s easy and painless flag, what we actually get is pain—often excruciating, unbearable pain. Why? What’s wrong with the sensible flag?

The answer probably lies in the disputatious nature of the brain’s decision-making processes: the tussle between old brain and new brain. It being too easy for the new brain to overrule the vote of the old brain, the painless flag system wouldn’t work. Neither would torture.

The new brain would feel free to ignore my hypothetical flag and endure any number of bee stings or sprained ankles or torturers’ thumbscrews if, for some reason, it “wanted to.” The old brain, which really “cares” about surviving to pass on the genes, might “protest” in vain. Maybe natural selection, in the interests of survival, has ensured “victory” for the old brain by making pain so damn painful that the new brain cannot overrule it. As another example, if the old brain were “aware” of the betrayal of sex’s Darwinian purpose, the act of donning a condom would be unbearably painful.

Hawkins is on the side of the majority of informed scientists and philosophers who will have no truck with dualism: there is no ghost in the machine, no spooky soul so detached from hardware that it survives the hardware’s death, no Cartesian theatre (Dan Dennett’s term) where a colour screen displays a movie of the world to a watching self. Instead, Hawkins proposes multiple models of the world, constructed microcosms, informed and adjusted by the rain of nerve impulses pouring in from the senses. By the way, Hawkins doesn’t totally rule out the long-term future possibility of escaping death by uploading your brain to a computer, but he doesn’t think it would be much fun.

Among the more important of the brain’s models are models of the body itself, coping, as they must, with how the body’s own movement changes our perspective on the world outside the prison wall of the skull. And this is relevant to the major preoccupation of the middle section of the book, the intelligence of machines. Jeff Hawkins has great respect, as do I, for those smart people, friends of his and mine, who fear the approach of superintelligent machines to supersede us, subjugate us, or even dispose of us altogether. But Hawkins doesn’t fear them, partly because the faculties that make for mastery of chess or Go are not those that can cope with the complexity of the real world. Children who can’t play chess “know how liquids spill, balls roll, and dogs bark. They know how to use pencils, markers, paper, and glue. They know how to open books and that paper can rip.” And they have a self-image, a body image that emplaces them in the world of physical reality and allows them to navigate effortlessly through it.

It is not that Hawkins underestimates the power of artificial intelligence and the robots of the future. On the contrary. But he thinks most present-day research is going about it the wrong way. The right way, in his view, is to understand how the brain works and to borrow its ways but hugely speed them up.

And there is no reason to (indeed, please let’s not) borrow the ways of the old brain, its lusts and hungers, cravings and angers, feelings and fears, which can drive us along paths seen as harmful by the new brain. Harmful at least from the perspective that Hawkins and I, and almost certainly you, value. For he is very clear that our enlightened values must, and do, diverge sharply from the primary and primitive value of our selfish genes—the raw imperative to reproduce at all costs. Without an old brain, in his view (which I suspect may be controversial), there is no reason to expect an AI to harbour malevolent feelings toward us. By the same token, and also perhaps controversially, he doesn’t think switching off a conscious AI would be murder: Without an old brain, why would it feel fear or sadness? Why would it want to survive?

In the chapter “Genes Versus Knowledge,” we are left in no doubt about the disparity between the goals of old brain (serving selfish genes) and of the new brain (knowledge). It is the glory of the human cerebral cortex that it—unique among all animals and unprecedented in all geological time—has the power to defy the dictates of the selfish genes. We can enjoy sex without procreation. We can devote our lives to philosophy, mathematics, poetry, astrophysics, music, geology, or the warmth of human love, in defiance of the old brain’s genetic urging that these are a waste of time—time that “should” be spent fighting rivals and pursuing multiple sexual partners: “As I see it, we have a profound choice to make. It is a choice between favoring the old brain or favoring the new brain. More specifically, do we want our future to be driven by the processes that got us here, namely, natural selection, competition, and the drive of selfish genes? Or, do we want our future to be driven by intelligence and its desire to understand the world?”

I began by quoting T. H. Huxley’s endearingly humble remark on closing Darwin’s Origin. I’ll end with just one of Jeff Hawkins’s many fascinating ideas—he wraps it up in a mere couple of pages—which had me echoing Huxley. Feeling the need for a cosmic tombstone, something to let the galaxy know that we were once here and capable of announcing the fact, Hawkins notes that all civilisations are ephemeral. On the scale of universal time, the interval between a civilisation’s invention of electromagnetic communication and its extinction is like the flash of a firefly. The chance of any one flash coinciding with another is unhappily small. What we need, then—why I called it a tombstone—is a message that says not “We are here” but “We were once here.” And the tombstone must have cosmic-scale duration: not only must it be visible from parsecs away, it must last for millions if not billions of years, so that it is still proclaiming its message when other flashes of intellect intercept it long after our extinction. Broadcasting prime numbers or the digits of π won’t cut it. Not as a radio signal or a pulsed laser beam, anyway. They certainly proclaim biological intelligence, which is why they are the stock-in-trade of SETI (the search for extraterrestrial intelligence) and science fiction, but they are too brief, too in the present. So, what signal would last long enough and be detectable from a very great distance in any direction? This is where Hawkins provoked my inner Huxley.

It’s beyond us today, but in the future, before our firefly flash is spent, we could put into orbit around the Sun a series of satellites “that block a bit of the Sun’s light in a pattern that would not occur naturally. These orbiting Sun blockers would continue to orbit the Sun for millions of years, long after we are gone, and they could be detected from far away.” Even if the spacing of these umbral satellites is not literally a series of prime numbers, the message could be made unmistakable: “Intelligent Life Woz ’Ere.”

What I find rather pleasing—and I offer the vignette to Jeff Hawkins to thank him for the pleasure his brilliant book has given me—is that a cosmic message coded in the form of a pattern of intervals between spikes (or in his case anti-spikes, as his satellites dim the Sun) would be using the same kind of code as a neuron.

This is a book about how the brain works. It works the brain in a way that is nothing short of exhilarating.

PART 1

A New Understanding of the Brain

The cells in your head are reading these words. Think of how remarkable that is. Cells are simple. A single cell can’t read, or think, or do much of anything. Yet, if we put enough cells together to make a brain, they not only read books, they write them. They design buildings, invent technologies, and decipher the mysteries of the universe. How a brain made of simple cells creates intelligence is a profoundly interesting question, and it remains a mystery.

Understanding how the brain works is considered one of humanity’s grand challenges. The quest has spawned dozens of national and international initiatives, such as Europe’s Human Brain Project and the International Brain Initiative. Tens of thousands of neuroscientists work in dozens of specialties, in practically every country in the world, trying to understand the brain. Although neuroscientists study the brains of different animals and ask varied questions, the ultimate goal of neuroscience is to learn how the human brain gives rise to human intelligence.

You might be surprised by my claim that the human brain remains a mystery. Every year, new brain-related discoveries are announced, new brain books are published, and researchers in related fields such as artificial intelligence claim their creations are approaching the intelligence of, say, a mouse or a cat. It would be easy to conclude from this that scientists have a pretty good idea of how the brain works. But if you ask neuroscientists, almost all of them will admit that we are still in the dark. We have accumulated a tremendous amount of knowledge and facts about the brain, but we have little understanding of how the whole thing works.

In 1979, Francis Crick, famous for his work on DNA, wrote an essay about the state of brain science, titled “Thinking About the Brain.” He described the large quantity of facts that scientists had collected about the brain, yet, he concluded, “in spite of the steady accumulation of detailed knowledge, how the human brain works is still profoundly mysterious.” He went on to say, “What is conspicuously lacking is a broad framework of ideas in which to interpret these results.”

Crick observed that scientists had been collecting data on the brain for decades. They knew a great many facts. But no one had figured out how to assemble those facts into something meaningful. The brain was like a giant jigsaw puzzle with thousands of pieces. The puzzle pieces were sitting in front of us, but we could not make sense of them. No one knew what the solution was supposed to look like. According to Crick, the brain was a mystery not because we hadn’t collected enough data, but because we didn’t know how to arrange the pieces we already had. In the forty years since Crick wrote his essay there have been many significant discoveries about the brain, several of which I will talk about later, but overall his observation is still true. How intelligence arises from cells in your head is still a profound mystery. As more puzzle pieces are collected each year, it sometimes feels as if we are getting further from understanding the brain, not closer.

I read Crick’s essay when I was young, and it inspired me. I felt that we could solve the mystery of the brain in my lifetime, and I have pursued that goal ever since. For the past fifteen years, I have led a research team in Silicon Valley that studies a part of the brain called the neocortex. The neocortex occupies about 70 percent of the volume of a human brain and it is responsible for everything we associate with intelligence, from our senses of vision, touch, and hearing, to language in all its forms, to abstract thinking such as mathematics and philosophy. The goal of our research is to understand how the neocortex works in sufficient detail that we can explain the biology of the brain and build intelligent machines that work on the same principles.

In early 2016 the progress of our research changed dramatically. We had a breakthrough in our understanding. We realized that we and other scientists had missed a key ingredient. With this new insight, we saw how the pieces of the puzzle fit together. In other words, I believe we discovered the framework that Crick wrote about, a framework that not only explains the basics of how the neocortex works but also gives rise to a new way to think about intelligence. We do not yet have a complete theory of the brain—far from it. Scientific fields typically start with a theoretical framework and only later do the details get worked out. Perhaps the most famous example is Darwin’s theory of evolution. Darwin proposed a bold new way of thinking about the origin of species, but the details, such as how genes and DNA work, would not be known until many years later.

To be intelligent, the brain has to learn a great many things about the world. I am not just referring to what we learn in school, but to basic things, such as what everyday objects look, sound, and feel like. We have to learn how objects behave, from how doors open and close to what the apps on our smartphones do when we touch the screen. We need to learn where everything is located in the world, from where you keep your personal possessions in your home to where the library and post office are in your town. And of course, we learn higher-level concepts, such as the meaning of “compassion” and “government.” On top of all this, each of us learns the meaning of tens of thousands of words. Every one of us possesses a tremendous amount of knowledge about the world. Some of our basic skills are determined by our genes, such as how to eat or how to recoil from pain. But most of what we know about the world is learned.

Scientists say that the brain learns a model of the world. The word “model” implies that what we know is not just stored as a pile of facts but is organized in a way that reflects the structure of the world and everything it contains. For example, to know what a bicycle is, we don’t remember a list of facts about bicycles. Instead, our brain creates a model of bicycles that includes the different parts, how the parts are arranged relative to each other, and how the different parts move and work together. To recognize something, we need to first learn what it looks and feels like, and to achieve goals we need to learn how things in the world typically behave when we interact with them. Intelligence is intimately tied to the brain’s model of the world; therefore, to understand how the brain creates intelligence, we have to figure out how the brain, made of simple cells, learns a model of the world and everything in it.

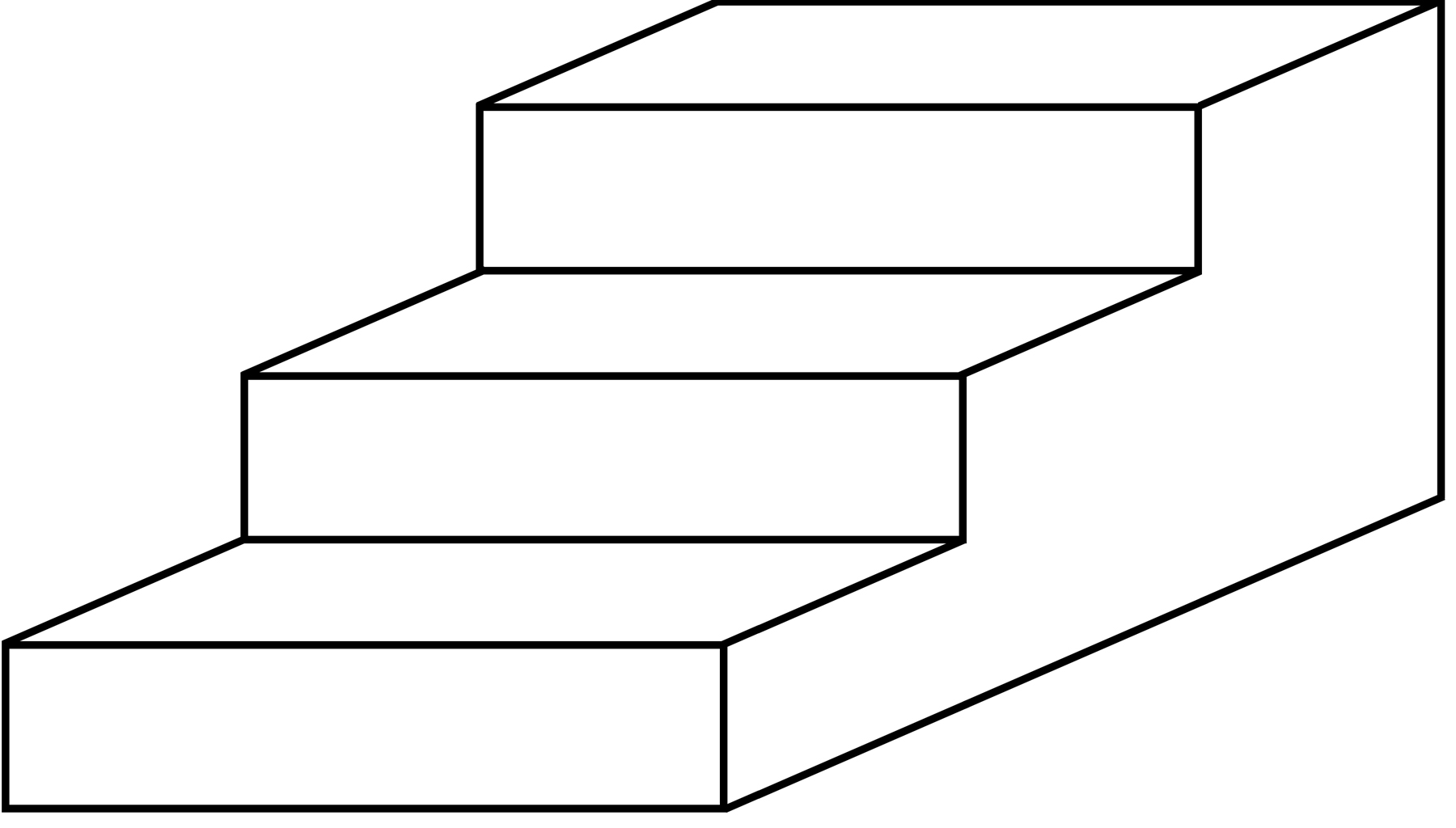

Our 2016 discovery explains how the brain learns this model. We deduced that the neocortex stores everything we know, all our knowledge, using something called reference frames. I will explain this more fully later, but for now, consider a paper map as an analogy. A map is a type of model: a map of a town is a model of the town, and the grid lines, such as lines of latitude and longitude, are a type of reference frame. A map’s grid lines, its reference frame, provide the structure of the map. A reference frame tells you where things are located relative to each other, and it can tell you how to achieve goals, such as how to get from one location to another. We realized that the brain’s model of the world is built using maplike reference frames. Not one reference frame, but hundreds of thousands of them. Indeed, we now understand that most of the cells in your neocortex are dedicated to creating and manipulating reference frames, which the brain uses to plan and think.
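The map analogy can be made concrete with a toy sketch. It illustrates only the analogy, not how the neocortex or the theory itself works: a small dictionary stands in for the town map, and grid coordinates stand in for its reference frame. The landmark names, coordinates, and helper functions are all hypothetical, chosen purely for illustration.

```python
# Toy illustration of the paper-map analogy only (not a model of the neocortex).
# Landmark names, coordinates, and function names are hypothetical.
from typing import Dict, Tuple

Location = Tuple[int, int]  # (column, row) on the map's grid lines

# The "model": landmarks placed in a shared grid, i.e. a reference frame.
town_map: Dict[str, Location] = {
    "home": (1, 1),
    "library": (4, 1),
    "post_office": (4, 5),
}

def displacement(frame: Dict[str, Location], start: str, goal: str) -> Location:
    """Where the goal lies relative to the start, along each grid axis."""
    sx, sy = frame[start]
    gx, gy = frame[goal]
    return (gx - sx, gy - sy)

def grid_distance(frame: Dict[str, Location], start: str, goal: str) -> int:
    """Number of grid steps needed to get from start to goal."""
    dx, dy = displacement(frame, start, goal)
    return abs(dx) + abs(dy)
```

Because every landmark is stored in the same frame, calling `displacement(town_map, "home", "post_office")` gives `(3, 4)` without the relationship between those two places ever having been stored explicitly. This is the sense in which a reference frame tells you both where things are relative to each other and how to get from one to another.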

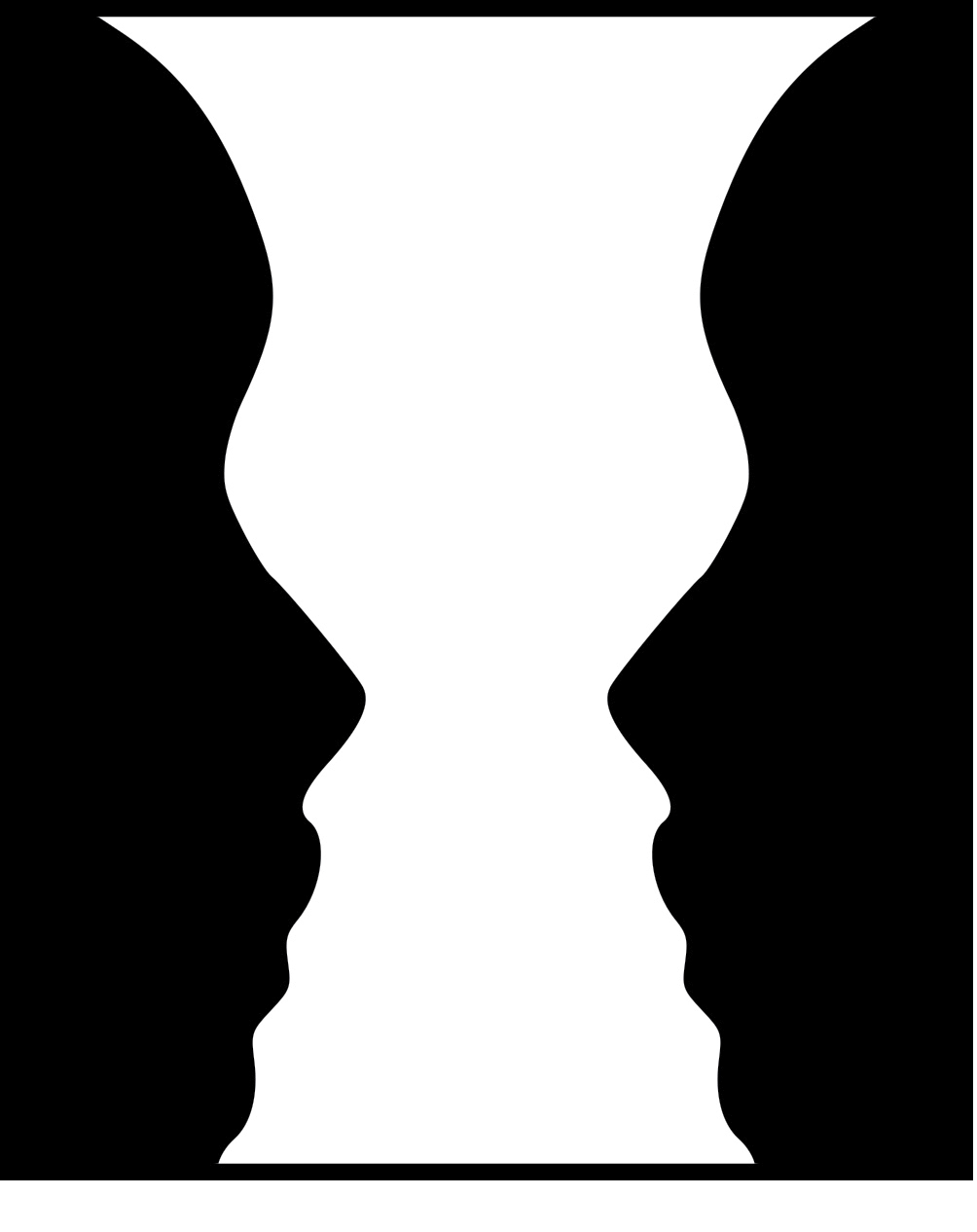

With this new insight, answers to some of neuroscience’s biggest questions started to come into view. Questions such as, How do our varied sensory inputs get united into a singular experience? What is happening when we think? How can two people reach different beliefs from the same observations? And why do we have a sense of self?

This book tells the story of these discoveries and the implications they have for our future. Most of the material has been published in scientific journals. I provide links to these papers at the end of the book. However, scientific papers are not well suited for explaining large-scale theories, especially in a way that a nonspecialist can understand.

I have divided the book into three parts. In the first part, I describe our theory of reference frames, which we call the Thousand Brains Theory. The theory is partly based on logical deduction, so I will take you through the steps we took to reach our conclusions. I will also give you a bit of historical background to help you see how the theory relates to the history of thinking about the brain. By the end of the first part of the book, I hope you will have an understanding of what is going on in your head as you think and act within the world, and what it means to be intelligent.

The second part of the book is about machine intelligence. The twenty-first century will be transformed by intelligent machines in the same way that the twentieth century was transformed by computers. The Thousand Brains Theory explains why today’s AI is not yet intelligent and what we need to do to make truly intelligent machines. I describe what intelligent machines in the future will look like and how we might use them. I explain why some machines will be conscious and what, if anything, we should do about it. Finally, many people are worried that intelligent machines are an existential risk, that we are about to create a technology that will destroy humanity. I disagree. Our discoveries illustrate why machine intelligence, on its own, is benign. But, as a powerful technology, the risk lies in the ways humans might use it.

In the third part of the book, I look at the human condition from the perspective of the brain and intelligence. The brain’s model of the world includes a model of our self. This leads to the strange truth that what you and I perceive, moment to moment, is a simulation of the world, not the real world. One consequence of the Thousand Brains Theory is that our beliefs about the world can be false. I explain how this can occur, why false beliefs can be difficult to eliminate, and how false beliefs combined with our more primitive emotions are a threat to our long-term survival.

The final chapters discuss what I consider to be the most important choice we will face as a species. There are two ways to think about ourselves. One is as biological organisms, products of evolution and natural selection. From this point of view, humans are defined by our genes, and the purpose of life is to replicate them. But we are now emerging from our purely biological past. We have become an intelligent species. We are the first species on Earth to know the size and age of the universe. We are the first species to know how the Earth evolved and how we came to be. We are the first species to develop tools that allow us to explore the universe and learn its secrets. From this point of view, humans are defined by our intelligence and our knowledge, not by our genes. The choice we face as we think about the future is, should we continue to be driven by our biological past or choose instead to embrace our newly emerged intelligence?

We may not be able to do both. We are creating powerful technologies that can fundamentally alter our planet, manipulate biology, and soon, create machines that are smarter than we are. But we still possess the primitive behaviors that got us to this point. This combination is the true existential risk that we must address. If we are willing to embrace intelligence and knowledge as what defines us, instead of our genes, then perhaps we can create a future that is longer lasting and has a more noble purpose.

The journey that led to the Thousand Brains Theory has been long and convoluted. I studied electrical engineering in college and had just started my first job at Intel when I read Francis Crick’s essay. It had such a profound effect on me that I decided to switch careers and dedicate my life to studying the brain. After an unsuccessful attempt to get a position studying brains at Intel, I applied to be a graduate student at MIT’s AI lab. (I felt that the best way to build intelligent machines was first to study the brain.) In my interviews with MIT faculty, my proposal to create intelligent machines based on brain theory was rejected. I was told that the brain was just a messy computer and there was no point in studying it. Crestfallen but undeterred, I next enrolled in a neuroscience PhD program at the University of California, Berkeley. I started my studies in January 1986.

Upon arriving at Berkeley, I reached out to the chair of the graduate group of neurobiology, Dr. Frank Werblin, for advice. He asked me to write a paper describing the research I wanted to do for my PhD thesis. In the paper, I explained that I wanted to work on a theory of the neocortex. I knew that I wanted to approach the problem by studying how the neocortex makes predictions. Professor Werblin had several faculty members read my paper, and it was well received. He told me that my ambitions were admirable, my approach was sound, and the problem I wanted to work on was one of the most important in science, but—and I didn’t see this coming—he didn’t see how I could pursue my dream at that time. As a neuroscience graduate student, I would have to work for a professor, doing similar work to what the professor was already working on. And no one at Berkeley, or anywhere else that he knew of, was doing something close enough to what I wanted to do.

Trying to develop an overall theory of brain function was considered too ambitious and therefore too risky. If a student worked on this for five years and didn’t make progress, they might not graduate. It was similarly risky for professors; they might not get tenure. The agencies that dispensed funding for research also thought it was too risky. Research proposals that focused on theory were routinely rejected.

I could have worked in an experimental lab, but after interviewing at a few I knew that it wasn’t a good fit for me. I would be spending most of my hours training animals, building experimental equipment, and collecting data. Any theories I developed would be limited to the part of the brain studied in that lab.

For the next two years, I spent my days in the university’s libraries reading neuroscience paper after neuroscience paper. I read hundreds of them, including all the most important papers published over the previous fifty years. I also read what psychologists, linguists, mathematicians, and philosophers thought about the brain and intelligence. I got a first-class, albeit unconventional, education. After two years of self-study, a change was needed. I came up with a plan. I would work again in industry for four years and then reassess my opportunities in academia. So, I went back to working on personal computers in Silicon Valley.

I started having success as an entrepreneur. From 1988 to 1992, I created one of the first tablet computers, the GridPad. Then in 1992, I founded Palm Computing, beginning a ten-year span when I designed some of the first handheld computers and smartphones, such as the PalmPilot and the Treo. Everyone who worked with me at Palm knew that my heart was in neuroscience, that I viewed my work in mobile computing as temporary. Designing these devices was exciting work. I knew that billions of people would ultimately rely on them, but I felt that understanding the brain was even more important. I believed that brain theory would have a bigger positive impact on the future of humanity than computing. Therefore, I needed to return to brain research.

There was no convenient time to leave, so I picked a date and walked away from the businesses I helped create. With the assistance and prodding of a few neuroscientist friends (notably Bob Knight at UC Berkeley, Bruno Olshausen at UC Davis, and Steve Zornetzer at NASA Ames Research), I created the Redwood Neuroscience Institute (RNI) in 2002. RNI focused exclusively on neocortical theory and had ten full-time scientists. We were all interested in large-scale theories of the brain, and RNI was one of the few places in the world where this focus was not only tolerated but expected. Over the course of the three years that I ran RNI, we had over one hundred visiting scholars, some of whom stayed for days or weeks. We had weekly lectures, open to the public, which usually turned into hours of discussion and debate.

Everyone who worked at RNI, including me, thought it was great. I got to know and spend time with many of the world’s top neuroscientists. It allowed me to become knowledgeable in multiple fields of neuroscience, which is difficult to do with a typical academic position. The problem was that I wanted to know the answers to a set of specific questions, and I didn’t see the team moving toward consensus on those questions. The individual scientists were content to do their own thing. So, after three years of running an institute, I decided the best way to achieve my goals was to lead my own research team.

RNI was in all other ways doing well, so we decided to move it to UC Berkeley. Yes, the same place that told me I couldn’t study brain theory decided, nineteen years later, that a brain-theory center was exactly what they needed. RNI continues today as the Redwood Center for Theoretical Neuroscience.

As RNI moved to UC Berkeley, several colleagues and I started Numenta. Numenta is an independent research company. Our primary goal is to develop a theory of how the neocortex works. Our secondary goal is to apply what we learn about brains to machine learning and machine intelligence. Numenta is similar to a typical research lab at a university, but with more flexibility. It allows me to direct a team, make sure we are all focused on the same task, and try new ideas as often as needed.

As I write, Numenta is over fifteen years old, yet in some ways we are still like a start-up. Trying to figure out how the neocortex works is extremely challenging. To make progress, we need the flexibility and focus of a start-up environment. We also need a lot of patience, which is not typical for a start-up. Our first significant discovery—how neurons make predictions—occurred in 2010, five years after we started. The discovery of maplike reference frames in the neocortex occurred six years later in 2016.

In 2019, we started to work on our second mission, applying brain principles to machine learning. That year was also when I started writing this book, to share what we have learned.

I find it amazing that the only thing in the universe that knows the universe exists is the three-pound mass of cells floating in our heads. It reminds me of the old puzzle: If a tree falls in the forest and no one is there to hear it, did it make a sound? Similarly, we can ask: If the universe came into and out of existence and there were no brains to know it, did the universe really exist? Who would know? A few billion cells suspended in your skull know not only that the universe exists but that it is vast and old. These cells have learned a model of the world, knowledge that, as far as we know, exists nowhere else. I have spent a lifetime striving to understand how the brain does this, and I am excited by what we have learned. I hope you are excited as well. Let’s get started.

CHAPTER 1

Old Brain—New Brain

To understand how the brain creates intelligence, there are a few basics you need to know first.

Shortly after Charles Darwin published his theory of evolution, biologists realized that the human brain itself had evolved over time, and that its evolutionary history is evident from just looking at it. Unlike species, which often disappear as new ones appear, the brain evolved by adding new parts on top of the older parts. For example, some of the oldest and simplest nervous systems are sets of neurons that run down the back of tiny worms. These neurons allow the worm to make simple movements, and they are the predecessor of our spinal cord, which is similarly responsible for many of our basic movements. Next to appear was a lump of neurons at one end of the body that controlled functions such as digestion and breathing. This lump is the predecessor of our brain stem, which similarly controls our digestion and breathing. The brain stem extended what was already there, but it did not replace it. Over time, the brain grew capable of increasingly complex behaviors by evolving new parts on top of the older parts. This method of growth by addition applies to the brains of most complex animals. It is easy to see why the old brain parts are still there. No matter how smart and sophisticated we are, breathing, eating, sex, and reflex reactions are still critical to our survival.

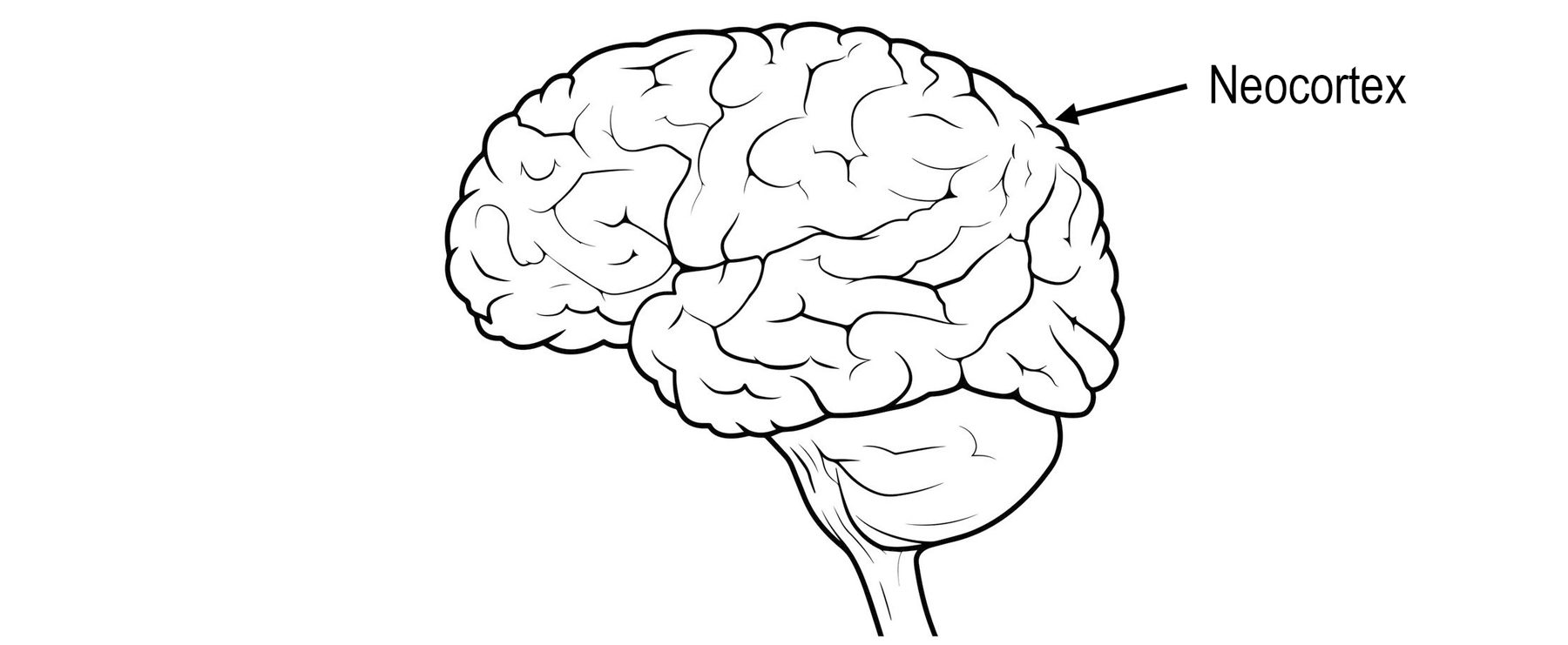

The newest part of our brain is the neocortex, which means “new outer layer.” All mammals, and only mammals, have a neocortex. The human neocortex is particularly large, occupying about 70 percent of the volume of our brain. If you could remove the neocortex from your head and iron it flat, it would be about the size of a large dinner napkin and twice as thick (about 2.5 millimeters). It wraps around the older parts of the brain such that when you look at a human brain, most of what you see is the neocortex (with its characteristic folds and creases), with bits of the old brain and the spinal cord sticking out the bottom.

A human brain

The neocortex is the organ of intelligence. Almost all the capabilities we think of as intelligence—such as vision, language, music, math, science, and engineering—are created by the neocortex. When we think about something, it is mostly the neocortex doing the thinking. Your neocortex is reading or listening to this book, and my neocortex is writing this book. If we want to understand intelligence, then we have to understand what the neocortex does and how it does it.

An animal doesn’t need a neocortex to live a complex life. A crocodile’s brain is roughly equivalent to our brain, but without a proper neocortex. A crocodile has sophisticated behaviors, cares for its young, and knows how to navigate its environment. Most people would say a crocodile has some level of intelligence, but nothing close to human intelligence.

The neocortex and the older parts of the brain are connected via nerve fibers; therefore, we cannot think of them as completely separate organs. They are more like roommates, with separate agendas and personalities, but who need to cooperate to get anything done. The neocortex is in a decidedly unfair position, as it doesn’t control behavior directly. Unlike other parts of the brain, none of the cells in the neocortex connect directly to muscles, so it can’t, on its own, make any muscles move. When the neocortex wants to do something, it sends a signal to the old brain, in a sense asking the old brain to do its bidding. For example, breathing is a function of the brain stem, requiring no thought or input from the neocortex. The neocortex can temporarily control breathing, as when you consciously decide to hold your breath. But if the brain stem detects that your body needs more oxygen, it will ignore the neocortex and take back control. Similarly, the neocortex might think, “Don’t eat this piece of cake. It isn’t healthy.” But if older and more primitive parts of the brain say, “Looks good, smells good, eat it,” the cake can be hard to resist. This battle between the old and new brain is an underlying theme of this book. It will play an important role when we discuss the existential risks facing humanity.

The old brain contains dozens of separate organs, each with a specific function. They are visually distinct, and their shapes, sizes, and connections reflect what they do. For example, there are several pea-size organs in the amygdala, an older part of the brain, that are responsible for different types of aggression, such as premeditated and impulsive aggression.

The neocortex is surprisingly different. Although it occupies almost three-quarters of the brain’s volume and is responsible for a myriad of cognitive functions, it has no visually obvious divisions. The folds and creases are needed to fit the neocortex into the skull, similar to what you would see if you forced a napkin into a large wine glass. If you ignore the folds and creases, then the neocortex looks like one large sheet of cells, with no obvious divisions.

Nonetheless, the neocortex is still divided into several dozen areas, or regions, that perform different functions. Some of the regions are responsible for vision, some for hearing, and some for touch. There are regions responsible for language and planning. When the neocortex is damaged, the deficits that arise depend on what part of the neocortex is affected. Damage to the back of the head results in blindness, and damage to the left side could lead to loss of language.

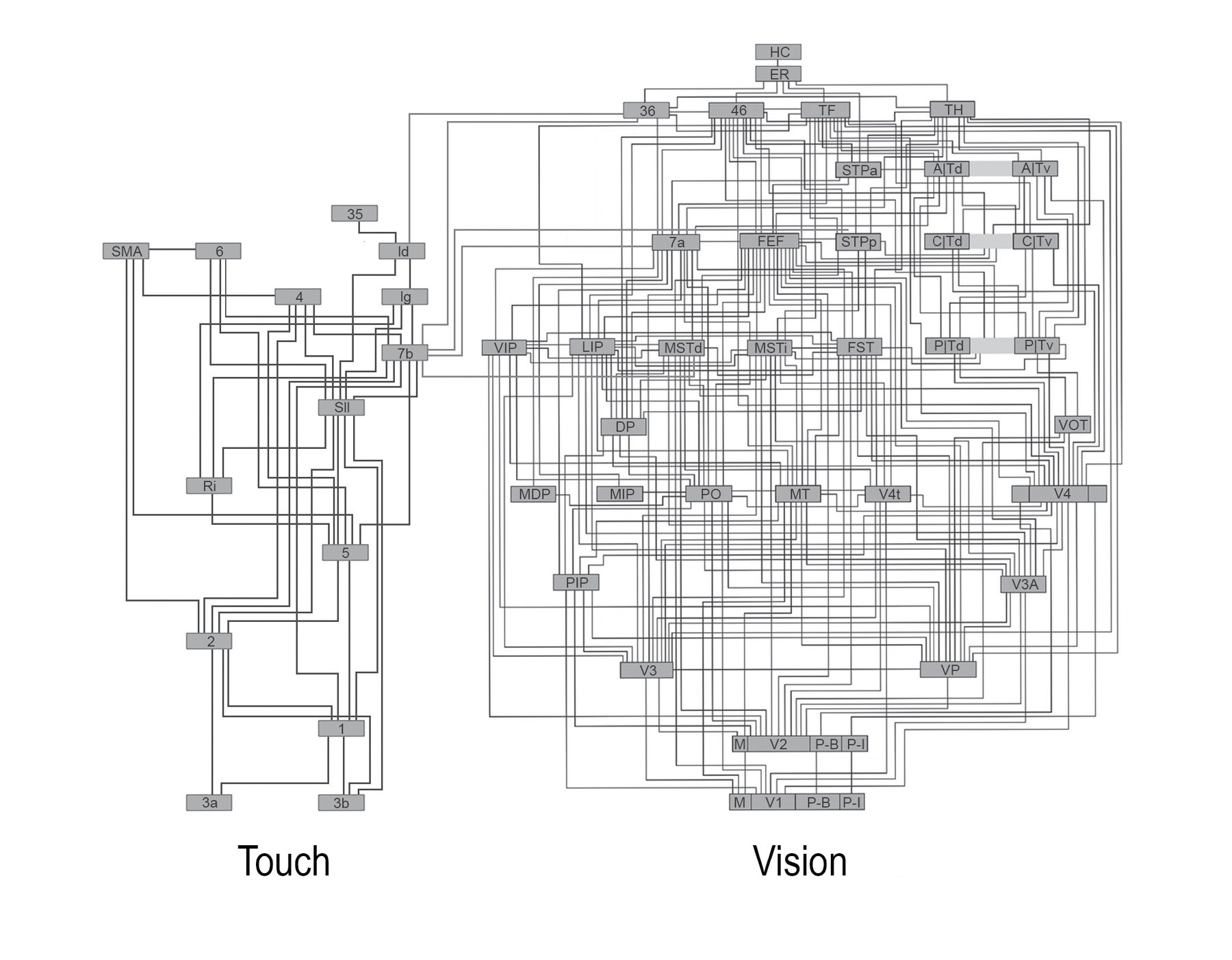

The regions of the neocortex connect to each other via bundles of nerve fibers that travel under the neocortex, the so-called white matter of the brain. By carefully following these nerve fibers, scientists can determine how many regions there are and how they are connected. It is difficult to study human brains, so the first complex mammal that was analyzed this way was the macaque monkey. In 1991, two scientists, Daniel Felleman and David Van Essen, combined data from dozens of separate studies to create a famous illustration of the macaque monkey’s neocortex. Here is one of the images that they created (a map of a human’s neocortex would be different in detail, but similar in overall structure).

Connections in the neocortex

The dozens of small rectangles in this picture represent the different regions of the neocortex, and the lines represent how information flows from one region to another via the white matter.

A common interpretation of this image is that the neocortex is hierarchical, like a flowchart. Input from the senses enters at the bottom (in this diagram, input from the skin is on the left and input from the eyes is on the right). The input is processed in a series of steps, each of which extracts more and more complex features from the input. For example, the first region that gets input from the eyes might detect simple patterns such as lines or edges. This information is sent to the next region, which might detect more complex features such as corners or shapes. This stepwise process continues until some regions detect complete objects.

There is a lot of evidence supporting the flowchart hierarchy interpretation. For example, when scientists look at cells in regions at the bottom of the hierarchy, they see that they respond best to simple features, while cells in the next region respond to more complex features. And sometimes they find cells in higher regions that respond to complete objects. However, there is also a lot of evidence that suggests the neocortex is not like a flowchart. As you can see in the diagram, the regions aren’t arranged one on top of another as they would be in a flowchart. There are multiple regions at each level, and most regions connect to multiple levels of the hierarchy. In fact, the majority of connections between regions do not fit into a hierarchical scheme at all. In addition, only some of the cells in each region act like feature detectors; scientists have not been able to determine what the majority of cells in each region are doing.

We are left with a puzzle. The organ of intelligence, the neocortex, is divided into dozens of regions that do different things, but on the surface, they all look the same. The regions connect in a complex mishmash that is somewhat like a flowchart but mostly not. It is not immediately clear why the organ of intelligence looks this way.

The next obvious thing to do is to look inside the neocortex, to see the detailed circuitry within its 2.5 mm thickness. You might imagine that, even if different areas of the neocortex look the same on the outside, the detailed neural circuits that create vision, touch, and language would look different on the inside. But this is not the case.

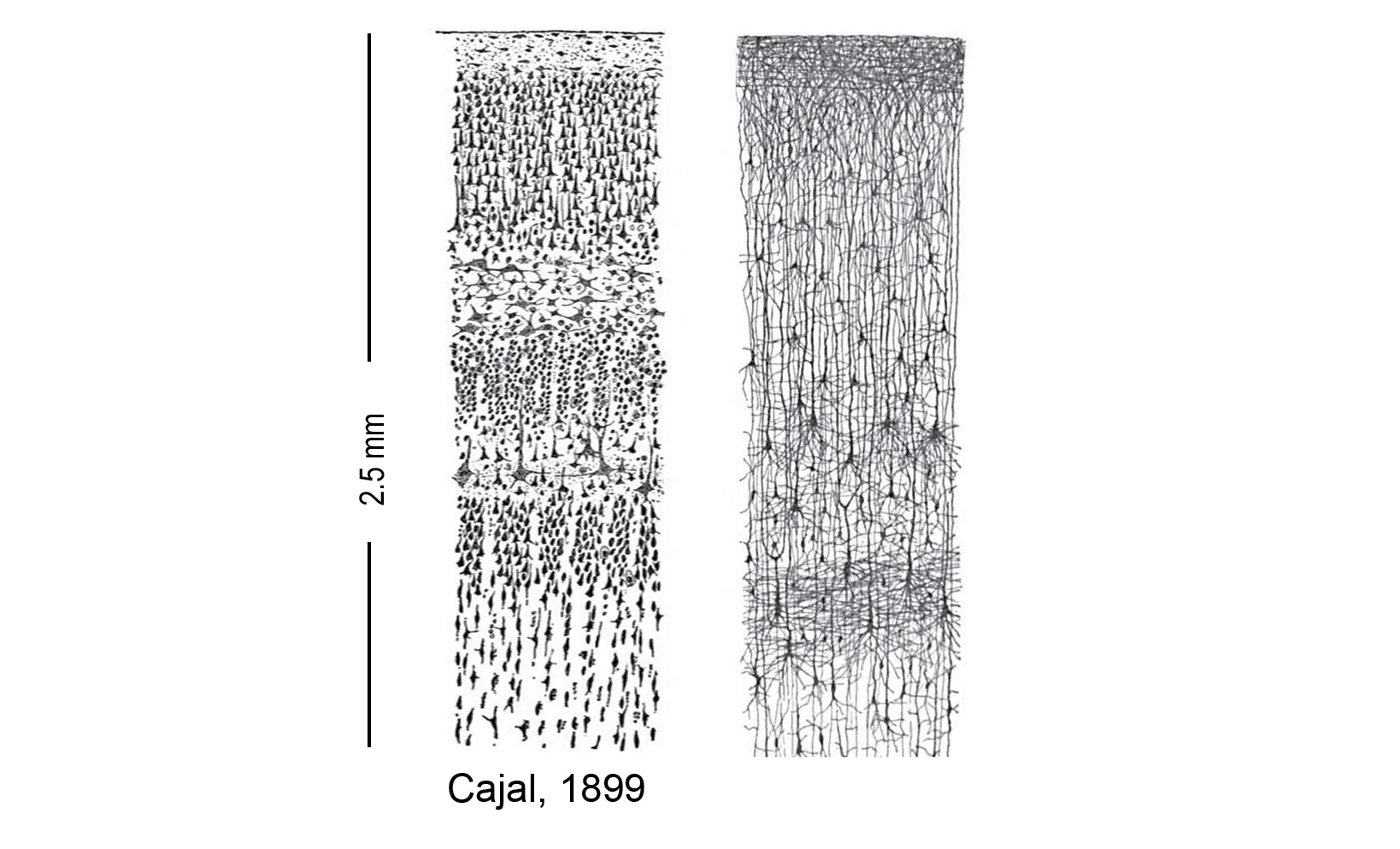

The first person to look at the detailed circuitry inside the neocortex was Santiago Ramón y Cajal. In the late 1800s, staining techniques were discovered that allowed individual neurons in the brain to be seen with a microscope. Cajal used these stains to make pictures of every part of the brain. He created thousands of images that, for the first time, showed what brains look like at the cellular level. All of Cajal’s beautiful and intricate images of the brain were drawn by hand. He ultimately received the Nobel Prize for his work. Here are two drawings that Cajal made of the neocortex. The one on the left shows only the cell bodies of neurons. The one on the right includes the connections between cells. These images show a slice across the 2.5 mm thickness of the neocortex.

Neurons in a slice of neocortex

The stains used to make these images only color a small percentage of the cells. This is fortunate, because if every cell were stained then all we would see is black. Keep in mind that the actual number of neurons is much larger than what you see here.

The first thing Cajal and others observed was that the neurons in the neocortex appear to be arranged in layers. The layers, which run parallel to the surface of the neocortex (horizontal in the picture), are caused by differences in the size of the neurons and how closely they are packed. Imagine you had a glass tube and poured in an inch of peas, an inch of lentils, and an inch of soybeans. Looking at the tube from the side you would see three layers. You can see layers in the pictures above. How many layers there are depends on who is doing the counting and the criteria they use for distinguishing the layers. Cajal saw six layers. A simple interpretation is that each layer of neurons is doing something different.

Today we know that there are dozens of different types of neurons in the neocortex, not six. Scientists still use the six-layer terminology. For example, one type of cell might be found in Layer 3 and another in Layer 5. Layer 1 is on the outermost surface of the neocortex closest to the skull, at the top of Cajal’s drawing. Layer 6 is closest to the center of the brain, farthest from the skull. It is important to keep in mind that the layers are only a rough guide to where a particular type of neuron might be found. It matters more what a neuron connects to and how it behaves. When you classify neurons by their connectivity, there are dozens of types.

The second observation from these images was that most of the connections between neurons run vertically, between layers. Neurons have treelike appendages called axons and dendrites that allow them to send information to each other. Cajal saw that most of the axons ran between layers, perpendicular to the surface of the neocortex (up and down in the images here). Neurons in some layers make long-distance horizontal connections, but most of the connections are vertical. This means that information arriving in a region of the neocortex moves mostly up and down between the layers before being sent elsewhere.

In the 120 years since Cajal first imaged the brain, hundreds of scientists have studied the neocortex to discover as many details as possible about its neurons and circuits. There are thousands of scientific papers on this topic, far more than I can summarize. Instead, I want to highlight three general observations.

1. The Local Circuits in the Neocortex Are Complex

Under one square millimeter of neocortex (about 2.5 cubic millimeters), there are roughly one hundred thousand neurons, five hundred million connections between neurons (called synapses), and several kilometers of axons and dendrites. Imagine laying out several kilometers of wire along a road and then trying to squish it into 2.5 cubic millimeters, roughly the size of a grain of rice. There are dozens of different types of neurons under each square millimeter. Each type of neuron makes prototypical connections to other types of neurons. Scientists often describe regions of the neocortex as performing a simple function, such as detecting features. However, it only takes a handful of neurons to detect features. The precise and extremely complex neural circuits seen everywhere in the neocortex tell us that every region is doing something far more complex than feature detection.

2. The Neocortex Looks Similar Everywhere

The complex circuitry of the neocortex looks remarkably alike in visual regions, language regions, and touch regions. It even looks similar across species such as rats, cats, and humans. There are differences. For example, some regions of the neocortex have more of certain cells and fewer of others, and there are some regions that have an extra cell type not found elsewhere. Presumably, whatever these regions of the neocortex are doing benefits from these differences. But overall, the variations between regions are relatively small compared to the similarities.

3. Every Part of the Neocortex Generates Movement

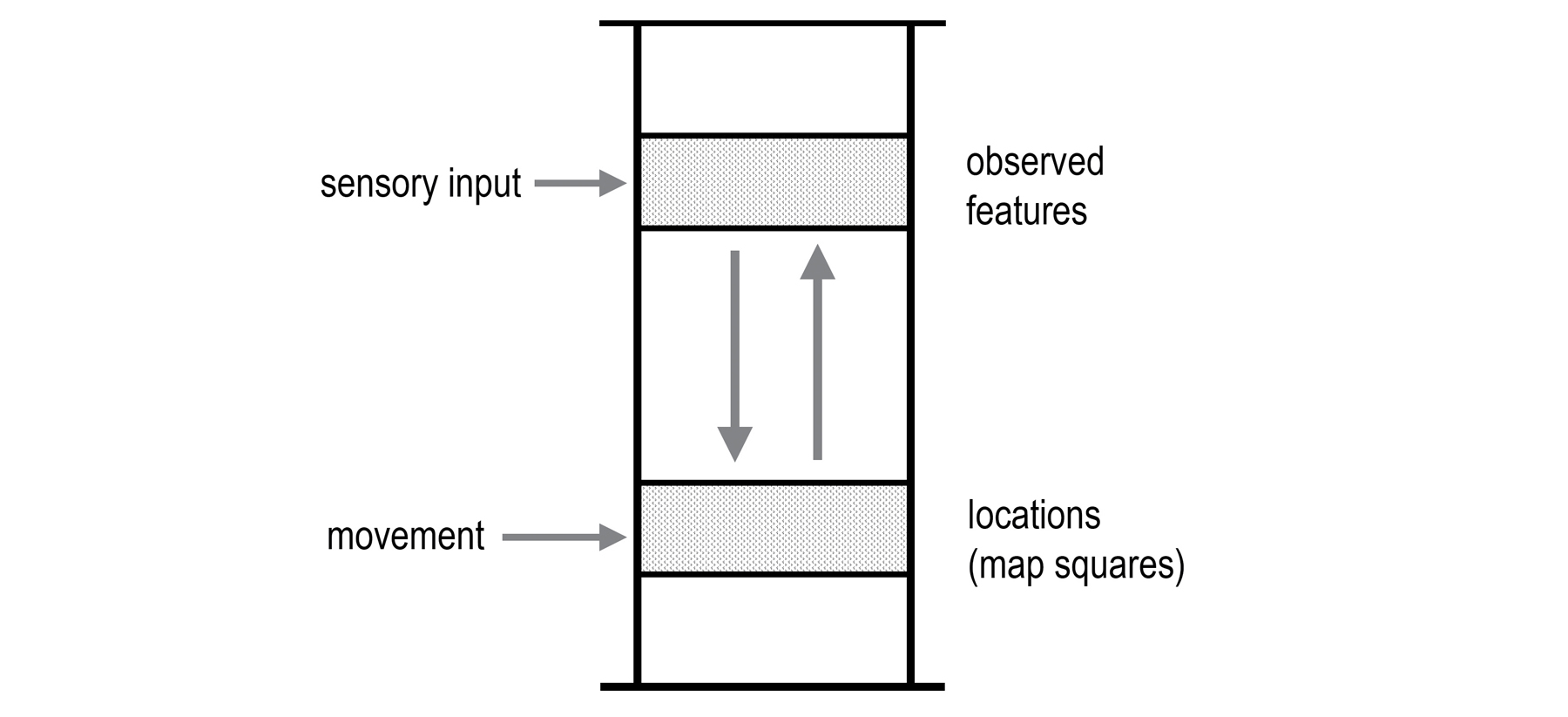

For a long time, it was believed that information entered the neocortex via the “sensory regions,” went up and down the hierarchy of regions, and finally went down to the “motor region.” Cells in the motor region project to neurons in the spinal cord that move the muscles and limbs. We now know this description is misleading. In every region they have examined, scientists have found cells that project to some part of the old brain related to movement. For example, the visual regions that get input from the eyes send a signal down to the part of the old brain responsible for moving the eyes. Similarly, the auditory regions that get input from the ears project to the part of the old brain that moves the head. Moving your head changes what you hear, similar to how moving your eyes changes what you see. The evidence we have indicates that the complex circuitry seen everywhere in the neocortex performs a sensory-motor task. There are no pure motor regions and no pure sensory regions.

In summary, the neocortex is the organ of intelligence. It is a napkin-size sheet of neural tissue divided into dozens of regions. There are regions responsible for vision, hearing, touch, and language. There are regions that are not as easily labeled that are responsible for high-level thought and planning. The regions are connected to each other with bundles of nerve fibers. Some of the connections between the regions are hierarchical, suggesting that information flows from region to region in an orderly fashion like a flowchart. But there are other connections between the regions that seem to have little order, suggesting that information goes all over at once. All regions, no matter what function they perform, look similar in detail to all other regions.

We will meet the first person who made sense of these observations in the next chapter.

This is a good point to say a few words about the style of writing in this book. I am writing for an intellectually curious lay reader. My goal is to convey everything you need to know to understand the new theory, but not a lot more. I assume most readers will have limited prior knowledge of neuroscience. However, if you have a background in neuroscience, you will know where I am omitting details and simplifying complex topics. If that applies to you, I ask for your understanding. There is an annotated reading list at the back of the book where I discuss where to find more details for those who are interested.

CHAPTER 2

Vernon Mountcastle’s Big Idea

The Mindful Brain is a small book, only one hundred pages long. Published in 1978, it contains two essays about the brain from two prominent scientists. One of the essays, by Vernon Mountcastle, a neuroscientist at Johns Hopkins University, remains one of the most iconic and important monographs ever written about the brain. Mountcastle proposed a way of thinking about the brain that is elegant—a hallmark of great theories—but also so surprising that it continues to polarize the neuroscience community.

I first read The Mindful Brain in 1982. Mountcastle’s essay had an immediate and profound effect on me, and, as you will see, his proposal heavily influenced the theory I present in this book.

Mountcastle’s writing is precise and erudite but also challenging to read. The title of his essay is the not-too-catchy “An Organizing Principle for Cerebral Function: The Unit Module and the Distributed System.” The opening lines are difficult to understand; I include them here so you can get a sense of what his essay feels like.

There can be little doubt of the dominating influence of the Darwinian revolution of the mid-nineteenth century upon concepts of the structure and function of the nervous system. The ideas of Spencer and Jackson and Sherrington and the many who followed them were rooted in the evolutionary theory that the brain develops in phylogeny by the successive addition of more cephalad parts. On this theory each new addition or enlargement was accompanied by the elaboration of more complex behavior, and at the same time, imposed a regulation upon more caudal and more primitive parts and the presumably more primitive behavior they control.

What Mountcastle says in these first three sentences is that the brain grew large over evolutionary time by adding new brain parts on top of old brain parts. The older parts control more primitive behaviors while the newer parts create more sophisticated ones. Hopefully this sounds familiar, as I discussed this idea in the previous chapter.

However, Mountcastle goes on to say that while much of the brain got bigger by adding new parts on top of old parts, that is not how the neocortex grew to occupy 70 percent of our brain. The neocortex got big by making many copies of the same thing: a basic circuit. Imagine watching a video of our brain evolving. The brain starts small. A new piece appears at one end, then another piece appears on top of that, and then another piece is appended on top of the previous pieces. At some point, millions of years ago, a new piece appears that we now call the neocortex. The neocortex starts small, but then grows larger, not by creating anything new, but by copying a basic circuit over and over. As the neocortex grows, it gets larger in area but not in thickness. Mountcastle argued that, although a human neocortex is much larger than a rat or dog neocortex, they are all made of the same element—we just have more copies of that element.

Mountcastle’s essay reminds me of Charles Darwin’s book On the Origin of Species. Darwin was nervous that his theory of evolution would cause an uproar. So, in the book, he covers a lot of dense and relatively uninteresting material about variation in the animal kingdom before finally describing his theory toward the end. Even then, he never explicitly says that evolution applies to humans. When I read Mountcastle’s essay, I get a similar impression. It feels as if Mountcastle knows that his proposal will generate pushback, so he is careful and deliberate in his writing. Here is a second quote from later in Mountcastle’s essay:

Put shortly, there is nothing intrinsically motor about the motor cortex, nor sensory about the sensory cortex. Thus the elucidation of the mode of operation of the local modular circuit anywhere in the neocortex will be of great generalizing significance.

In these two sentences, Mountcastle summarizes the major idea put forth in his essay. He says that every part of the neocortex works on the same principle. All the things we think of as intelligence—from seeing, to touching, to language, to high-level thought—are fundamentally the same.

Recall that the neocortex is divided into dozens of regions, each of which performs a different function. If you look at the neocortex from the outside, you can’t see the regions; there are no demarcations, just like a satellite image doesn’t reveal political borders between countries. If you cut through the neocortex, you see a complex and detailed architecture. However, the details look similar no matter what region of the cortex you cut into. A slice of cortex responsible for vision looks like a slice of cortex responsible for touch, which looks like a slice of cortex responsible for language.

Mountcastle proposed that the reason the regions look similar is that they are all doing the same thing. What makes them different is not their intrinsic function but what they are connected to. If you connect a cortical region to eyes, you get vision; if you connect the same cortical region to ears, you get hearing; and if you connect regions to other regions, you get higher thought, such as language. Mountcastle then points out that if we can discover the basic function of any part of the neocortex, we will understand how the entire thing works.

Mountcastle’s idea is as surprising and profound as Darwin’s discovery of evolution. Darwin proposed a mechanism—an algorithm, if you will—that explains the incredible diversity of life. What on the surface appears to be many different animals and plants, many types of living things, are in reality manifestations of the same underlying evolutionary algorithm. In turn, Mountcastle is proposing that all the things we associate with intelligence, which on the surface appear to be different, are, in reality, manifestations of the same underlying cortical algorithm. I hope you can appreciate how unexpected and revolutionary Mountcastle’s proposal is. Darwin proposed that the diversity of life is due to one basic algorithm. Mountcastle proposed that the diversity of intelligence is also due to one basic algorithm.

Like many things of historical significance, there is some debate as to whether Mountcastle was the first person to propose this idea. It has been my experience that every idea has at least some precedent. But, as far as I know, Mountcastle was the first person to clearly and carefully lay out the argument for a common cortical algorithm.

Mountcastle’s and Darwin’s proposals differ in one interesting way. Darwin knew what the algorithm was: evolution is based on random variation and natural selection. However, Darwin didn’t know where the algorithm was in the body. This was not known until the discovery of DNA many years later. Mountcastle, by contrast, didn’t know what the cortical algorithm was; he didn’t know what the principles of intelligence were. But he did know where this algorithm resided in the brain.

So, what was Mountcastle’s proposal for the location of the cortical algorithm? He said that the fundamental unit of the neocortex, the unit of intelligence, was a “cortical column.” Looking at the surface of the neocortex, a cortical column occupies about one square millimeter. It extends through the entire 2.5 mm thickness, giving it a volume of 2.5 cubic millimeters. By this definition, there are roughly 150,000 cortical columns stacked side by side in a human neocortex. You can imagine a cortical column is like a little piece of thin spaghetti. A human neocortex is like 150,000 short pieces of spaghetti stacked vertically next to each other.

The width of cortical columns varies from species to species and region to region. For example, in mice and rats, there is one cortical column for each whisker; these columns are about half a millimeter in diameter. In cats, vision columns appear to be about one millimeter in diameter. We don’t have a lot of data about the size of columns in a human brain. For simplicity, I will continue to refer to columns as being one square millimeter, endowing each of us with about 150,000 cortical columns. Even though the actual number will likely vary from this, it won’t make a difference for our purposes.
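For readers who like to check the arithmetic, here is a rough back-of-the-envelope sketch of how the 150,000-column figure relates to the napkin-size sheet described earlier. It uses only the round numbers from the text. Treating each column as a square tile, and the function names themselves, are my own simplifying assumptions for illustration, not measurements.

```python
import math

# Round numbers taken from the text.
N_COLUMNS = 150_000        # rough column count used in this book
COLUMN_AREA_MM2 = 1.0      # simplification: one square millimeter per column
THICKNESS_MM = 2.5         # thickness of the neocortex


def sheet_area_mm2(n_columns, column_area_mm2):
    """Total surface area if the columns tile the sheet with no gaps."""
    return n_columns * column_area_mm2


def columns_for_width(total_area_mm2, column_width_mm):
    """Column count for a given width, assuming square columns (an assumption)."""
    return round(total_area_mm2 / column_width_mm ** 2)


area = sheet_area_mm2(N_COLUMNS, COLUMN_AREA_MM2)
side_cm = math.sqrt(area) / 10            # side of an equivalent square, in cm
volume_cm3 = area * THICKNESS_MM / 1000   # 1 cm^3 = 1,000 mm^3

print(f"area: {area:,.0f} mm^2, about a {side_cm:.1f} cm square")
print(f"volume: {volume_cm3:.0f} cm^3")
print(f"columns if each were 0.5 mm wide: {columns_for_width(area, 0.5):,}")
```

A square roughly 39 centimeters on a side is in line with the large dinner napkin used as a comparison earlier. The last line shows why the exact count is soft: halving the assumed column width quadruples the number of columns, yet nothing about the argument changes.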

Cortical columns are not visible under a microscope. With a few exceptions, there are no visible boundaries between them. Scientists know they exist because all the cells in one column will respond to the same part of the retina, or the same patch of skin, but then cells in the next column will all respond to a different part of the retina or a different patch of skin. This grouping of responses is what defines a column. It is seen everywhere in the neocortex. Mountcastle pointed out that each column is further divided into several hundred “minicolumns.” If a cortical column is like a skinny strand of spaghetti, you can visualize minicolumns as even skinnier strands, like individual pieces of hair, stacked side by side inside the spaghetti strand. Each minicolumn contains a little over one hundred neurons spanning all layers. Unlike the larger cortical column, minicolumns are physically distinct and can often be seen with a microscope.

Mountcastle didn’t know and he didn’t suggest what columns or minicolumns do. He only proposed that each column is doing the same thing and that minicolumns are an important subcomponent.

Let’s review. The neocortex is a sheet of tissue about the size of a large napkin. It is divided into dozens of regions that do different things. Each region is divided into thousands of columns. Each column is composed of several hundred hairlike minicolumns, which consist of a little over one hundred cells each. Mountcastle proposed that throughout the neocortex columns and minicolumns performed the same function: implementing a fundamental algorithm that is responsible for every aspect of perception and intelligence.
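The counts in this review can be multiplied out to see whether they hang together. The sketch below is only an illustration built from the round figures quoted in this chapter and the previous one. Two values are my own placeholders and are marked as assumptions in the code: 110 neurons stands in for "a little over one hundred," and 50 regions stands in for "dozens."

```python
# Round figures from the text.
N_COLUMNS = 150_000                  # columns, at one square millimeter each
NEURONS_PER_COLUMN = 100_000         # neurons under one square millimeter
SYNAPSES_PER_COLUMN = 500_000_000    # synapses under one square millimeter

# Placeholders chosen for illustration only (assumptions, not from the text).
NEURONS_PER_MINICOLUMN = 110         # stands in for "a little over one hundred"
N_REGIONS = 50                       # stands in for "dozens of regions"

total_neurons = N_COLUMNS * NEURONS_PER_COLUMN
total_synapses = N_COLUMNS * SYNAPSES_PER_COLUMN
synapses_per_neuron = SYNAPSES_PER_COLUMN // NEURONS_PER_COLUMN
minicolumns_per_column = round(NEURONS_PER_COLUMN / NEURONS_PER_MINICOLUMN)
columns_per_region = N_COLUMNS // N_REGIONS

print(f"neurons in the neocortex: {total_neurons:,}")
print(f"synapses in the neocortex: {total_synapses:,}")
print(f"synapses per neuron: {synapses_per_neuron:,}")
print(f"minicolumns per column: {minicolumns_per_column:,}")
print(f"columns per region: {columns_per_region:,}")
```

With these inputs the totals come to about 15 billion neurons, with each neuron averaging about five thousand synapses. The implied number of minicolumns per column lands in the several hundreds, and the implied number of columns per region lands in the thousands, so the round numbers are at least consistent with one another.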

Mountcastle based his proposal for a universal algorithm on several lines of evidence. First, as I have already mentioned, is that the detailed circuits seen everywhere in the neocortex are remarkably similar. If I showed you two silicon chips with nearly identical circuit designs, it would be safe to assume that they performed nearly identical functions. The same argument applies to the detailed circuits of the neocortex. Second is that the major expansion of the modern human neocortex relative to our hominid ancestors occurred rapidly in evolutionary time, just a few million years. This is probably not enough time for multiple new complex capabilities to be discovered by evolution, but it is plenty of time for evolution to make more copies of the same thing. Third is that the function of neocortical regions is not set in stone. For example, in people with congenital blindness, the visual areas of the neocortex do not get useful information from the eyes. These areas may then assume new roles related to hearing or touch. Finally, there is the argument of extreme flexibility. Humans can do many things for which there was no evolutionary pressure. For example, our brains did not evolve to program computers or make ice cream—both are recent inventions. The fact that we can do these things tells us that the brain relies on a general-purpose method of learning. To me, this last argument is the most compelling. Being able to learn practically anything requires the brain to work on a universal principle.

There are more pieces of evidence that support Mountcastle’s proposal. But despite this, his idea was controversial when he introduced it, and it remains somewhat controversial today. I believe there are two related reasons. One is that Mountcastle didn’t know what a cortical column does. He made a surprising claim built on a lot of circumstantial evidence, but he didn’t propose how a cortical column could actually do all the things we associate with intelligence. The other reason is that the implications of his proposal are hard for some people to believe. For example, you may have trouble accepting that vision and language are fundamentally the same. They don’t feel the same. Given these uncertainties, some scientists reject Mountcastle’s proposal by pointing out that there are differences between neocortical regions. The differences are relatively small compared to the similarities, but if you focus on them you can argue that different regions of the neocortex are not the same.

Mountcastle’s proposal looms in neuroscience like a holy grail. No matter what animal or what part of the brain a neuroscientist studies, somewhere, overtly or covertly, almost all neuroscientists want to understand how the human brain works. And that means understanding how the neocortex works. And that requires understanding what a cortical column does. In the end, our quest to understand the brain, our quest to understand intelligence, boils down to figuring out what a cortical column does and how it does it. Cortical columns are not the only mystery of the brain or the only mystery related to the neocortex. But understanding the cortical column is by far the largest and most important piece of the puzzle.

In 2005, I was invited to give a talk about our research at Johns Hopkins University. I talked about our quest to understand the neocortex, how we were approaching the problem, and the progress we had made. After giving a talk like this, the speaker often meets with individual faculty. On this trip, my final visit was with Vernon Mountcastle and the dean of his department. I felt honored to meet the man who had provided so much insight and inspiration during my life. At one point during our conversation, Mountcastle, who had attended my lecture, said that I should come to work at Johns Hopkins and that he would arrange a position for me. His offer was unexpected and unusual. I could not seriously consider it due to my family and business commitments back in California, but I reflected back to 1986, when my proposal to study the neocortex was rejected by UC Berkeley. How I would have leaped to accept his offer back then.

Before leaving, I asked Mountcastle to sign my well-read copy of The Mindful Brain. As I walked away, I was both happy and sad. I was happy to have met him and relieved that he thought highly of me. I felt sad knowing it was possible that I might never see him again. Even if I succeeded in my quest, I might not be able to share with him what I had learned and get his help and feedback. As I walked to my cab, I felt determined to complete his mission.

CHAPTER 3

A Model of the World in Your Head

What the brain does may seem obvious to you. The brain gets inputs from its sensors, it processes those inputs, and then it acts. In the end, how an animal reacts to what it senses determines its success or failure. A direct mapping from sensory input to action certainly applies to some parts of the brain. For example, accidentally touching a hot surface will cause a reflex retraction of the arm. The input-output circuit responsible is located in the spinal cord. But what about the neocortex? Can we say that the task of the neocortex is to take inputs from sensors and then immediately act? In short, no.

You are reading or listening to this book and it isn’t causing any immediate actions other than perhaps turning pages or touching a screen. Thousands of words are streaming into your neocortex and, for the most part, you are not acting on them. Maybe later you will act differently for having read this book. Perhaps you will have future conversations about brain theory and the future of humanity that you would not have had if you didn’t read this book. Perhaps your future thoughts and word choices will be subtly influenced by my words. Perhaps you will work on creating intelligent machines based on brain principles, and my words will inspire you in this direction. But right now, you are just reading. If we insist on describing the neocortex as an input-output system, then the best we could say is that the neocortex gets lots of inputs, it learns from these inputs, and then, later—maybe hours, maybe years—it acts differently based on these prior inputs.

From the moment I became interested in how the brain worked, I realized that thinking of the neocortex as an input-leads-to-output system would not be fruitful. Fortunately, when I was a graduate student at Berkeley I had an insight that led me down a different and more successful path. I was at home, working at my desk. There were dozens of objects on the desk and in the room. I realized that if any one of these objects changed, in even the slightest way, I would notice it. My pencil cup was always on the right side of the table; if one day I found it on the left, I would notice the change and wonder how it got moved. If the stapler changed in length, I would notice. I would notice the change if I touched the stapler or if I looked at it. I would even notice if the stapler made a different sound when being used. If the clock on the wall changed its location or style, I would notice. If the cursor on my computer screen moved left when I moved the mouse to the right, I would immediately realize something was wrong. What struck me was that I would notice these changes even if I wasn’t attending to these objects. As I looked around the room, I didn’t ask, “Is my stapler the correct length?” I didn’t think, “Check to make sure the clock’s hour hand is still shorter than the minute hand.” Changes to the normal would just pop into my head, and my attention would then be drawn to them. There were literally thousands of possible changes in my environment that my brain would notice almost instantly.

There was only one explanation I could think of. My brain, specifically my neocortex, was making multiple simultaneous predictions of what it was about to see, hear, and feel. Every time I moved my eyes, my neocortex made predictions of what it was about to see. Every time I picked something up, my neocortex made predictions of what each finger should feel. And every action I took led to predictions of what I should hear. My brain predicted the smallest stimuli, such as the texture of the handle on my coffee cup, and large conceptual ideas, such as the correct month that should be displayed on a calendar. These predictions occurred in every sensory modality, for low-level sensory features and high-level concepts, which told me that every part of the neocortex, and therefore every cortical column, was making predictions. Prediction was a ubiquitous function of the neocortex.

At that time, few neuroscientists described the brain as a prediction machine. Focusing on how the neocortex made many parallel predictions would be a novel way to study how it worked. I knew that prediction wasn’t the only thing the neocortex did, but prediction represented a systematic way of attacking the cortical column’s mysteries. I could ask specific questions about how neurons make predictions under different conditions. The answers to these questions might reveal what cortical columns do, and how they do it.

To make predictions, the brain has to learn what is normal—that is, what should be expected based on past experience. My previous book, On Intelligence, explored this idea of learning and prediction. In the book, I used the phrase “the memory prediction framework” to describe the overall idea, and I wrote about the implications of thinking about the brain this way. I argued that by studying how the neocortex makes predictions, we would be able to unravel how the neocortex works.

Today I no longer use the phrase “the memory prediction framework.” Instead, I describe the same idea by saying that the neocortex learns a model of the world, and it makes predictions based on its model. I prefer the word “model” because it more precisely describes the kind of information that the neocortex learns. For example, my brain has a model of my stapler. The model of the stapler includes what the stapler looks like, what it feels like, and the sounds it makes when being used. The brain’s model of the world includes where objects are and how they change when we interact with them. For example, my model of the stapler includes how the top of the stapler moves relative to the bottom and how a staple comes out when the top is pressed down. These actions may seem simple, but you were not born with this knowledge. You learned it at some point in your life and now it is stored in your neocortex.

The brain creates a predictive model. This just means that the brain continuously predicts what its inputs will be. Prediction isn’t something that the brain does every now and then; it is an intrinsic property that never stops, and it serves an essential role in learning. When the brain’s predictions are verified, that means the brain’s model of the world is accurate. A mis-prediction causes you to attend to the error and update the model.
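The predict, compare, and update loop described above can be illustrated with a small Python sketch. It is only a toy under stated assumptions: the `PredictiveModel` class, its `observe` method, and the feature names are hypothetical, and the "model" is nothing more than a record of the last sensation seen for each feature. It is not a claim about how the neocortex stores its model.

```python
class PredictiveModel:
    """Toy model: remembers the last sensation observed for each feature."""

    def __init__(self):
        # What the model currently expects for each feature (assumed structure).
        self.expected = {}

    def observe(self, feature, sensation):
        """Compare the input with the prediction; update the model on a mismatch.

        Returns True when the input was a mis-prediction.
        """
        predicted = self.expected.get(feature)
        surprised = predicted != sensation
        if surprised:
            # A mis-prediction draws attention and the model is corrected.
            self.expected[feature] = sensation
        return surprised


model = PredictiveModel()
first = model.observe("cup weight", "light")   # nothing learned yet: a surprise
second = model.observe("cup weight", "light")  # prediction verified: goes unnoticed
third = model.observe("cup weight", "heavy")   # mismatch: noticed, model updated
```

In this sketch, a verified prediction changes nothing, which mirrors the point that correct predictions pass without our being aware of them.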

We are not aware of the vast majority of these predictions unless the input to the brain does not match them. As I casually reach out to grab my coffee cup, I am not aware that my brain is predicting what each finger will feel, how heavy the cup should be, the temperature of the cup, and the sound the cup will make when I place it back on my desk. But if the cup were suddenly heavier, or cold, or squeaked, I would notice the change. We can be certain that these predictions are occurring because even a small change in any of these inputs will be noticed. But when a prediction is correct, as most will be, we won’t be aware that it ever occurred.

When you are born, your neocortex knows almost nothing. It doesn’t know any words, what buildings are like, how to use a computer, or what a door is and how it moves on hinges. It has to learn countless things. The overall structure of the neocortex is not random. Its size, the number of regions it has, and how they are connected together are largely determined by our genes. For example, genes determine what parts of the neocortex are connected to the eyes, what other parts are connected to the ears, and how those parts connect to each other. Therefore, we can say that the neocortex is structured at birth to see, hear, and even learn language. But it is also true that the neocortex doesn’t know what it will see, what it will hear, and what specific languages it might learn. We can think of the neocortex as starting life having some built-in assumptions about the world but knowing nothing in particular. Through experience, it learns a rich and complicated model of the world.

The number of things the neocortex learns is huge. I am sitting in a room with hundreds of objects. I will randomly pick one: a printer. I have learned a model of the printer that includes it having a paper tray, and how the tray moves in and out of the printer. I know how to change the size of the paper and how to unwrap a new ream and place it in the tray. I know the steps I need to take to clear a paper jam. I know that the power cord has a D-shaped plug at one end and that it can only be inserted in one orientation. I know the sound of the printer and how that sound is different when it is printing on two sides of a sheet of paper rather than on one. Another object in my room is a small, two-drawer file cabinet. I can recall dozens of things I know about the cabinet, including what is in each drawer and how the objects in the drawer are arranged. I know there is a lock, where the key is, and how to insert and turn the key to lock the cabinet. I know how the key and lock feel and the sounds they make as I use them. The key has a small ring attached to it and I know how to use my fingernail to pry open the ring to add or remove keys.

Imagine going room to room in your home. In each room you can think of hundreds of things, and for each item you can follow a cascade of learned knowledge. You can also do the same exercise for the town you live in, recalling what buildings, parks, bike racks, and individual trees exist at different locations. For each item, you can recall experiences associated with it and how you interact with it. The number of things you know is enormous, and the associated links of knowledge seem never-ending.

We learn many high-level concepts too. It is estimated that each of us knows about forty thousand words. We have the ability to learn spoken language, written language, sign language, the language of mathematics, and the language of music. We learn how electronic forms work, what thermostats do, and even what empathy or democracy mean, although our understanding of these may differ. Independent of what other things the neocortex might do, we can say for certain that it learns an incredibly complex model of the world. This model is the basis of our predictions, perceptions, and actions.

Learning Through Movement

The inputs to the brain are constantly changing. There are two reasons why. First, the world can change. For example, when listening to music, the inputs from the ears change rapidly, reflecting the movement of the music. Similarly, a tree swaying in the breeze will lead to visual and perhaps auditory changes. In these two examples, the inputs to the brain are changing from moment to moment, not because you are moving but because things in the world are moving and changing on their own.

The second reason is that we move. Every time we take a step, move a limb, move our eyes, tilt our head, or utter a sound, the input from our sensors changes. For example, our eyes make rapid movements, called saccades, about three times a second. With each saccade, our eyes fixate on a new point in the world and the information from the eyes to the brain changes completely. This change would not occur if we hadn’t moved our eyes.

The brain learns its model of the world by observing how its inputs change over time. There isn’t another way to learn. Unlike with a computer, we cannot upload a file into our brain. The only way for a brain to learn anything is via changes in its inputs. If the inputs to the brain were static, nothing could be learned.

Some things, like a melody, can be learned without moving the body. We can sit perfectly still, with eyes closed, and learn a new melody by just listening to how the sounds change over time. But most learning requires that we actively move and explore. Imagine you enter a new house, one you have not been in before. If you don’t move, there will be no changes in your sensory input, and you can’t possibly learn anything about the house. To learn a model of the house, you have to look in different directions and walk from room to room. You need to open doors, peek in drawers, and pick up objects. The house and its contents are mostly static; they don’t move on their own. To learn a model of a house, you have to move.

Take a simple object such as a computer mouse. To learn what a mouse feels like, you have to run your fingers over it. To learn what a mouse looks like, you have to look at it from different angles and fixate your eyes on different locations. To learn what a mouse does, you have to press down on its buttons, slide off the battery cover, or move it across a mouse pad to see, feel, and hear what happens.

The term for this is sensory-motor learning. In other words, the brain learns a model of the world by observing how our sensory inputs change as we move. We can learn a song without moving because, unlike the order in which we can move from room to room in a house, the order of notes in a song is fixed. But most of the world isn’t like that; most of the time we have to move to discover the structure of objects, places, and actions. With sensory-motor learning, unlike with a melody, the order of sensations is not fixed. What I see when I enter a room depends on which direction I turn my head. What my finger feels when holding a coffee cup depends on whether I move my finger up or down or sideways.

With each movement, the neocortex predicts what the next sensation will be. Move my finger up on the coffee cup and I expect to feel the lip, move my finger sideways and I expect to feel the handle. If I turn my head left when entering my kitchen, I expect to see my refrigerator, and if I turn my head right, I expect to see the range. If I move my eyes to the left front burner, I expect to see the broken igniter that I need to fix. If any input doesn’t match the brain’s prediction—perhaps my spouse fixed the igniter—then my attention is drawn to the area of mis-prediction. This alerts the neocortex that its model of that part of the world needs to be updated.
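One way to picture movement-dependent prediction is as a lookup keyed on both where the sensor is and how it moves. The Python sketch below is a minimal illustration under loud assumptions: `cup_model`, `predict`, `sense`, and the location and movement labels are all invented for this example, and a dictionary stands in for whatever the neocortex actually does.

```python
# Toy model of a coffee cup (all names are illustrative assumptions).
# Key: (where the finger is, how it moves) -> sensation expected next.
cup_model = {
    ("side", "up"): "lip",
    ("side", "sideways"): "handle",
}


def predict(model, location, movement):
    """Return the expected sensation, or None if this movement is unexplored."""
    return model.get((location, movement))


def sense(model, location, movement, actual):
    """Compare the prediction with the actual sensation; learn on a mismatch.

    Returns True when the input was a mis-prediction (attention is drawn).
    """
    mismatch = predict(model, location, movement) != actual
    if mismatch:
        # Update only the part of the model that was wrong or missing.
        model[(location, movement)] = actual
    return mismatch


up = predict(cup_model, "side", "up")              # expect the lip
sideways = predict(cup_model, "side", "sideways")  # expect the handle
surprise = sense(cup_model, "side", "down", "base")     # unexplored: model updated
no_surprise = sense(cup_model, "side", "down", "base")  # now predicted correctly
```

Because the key includes the movement, the same starting location yields different predictions, which is the contrast with a melody, where the next note depends only on what came before.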

The question of how the neocortex works can now be phrased more precisely: How does the neocortex, which is composed of thousands of nearly identical cortical columns, learn a predictive model of the world through movement?

This is the question my team and I set out to answer. Our belief was that if we could answer it, we could reverse engineer the neocortex. We would understand both what the neocortex did and how it did it. And ultimately, we would be able to build machines that worked the same way.

Two Tenets of Neuroscience

Before we can start answering the question above, there are a few more basic ideas you need to know. First, like every other part of the body, the brain is composed of cells. The brain’s cells, called neurons, are in many ways similar to all our other cells. For example, a neuron has a cell membrane that defines its boundary and a nucleus that contains DNA. However, neurons have several unique properties that don’t exist in other cells in your body.

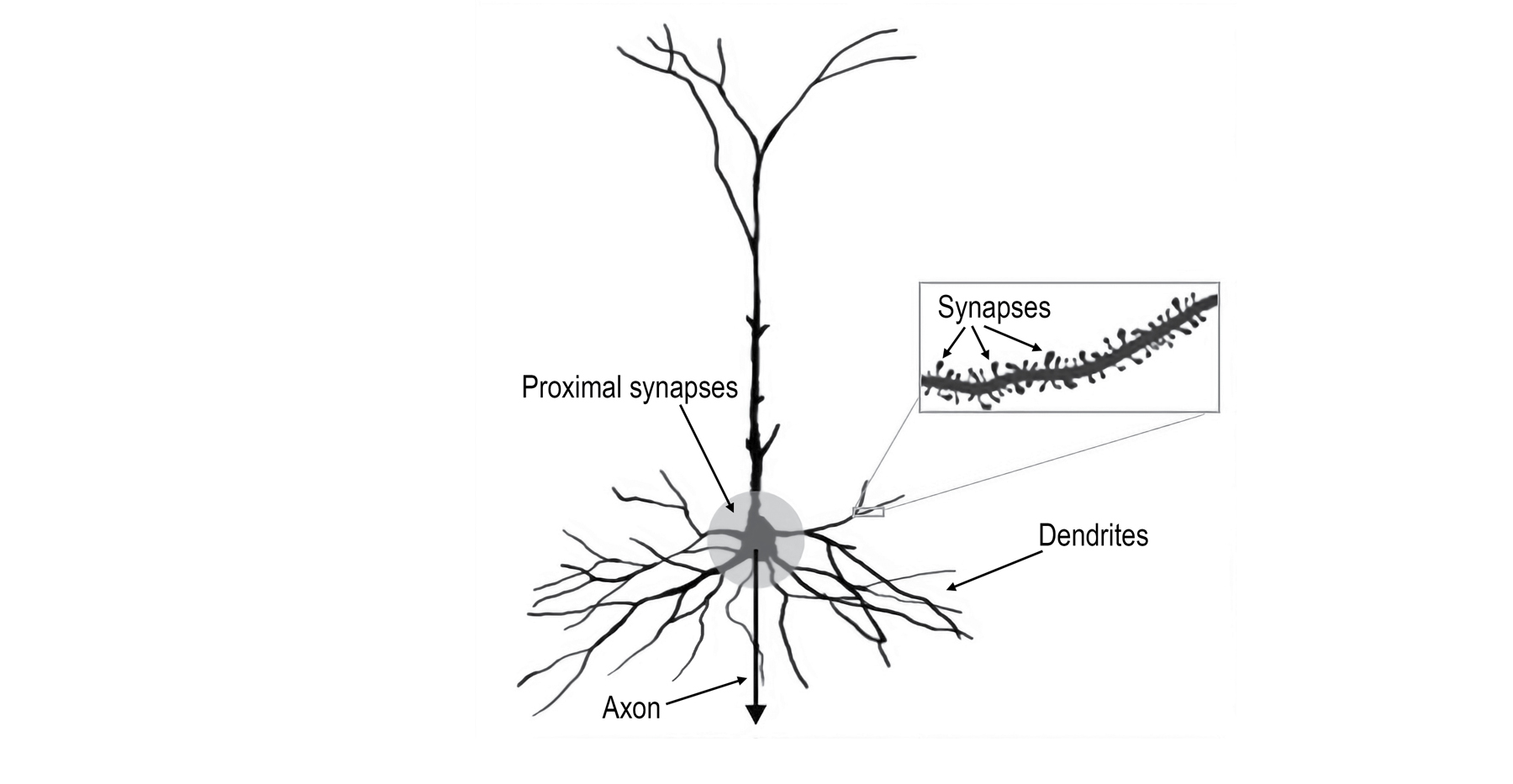

The first is that neurons look like trees. They have branch-like extensions of the cell membrane, called axons and dendrites. The dendrite branches are clustered near the cell and collect the inputs. The axon is the output. It makes many connections to nearby neurons but often travels long distances, such as from one side of the brain to the other or from the neocortex all the way down to the spinal cord.

The second difference is that neurons create spikes, also called action potentials. An action potential is an electrical signal that starts near the cell body and travels along the axon until it reaches the end of every branch.

The third unique property is that the axon of one neuron makes connections to the dendrites of other neurons. The connection points are called synapses. When a spike traveling along an axon reaches a synapse, it releases a chemical that enters the dendrite of the receiving neuron. Depending on which chemical is released, it makes the receiving neuron more or less likely to generate its own spike.
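The idea that some synapses push a neuron toward spiking while others hold it back can be sketched with a very simple threshold unit. This is a deliberately crude Python illustration, not a biological model: the function name `will_spike`, the numeric weights, and the fixed threshold are assumptions, and a hard yes-or-no rule replaces the "more or less likely" behavior described above.

```python
def will_spike(active_synapses, threshold=1.0):
    """Decide whether a toy neuron spikes.

    active_synapses: effects of the synapses that just received a spike.
    Positive values stand for chemicals that make a spike more likely,
    negative values for chemicals that make it less likely (assumed encoding).
    """
    return sum(active_synapses) >= threshold


excited = will_spike([0.6, 0.6])           # two excitatory inputs reach threshold
inhibited = will_spike([0.6, 0.6, -0.5])   # an inhibitory input holds the neuron back
```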